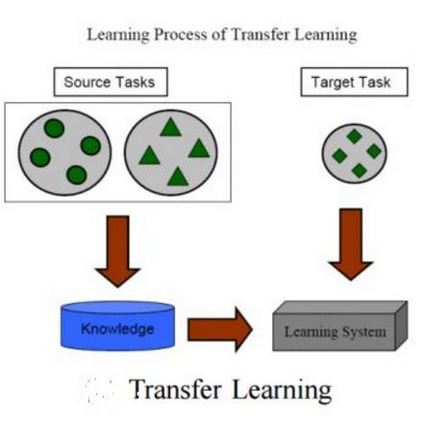

Supervised deep learning approaches have been applied to task-oriented dialog and have proven effective for applications restricted to a limited set of domains and languages, provided that enough training examples are available. In practice, however, these approaches suffer from the drawbacks of domain-driven design and from the scarcity of resources in many languages, whereas domain and language models are expected to grow and change as the problem space evolves. On the one hand, research on transfer learning has demonstrated the cross-lingual ability of multilingual Transformer-based models to learn semantically rich representations. On the other hand, meta-learning has enabled the development of task- and language-learning algorithms capable of broad generalization. In this context, this article investigates the cross-lingual transferability obtained by combining few-shot learning, based on prototypical neural networks, with multilingual Transformer-based models. Experiments on natural language understanding tasks from the MultiATIS++ corpus show that our approach substantially improves the observed transfer learning performance between low- and high-resource languages. More generally, our approach confirms that the meaningful latent space learned in a given language can be generalized to unseen and under-resourced languages using meta-learning.
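
The classification step of a prototypical network can be sketched in a few lines. This is a minimal, hedged illustration, not the authors' implementation: it assumes sentence embeddings have already been produced by some multilingual encoder (replaced here by toy 2-D vectors), and the function names, the intent labels `flight` and `airfare`, and the use of squared Euclidean distance are illustrative assumptions. Each class prototype is the mean of its support embeddings, and a query is scored by a softmax over its negative distances to the prototypes.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def mean_vector(vectors: List[Vector]) -> List[float]:
    """Component-wise mean of equally sized vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def squared_euclidean(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def compute_prototypes(support: List[Tuple[Vector, str]]) -> Dict[str, List[float]]:
    """Return one prototype per label: the mean embedding of its support examples."""
    by_label: Dict[str, List[Vector]] = defaultdict(list)
    for embedding, label in support:
        by_label[label].append(embedding)
    return {label: mean_vector(vecs) for label, vecs in by_label.items()}


def classify(query: Vector, prototypes: Dict[str, List[float]]) -> Dict[str, float]:
    """Softmax over negative squared distances from the query to each prototype."""
    logits = {label: -squared_euclidean(query, p) for label, p in prototypes.items()}
    top = max(logits.values())  # shift by the max logit for numerical stability
    exps = {label: math.exp(z - top) for label, z in logits.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


# Toy episode: hypothetical 2-D vectors standing in for multilingual encoder outputs.
# The support set could come from a high-resource language, the query from another one.
support_set = [
    ([1.0, 0.0], "flight"),
    ([0.8, 0.2], "flight"),
    ([0.0, 1.0], "airfare"),
    ([0.2, 0.8], "airfare"),
]
prototypes = compute_prototypes(support_set)
probs = classify([0.7, 0.3], prototypes)
print(max(probs, key=probs.get))  # flight
```

In episodic meta-training, the negative log-probability assigned to the query's true label would typically serve as the loss used to fine-tune the encoder; that training loop is omitted from this sketch.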
