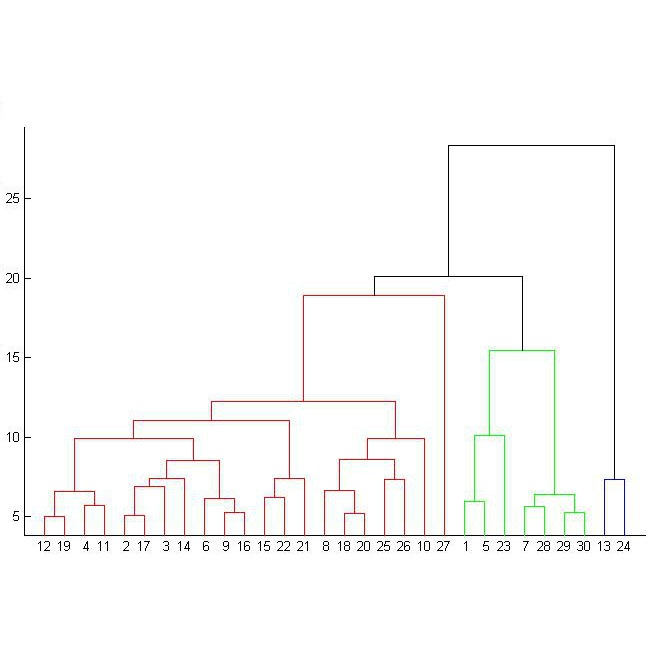

In this paper we address imbalanced binary classification (IBC) tasks. A common approach to these problems is to apply resampling strategies that balance the class distribution of the training instances. Many state-of-the-art methods identify instances of interest close to the decision boundary to drive the resampling process. However, under-sampling the majority class may discard important information, while over-sampling may increase the risk of overfitting by propagating the information contained in minority-class instances. The main contribution of our work is a new method for IBC tasks, called ICLL, which is not based on resampling the training observations. Instead, ICLL follows a layered learning paradigm to model the data in two stages. In the first layer, ICLL learns to distinguish cases close to the decision boundary from cases that clearly belong to the majority class, where this dichotomy is defined using a hierarchical clustering analysis. In the subsequent layer, we use the instances close to the decision boundary and the instances from the minority class to solve the original predictive task. A second contribution of our work is the automatic definition of the layers that comprise the layered learning strategy, using a hierarchical clustering model. This is relevant because this process is usually performed manually according to domain knowledge. We carried out extensive experiments using 100 benchmark data sets. The results show that the proposed method leads to better performance relative to several state-of-the-art methods for IBC.
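
The two-stage scheme described above can be illustrated with a small, dependency-free Python sketch. It is not the authors' ICLL implementation, and several details are assumptions: the hierarchical clustering uses naive average linkage cut at a fixed number of clusters (`n_clusters`); any cluster containing at least one minority instance is taken to form the "boundary" layer; a tiny k-nearest-neighbour scorer stands in for whatever base learners ICLL actually uses; and the two layers are combined by multiplying their probabilities. All function and class names (`agglomerative`, `define_layers`, `KNN`, `LayeredSketch`) are hypothetical.

```python
import math


def agglomerative(points, n_clusters):
    """Naive average-linkage agglomerative clustering (assumed linkage choice)."""
    clusters = [[i] for i in range(len(points))]
    while len(clusters) > n_clusters:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                # Average pairwise Euclidean distance between the two clusters.
                total = sum(math.dist(points[a], points[b])
                            for a in clusters[i] for b in clusters[j])
                d = total / (len(clusters[i]) * len(clusters[j]))
                if best is None or d < best[0]:
                    best = (d, i, j)
        _, i, j = best
        clusters[i].extend(clusters[j])  # merge the closest pair (j > i)
        del clusters[j]
    return clusters


def define_layers(X, y, n_clusters):
    """Label each instance 1 if its cluster holds any minority case, else 0.

    Assumption: clusters made only of majority cases are "clearly majority";
    every other cluster is treated as lying near the decision boundary.
    """
    boundary = [0] * len(X)
    for members in agglomerative(X, n_clusters):
        if any(y[i] == 1 for i in members):
            for i in members:
                boundary[i] = 1
    return boundary


class KNN:
    """Tiny k-nearest-neighbour scorer, a stand-in base learner (hypothetical)."""

    def __init__(self, k=3):
        self.k = k

    def fit(self, X, y):
        self.X, self.y = list(X), list(y)
        return self

    def predict_proba(self, x):
        # Fraction of the k nearest training points whose label is 1.
        order = sorted(range(len(self.X)), key=lambda i: math.dist(self.X[i], x))
        nearest = order[: self.k]
        return sum(self.y[i] for i in nearest) / len(nearest)


class LayeredSketch:
    """Two-layer model: boundary-vs-majority first, then the original task."""

    def __init__(self, n_clusters=2, k=3):
        self.n_clusters, self.k = n_clusters, k

    def fit(self, X, y):
        boundary = define_layers(X, y, self.n_clusters)
        # Layer 1: near the decision boundary (1) vs clearly majority (0).
        self.layer1 = KNN(self.k).fit(X, boundary)
        # Layer 2: original labels, trained only on boundary-layer instances,
        # which include every minority case by construction.
        keep = [i for i, b in enumerate(boundary) if b]
        self.layer2 = KNN(self.k).fit([X[i] for i in keep], [y[i] for i in keep])
        return self

    def predict_proba(self, x):
        # Assumed combination rule: P(boundary | x) * P(minority | x, boundary).
        return self.layer1.predict_proba(x) * self.layer2.predict_proba(x)


if __name__ == "__main__":
    X = [(0, 0), (0, 1), (1, 0), (1, 1),    # far-away majority group
         (10, 10), (10, 11), (11, 10),      # majority cases near the minority
         (10.5, 10.5), (11, 11)]            # minority cases
    y = [0, 0, 0, 0, 0, 0, 0, 1, 1]
    model = LayeredSketch(n_clusters=2, k=3).fit(X, y)
    print(model.predict_proba((0.5, 0.5)))              # → 0.0
    print(round(model.predict_proba((10.8, 10.8)), 3))  # → 0.667
```

Because every minority instance falls in a boundary cluster by construction, the second layer sees the full minority class plus only those majority cases that share a cluster with it, which mirrors the data split described in the abstract. The naive clustering loop is roughly cubic in the number of instances, so a practical version would rely on an optimized hierarchical clustering routine.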
