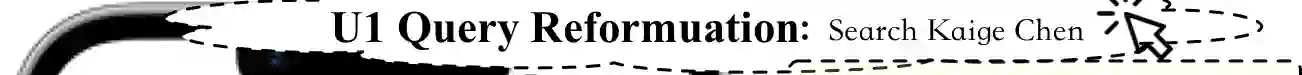

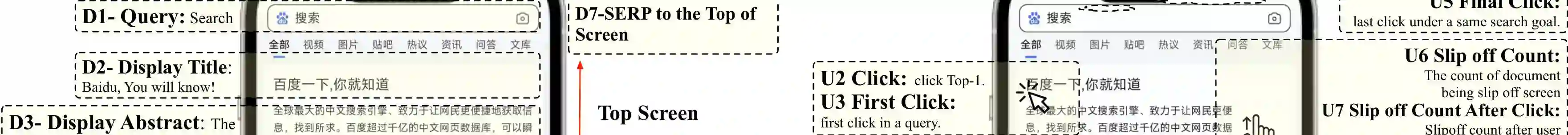

The unbiased learning to rank (ULTR) problem has been greatly advanced by recent deep learning techniques and well-designed debiasing algorithms. However, promising results on existing benchmark datasets may not carry over to practical scenarios because of the following shortcomings of those popular benchmarks: (1) outdated semantic feature extraction, in which state-of-the-art large-scale pre-trained language models such as BERT cannot be exploited because the original text is missing; (2) incomplete display features for in-depth study of ULTR, e.g., the displayed abstracts of documents, which are needed to analyze the click necessary bias, are missing; and (3) a lack of real-world user feedback, which has led to the prevalence of synthetic datasets in empirical studies. To overcome these shortcomings, we introduce the Baidu-ULTR dataset. It comprises 1.2 billion randomly sampled search sessions and 7,008 expert-annotated queries, which is orders of magnitude larger than existing datasets. Baidu-ULTR provides: (1) the original semantic features and a pre-trained language model for easy use; (2) sufficient display information, such as position, displayed height, and displayed abstract, enabling comprehensive study of different biases with advanced techniques such as causal discovery and meta-learning; and (3) rich user feedback on search result pages (SERPs), such as dwelling time, allowing for user engagement optimization and promoting the exploration of multi-task learning in ULTR. In this paper, we present the design principles of Baidu-ULTR and the performance of benchmark ULTR algorithms on this new data resource, which favors the exploration of ranking for long-tail queries and of pre-training tasks for ranking. The Baidu-ULTR dataset and the corresponding baseline implementation are available at https://github.com/ChuXiaokai/baidu_ultr_dataset.
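To make the display and feedback signals described above more concrete, here is a minimal sketch of how one might hold per-result display information (position, displayed height, displayed abstract) and user feedback (click, dwelling time) in memory, then compute a naive click-through rate per position. All class, function, and field names here are hypothetical illustrations. They are not the released Baidu-ULTR file format, so consult the repository for the actual schema. The 30-second dwell threshold is likewise an arbitrary assumption. Note that raw CTR per position mixes relevance with position bias. It is a descriptive statistic, not a debiasing method.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class DisplayedResult:
    """One document shown on a SERP (hypothetical field names, not the real schema)."""
    position: int            # 1-based rank on the result page
    displayed_height: int    # rendered height of the result (unit assumed, e.g. pixels)
    displayed_abstract: str  # snippet text shown to the user
    clicked: bool
    dwell_time: float = 0.0  # seconds spent after a click; 0.0 when not clicked


def ctr_by_position(sessions: list[list[DisplayedResult]]) -> dict[int, float]:
    """Naive click-through rate per position, pooled over all sessions."""
    shown: dict[int, int] = defaultdict(int)
    clicks: dict[int, int] = defaultdict(int)
    for session in sessions:
        for result in session:
            shown[result.position] += 1
            clicks[result.position] += int(result.clicked)
    # Return positions in ascending order.
    return {pos: clicks[pos] / shown[pos] for pos in sorted(shown)}


def satisfied_clicks(session: list[DisplayedResult], min_dwell: float = 30.0) -> list[int]:
    """Positions of clicks whose dwell time meets an assumed satisfaction threshold."""
    return [r.position for r in session if r.clicked and r.dwell_time >= min_dwell]


# Toy sessions (made-up values, for illustration only).
sessions = [
    [DisplayedResult(1, 120, "a", True, 45.0), DisplayedResult(2, 90, "b", False)],
    [DisplayedResult(1, 120, "c", False), DisplayedResult(2, 90, "d", True, 5.0)],
    [DisplayedResult(1, 100, "e", True, 12.0), DisplayedResult(2, 80, "f", False)],
]

print(ctr_by_position(sessions))
print(satisfied_clicks(sessions[0]))
```

In this toy data, position 1 is clicked in two of three sessions and position 2 in one. A ULTR method would try to separate how much of that gap comes from examination bias rather than relevance. Dwell-time filtering, as in `satisfied_clicks`, is one simple way to turn engagement feedback into a stronger label.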