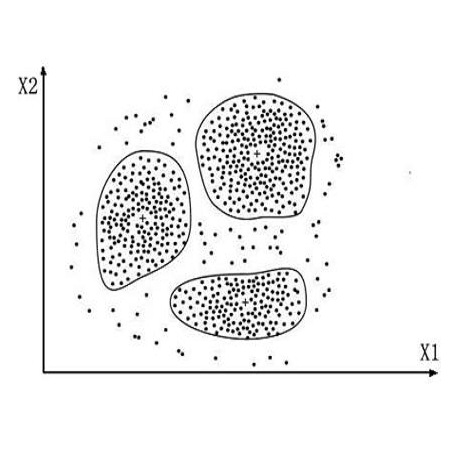

Short text clustering has far-reaching effects on semantic analysis and is important for many applications, such as corpus summarization and information retrieval. However, it inevitably suffers from the severe sparsity of short text representations, which leaves previous clustering approaches far from satisfactory. In this paper, we present a novel attentive representation learning model for short text clustering, in which cluster-level attention is proposed to capture the correlations between text representations and cluster representations. Building on this, representation learning and clustering for short texts are seamlessly integrated into a unified model. To further ensure robust model training for short texts, we apply adversarial training to the unsupervised clustering setting by injecting perturbations into the cluster representations. The model parameters and perturbations are optimized alternately through a minimax game. Extensive experiments on four real-world short text datasets demonstrate the superiority of the proposed model over several strong competitors, verifying that robust adversarial training yields substantial performance gains.
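The abstract does not give the model's equations, so the following is only a minimal sketch, under stated assumptions, of the two mechanisms it names. Cluster-level attention is assumed here to be a softmax over dot products between a text representation and each cluster representation. Adversarial training is assumed to be an alternating minimax step: first a perturbation `delta` on the cluster representations is chosen by one L2-normalised gradient-ascent step (in the style of the fast gradient method), then the cluster representations are updated by gradient descent on the perturbed loss. The reconstruction objective, the `epsilon` and `lr` values, and every function name below are hypothetical and are not taken from the paper.

```python
import math
import random

Vector = list[float]
Matrix = list[list[float]]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cluster_attention(texts: Matrix, clusters: Matrix) -> Matrix:
    """Assumed cluster-level attention: each row is a distribution over clusters."""
    return [softmax([dot(h, c) for c in clusters]) for h in texts]


def loss(texts: Matrix, clusters: Matrix) -> float:
    """Hypothetical objective: reconstruct each text from its attended cluster vectors."""
    attn = cluster_attention(texts, clusters)
    total = 0.0
    for h, a in zip(texts, attn):
        z = [sum(a[k] * clusters[k][j] for k in range(len(clusters)))
             for j in range(len(h))]
        total += sum((hj - zj) ** 2 for hj, zj in zip(h, z))
    return total / len(texts)


def grad_wrt_clusters(texts: Matrix, clusters: Matrix, step: float = 1e-5) -> Matrix:
    """Central finite-difference gradient of the loss w.r.t. every cluster entry."""
    grad = []
    for k in range(len(clusters)):
        row = []
        for j in range(len(clusters[k])):
            plus = [r[:] for r in clusters]
            minus = [r[:] for r in clusters]
            plus[k][j] += step
            minus[k][j] -= step
            row.append((loss(texts, plus) - loss(texts, minus)) / (2 * step))
        grad.append(row)
    return grad


def adversarial_perturbation(texts: Matrix, clusters: Matrix, epsilon: float) -> Matrix:
    """Inner max step: a perturbation of L2 norm epsilon along the loss gradient."""
    g = grad_wrt_clusters(texts, clusters)
    norm = math.sqrt(sum(x * x for row in g for x in row)) or 1.0
    return [[epsilon * x / norm for x in row] for row in g]


def minimax_step(texts: Matrix, clusters: Matrix,
                 epsilon: float = 0.05, lr: float = 0.1) -> Matrix:
    """One alternating round: fix clusters to pick delta, then fix delta to update clusters."""
    delta = adversarial_perturbation(texts, clusters, epsilon)
    perturbed = [[c + d for c, d in zip(cr, dr)] for cr, dr in zip(clusters, delta)]
    g = grad_wrt_clusters(texts, perturbed)  # gradient of L(C + delta) w.r.t. C
    return [[c - lr * x for c, x in zip(cr, gr)] for cr, gr in zip(clusters, g)]


if __name__ == "__main__":
    random.seed(0)
    texts = [[random.gauss(0, 1) for _ in range(4)] for _ in range(6)]
    clusters = [[random.gauss(0, 1) for _ in range(4)] for _ in range(3)]
    for _ in range(20):
        clusters = minimax_step(texts, clusters)
    # Hard cluster assignment = cluster with the largest attention weight.
    attn = cluster_attention(texts, clusters)
    print([max(range(len(a)), key=a.__getitem__) for a in attn])
```

Finite differences keep the sketch dependency-free; a practical implementation would instead learn the text encoder and compute gradients with automatic differentiation, and the paper's actual objective and perturbation scheme may differ from the ones assumed here.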

翻译:短文本群集对语义分析具有深远影响,表明其对诸如质谱汇总和信息检索等多种应用的重要性;然而,它不可避免地会遇到短文本代表形式极为分散的情况,使得先前的分组方法仍然远远不能令人满意;在本文件中,我们为短文本群集提出了一个新颖的专注代表性学习模式,其中提出了集束关注模式,以捕捉文本代表形式和分组代表形式之间的相互关系;根据这一点,短文本的代表学习和组合无缝地融入一个统一模式;为进一步确保短文本的强有力示范培训,我们通过向分组代表机构注入干扰,对无监督的分组设置采用对抗性培训;示范参数和扰动性通过小型游戏交替优化。关于四个现实世界短文本数据集的广泛实验表明拟议模式优于几个强大的竞争者,并证实强势的对立培训能够产生实质性的业绩收益。