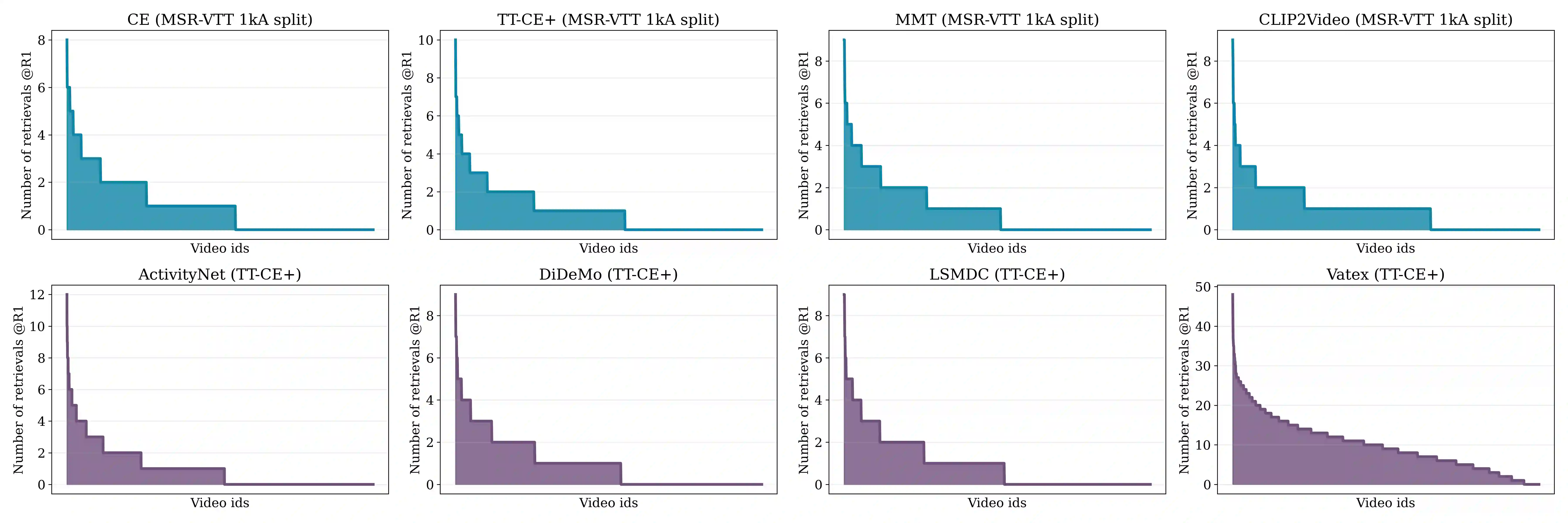

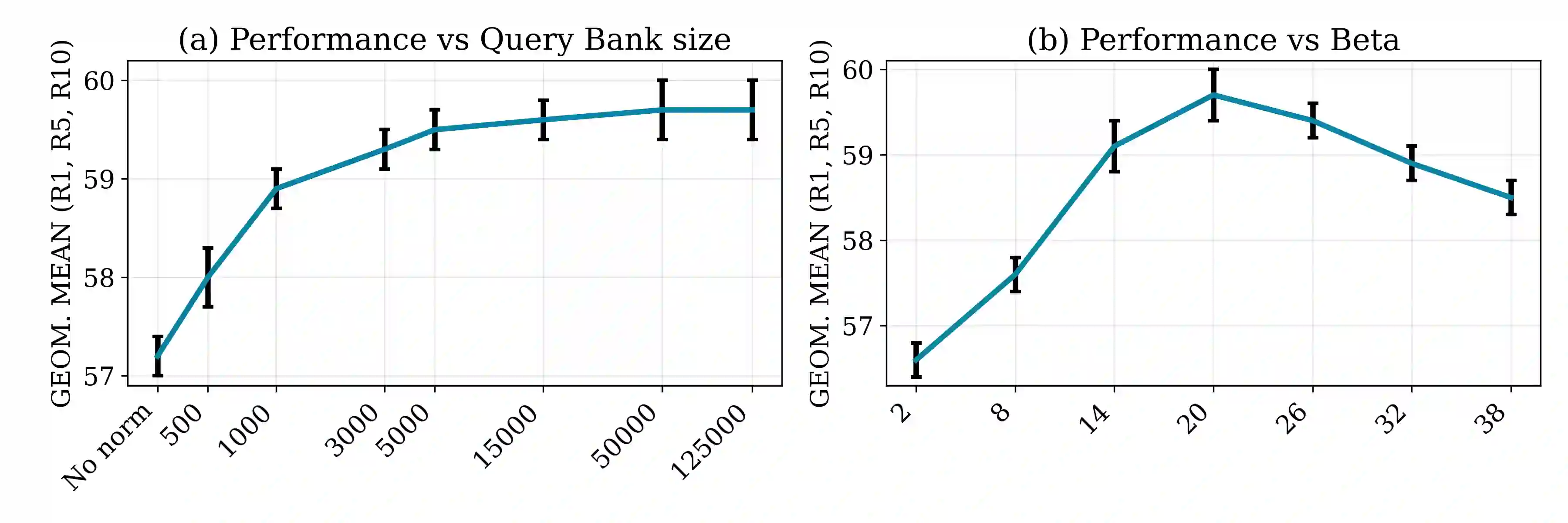

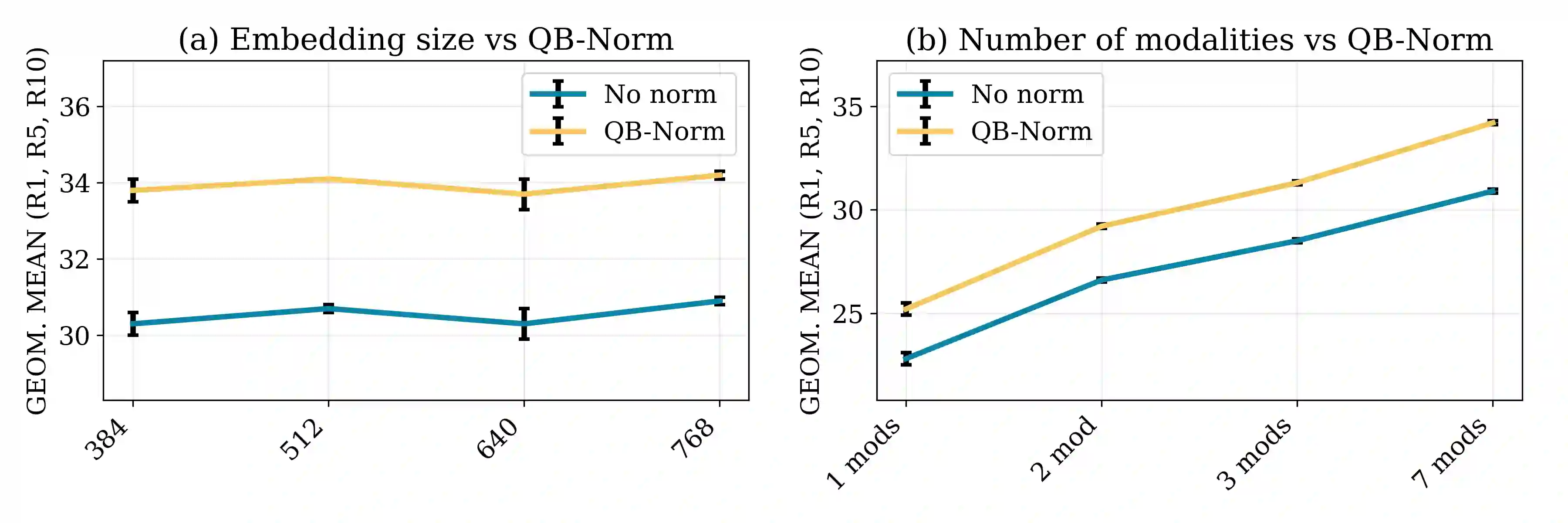

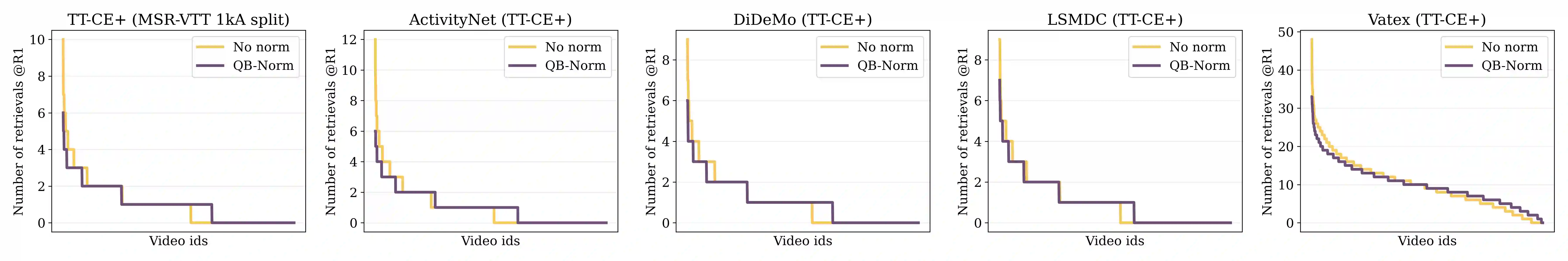

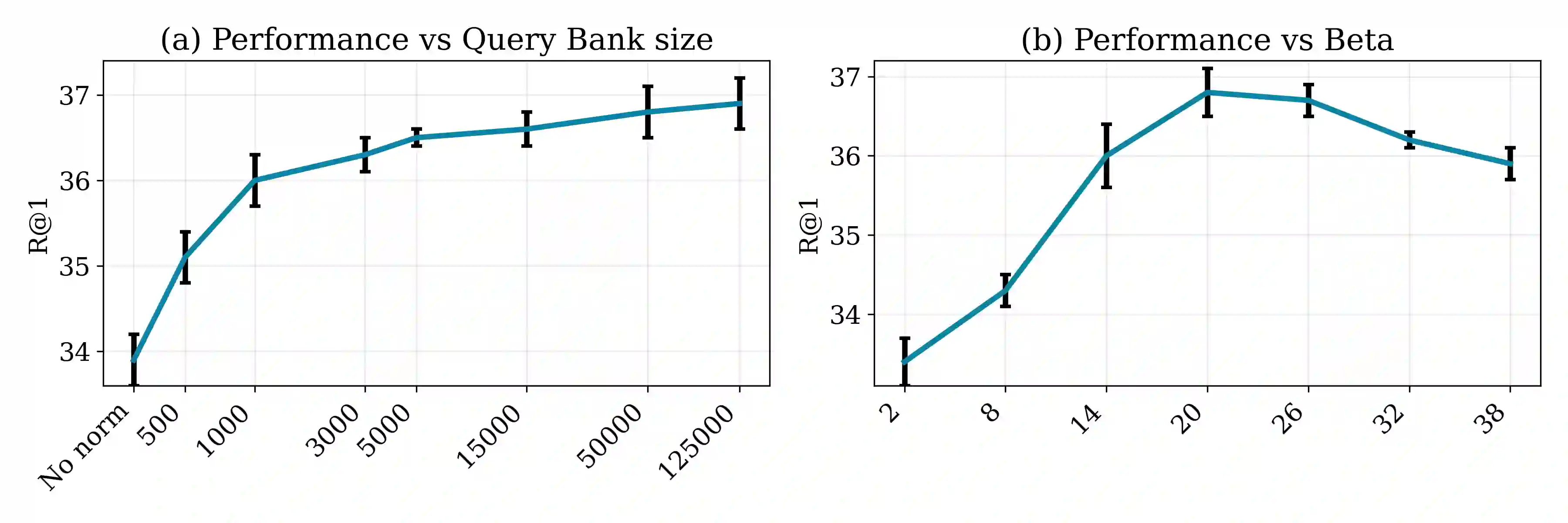

Profiting from large-scale training datasets, advances in neural architecture design and efficient inference, joint embeddings have become the dominant approach for tackling cross-modal retrieval. In this work we first show that, despite their effectiveness, state-of-the-art joint embeddings suffer significantly from the longstanding hubness problem, in which a small number of gallery embeddings form the nearest neighbours of many queries. Drawing inspiration from the NLP literature, we formulate a simple but effective framework called Querybank Normalisation (QB-Norm) that re-normalises query similarities to account for hubs in the embedding space. QB-Norm improves retrieval performance without requiring retraining. Unlike prior work, we show that QB-Norm works effectively without concurrent access to any test-set queries. Within the QB-Norm framework, we also propose a novel similarity normalisation method, the Dynamic Inverted Softmax, that is significantly more robust than existing approaches. We showcase QB-Norm across a range of cross-modal retrieval models and benchmarks, where it consistently enhances strong baselines beyond the state of the art. Code is available at https://vladbogo.github.io/QB-Norm/.
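
The idea of re-normalising similarities against a querybank can be illustrated with a short NumPy sketch. This is not the authors' released code; it rests on our own assumptions about the method. We assume the inverted-softmax form exp(β·s(q,g)) / Σ_b exp(β·s(b,g)), where the denominator sums over querybank queries b, so gallery items that score highly against many querybank queries (hubs) are penalised. For the dynamic variant we assume an "activation set" rule: the normalisation is applied only when a test query's raw top-1 gallery item is also the top-1 match of some querybank query, and raw similarities are kept otherwise. The temperature β = 20, the top-1 rule, the function names and the toy data are all hypothetical choices made for illustration.

```python
import numpy as np


def inverted_softmax(sims, bank_sims, beta=20.0):
    """Inverted-softmax scores in log space (hypothetical helper).

    sims:      (Q, G) similarities between test queries and gallery items.
    bank_sims: (B, G) similarities between querybank queries and the gallery.
    Returns beta * s(q, g) - log sum_b exp(beta * s(b, g)), which orders each
    row exactly like exp(beta * s(q, g)) / sum_b exp(beta * s(b, g)).
    """
    scaled = beta * bank_sims
    # Numerically stable log-sum-exp over the querybank axis: one value per gallery item.
    col_max = scaled.max(axis=0)
    log_denom = col_max + np.log(np.exp(scaled - col_max).sum(axis=0))
    return beta * sims - log_denom  # (G,) broadcast across every query row


def activation_set(bank_sims):
    """Mask of gallery items that are the top-1 match of at least one querybank query.

    Assumption: top-1 activation rule (the method may use a different k).
    """
    active = np.zeros(bank_sims.shape[1], dtype=bool)
    active[bank_sims.argmax(axis=1)] = True
    return active


def dynamic_inverted_softmax(sims, bank_sims, beta=20.0):
    """Normalise only queries whose raw top-1 gallery item lies in the activation set."""
    active = activation_set(bank_sims)
    normed = inverted_softmax(sims, bank_sims, beta)
    use_is = active[sims.argmax(axis=1)]  # one flag per test query
    # Rows are ranked independently, so mixing normalised and raw rows is safe.
    return np.where(use_is[:, None], normed, sims)


# Hypothetical toy data: gallery item 0 is a hub, the top-1 match for every querybank query.
bank_sims = np.array([[0.9, 0.8, 0.1],
                      [0.9, 0.1, 0.8],
                      [0.9, 0.2, 0.2],
                      [0.8, 0.3, 0.1]])
sims = np.array([[0.85, 0.80, 0.10],   # raw top-1 is the hub (item 0)
                 [0.10, 0.20, 0.30]])  # raw top-1 is item 2, outside the activation set

scores = dynamic_inverted_softmax(sims, bank_sims)
print(sims.argmax(axis=1), scores.argmax(axis=1))  # [0 2] [1 2]
```

In this toy run the first query's retrieval moves away from the hub to item 1, while the second query is left untouched because its top match never appears in the activation set. Because the querybank is a fixed collection of queries gathered in advance, nothing here needs the other test queries, which is consistent with the claim above that QB-Norm works without concurrent access to the test set.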
