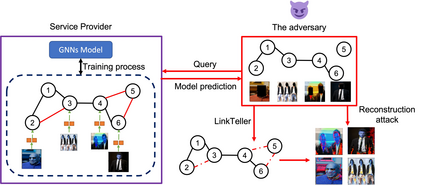

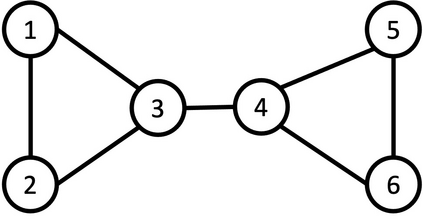

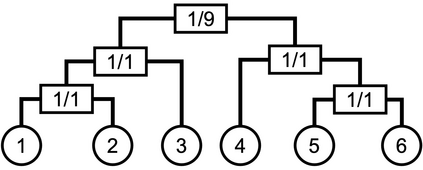

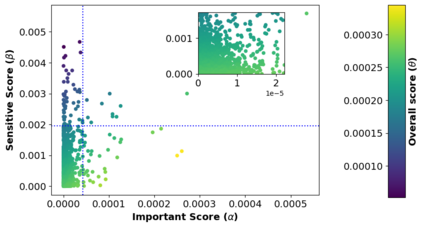

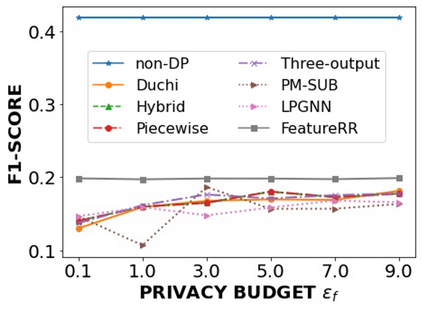

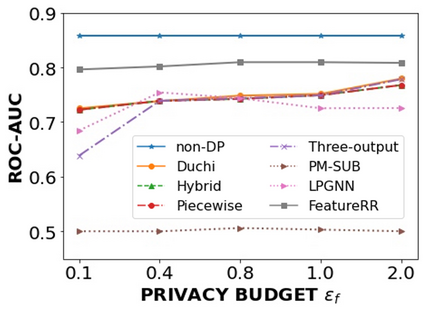

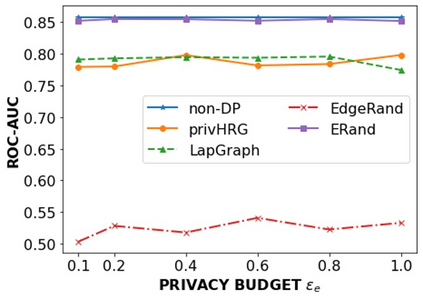

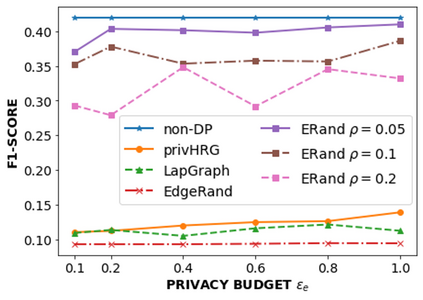

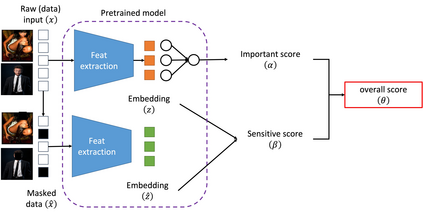

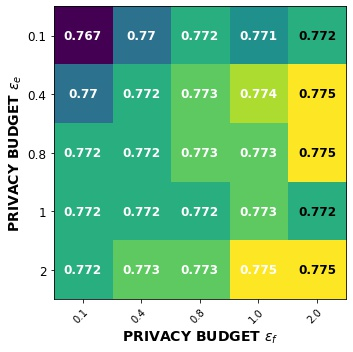

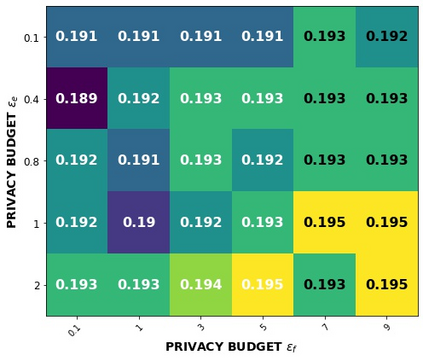

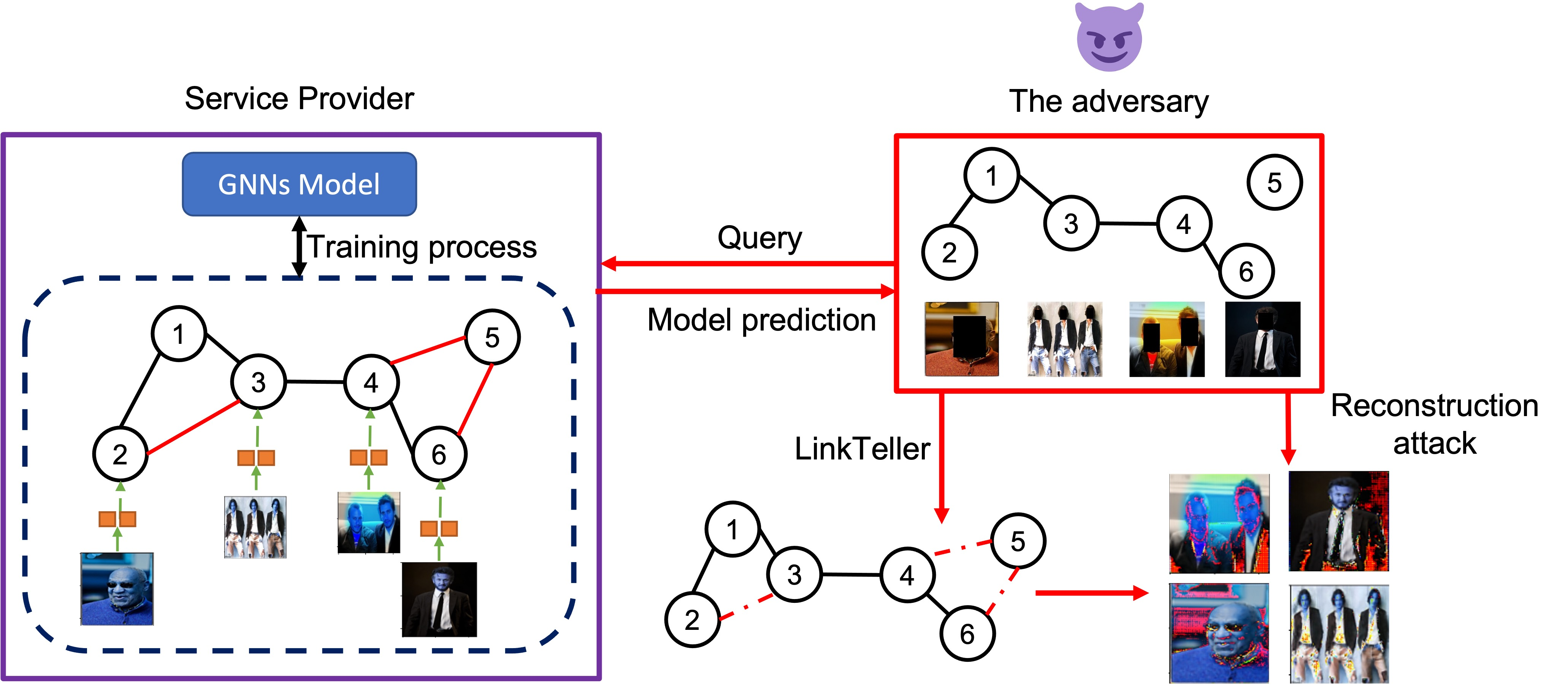

Graph neural networks (GNNs) are susceptible to privacy inference attacks (PIAs) because they learn joint representations from node features and the edges among nodes in graph data. To prevent privacy leakage in GNNs, we propose a novel heterogeneous randomized response (HeteroRR) mechanism that protects nodes' features and edges against PIAs under differential privacy (DP) guarantees without an undue cost to data and model utility in training GNNs. Our idea is to balance the importance and sensitivity of nodes' features and edges when redistributing the privacy budget, since some features and edges are more sensitive, or more important to model utility, than others. As a result, we derive significantly better randomization probabilities and tighter error bounds than existing approaches at the level of both nodes' features and edges, which enables us to maintain high data utility for training GNNs. Extensive theoretical and empirical analysis on benchmark datasets shows that HeteroRR significantly outperforms various baselines in terms of model utility under rigorous privacy protection for both nodes' features and edges. This enables us to defend effectively against PIAs in DP-preserving GNNs.
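To make the budget-redistribution idea concrete, the following is a minimal sketch of classic binary randomized response with an uneven per-feature privacy budget. It is not the HeteroRR mechanism itself: the proportional split, the `weights` values, and all function names are assumptions made for illustration, and the abstract does not specify how importance and sensitivity are actually combined.

```python
import math
import random


def keep_probability(epsilon: float) -> float:
    """Probability of reporting the true bit under binary randomized response.

    Reporting the true bit with probability e^eps / (1 + e^eps) satisfies
    eps-local differential privacy for a single binary value.
    """
    return math.exp(epsilon) / (1.0 + math.exp(epsilon))


def split_budget(total_epsilon: float, weights: list[float]) -> list[float]:
    """Split a total budget across features in proportion to `weights`.

    The weights are hypothetical scores; a larger weight gives a feature a
    larger share of the budget, so it is perturbed less.
    """
    total_weight = sum(weights)
    return [total_epsilon * w / total_weight for w in weights]


def randomize_bits(bits: list[int], epsilons: list[float],
                   rng: random.Random) -> list[int]:
    """Flip each binary feature independently using its own budget."""
    noisy = []
    for bit, eps in zip(bits, epsilons):
        if rng.random() < keep_probability(eps):
            noisy.append(bit)        # report the true value
        else:
            noisy.append(1 - bit)    # report the flipped value
    return noisy


if __name__ == "__main__":
    rng = random.Random(0)
    features = [1, 0, 1, 1]
    weights = [4.0, 1.0, 1.0, 2.0]   # hypothetical per-feature scores
    epsilons = split_budget(2.0, weights)
    print(epsilons)
    print(randomize_bits(features, epsilons, rng))
```

Under sequential composition, the per-feature budgets sum to the total budget, so the uneven split changes where the noise lands rather than the overall guarantee. The same per-item flipping could in principle be applied to entries of an adjacency vector, although the edge-level mechanism in the paper may differ.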
