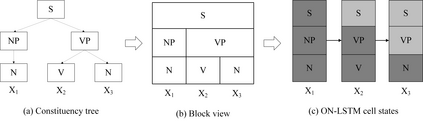

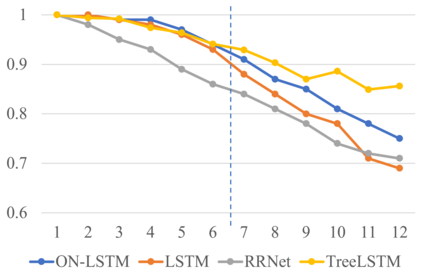

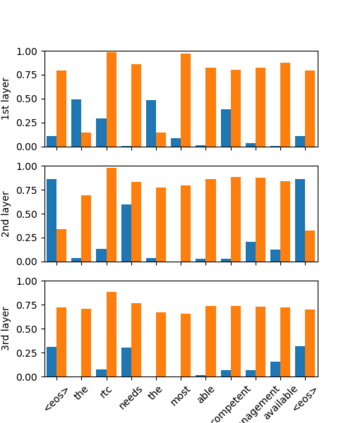

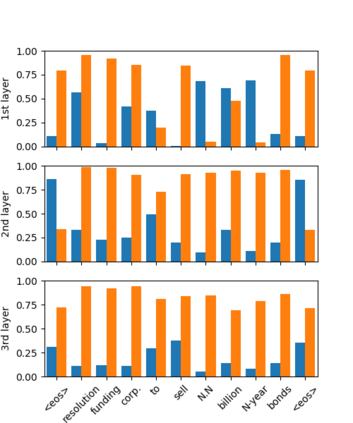

Recurrent neural network (RNN) models are widely used for processing sequential data governed by a latent tree structure. Previous work shows that RNN models, especially those based on Long Short-Term Memory (LSTM), can learn to exploit the underlying tree structure. However, their performance consistently lags behind that of tree-based models. This work proposes a new inductive bias, Ordered Neurons, which enforces an order of updating frequencies between hidden state neurons. We show that ordered neurons can explicitly integrate the latent tree structure into recurrent models. To this end, we propose a new RNN unit, ON-LSTM, which achieves good performance on four different tasks: language modeling, unsupervised parsing, targeted syntactic evaluation, and logical inference.
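To make "an order of updating frequencies" concrete, here is a minimal pure-Python sketch of one way such an ordering can be imposed on a cell state. It follows the master-gate idea from the ON-LSTM paper as we understand it, using a cumulative softmax (`cumax`). The function names, the argument layout, and the choice to pass gate logits in directly are assumptions made for illustration; in a real cell the logits would be computed from the current input and the previous hidden state. This is not the authors' implementation.

```python
import math

def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cumax(xs):
    # Cumulative sum of a softmax: a non-decreasing sequence ending at ~1.
    out, running = [], 0.0
    for p in softmax(xs):
        running += p
        out.append(running)
    return out

def ordered_cell_update(c_prev, c_cand, f, i, f_logits, i_logits):
    # Hypothetical helper: f and i are ordinary LSTM gate values in [0, 1];
    # f_logits and i_logits are assumed inputs for the master gates.
    master_f = cumax(f_logits)                     # rises from ~0 to ~1
    master_i = [1.0 - v for v in cumax(i_logits)]  # falls from ~1 to ~0
    c_new = []
    for k in range(len(c_prev)):
        overlap = master_f[k] * master_i[k]
        # Inside the overlap the ordinary gates decide; outside it the
        # master gates force "keep old" (high indices) or "write new" (low).
        f_hat = f[k] * overlap + (master_f[k] - overlap)
        i_hat = i[k] * overlap + (master_i[k] - overlap)
        c_new.append(f_hat * c_prev[k] + i_hat * c_cand[k])
    return c_new, master_f, master_i

# Usage: four neurons, with arbitrary illustrative gate values and logits.
c_new, master_f, master_i = ordered_cell_update(
    c_prev=[1.0] * 4, c_cand=[0.0] * 4,
    f=[0.5] * 4, i=[0.5] * 4,
    f_logits=[0.0, 0.0, 5.0, 0.0],
    i_logits=[0.0, 5.0, 0.0, 0.0],
)
```

Because the master forget gate is monotonically non-decreasing across neuron indices, higher-indexed neurons are erased less often than lower-indexed ones. That ordering by update frequency is what lets the cell state mimic levels of a tree.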
