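[Paper Recommendations] Eight Recent Knowledge Graph Papers: Neural Information Retrieval, Interpretable Reasoning Networks, Zero-Shot Learning, Context, Attentive RNN

[Overview] Today the Zhuanzhi content team presents eight recent papers on knowledge graphs. We hope you find them useful!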

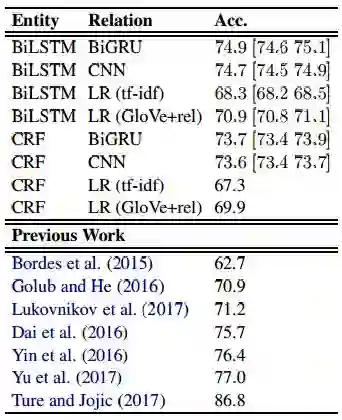

1. Strong Baselines for Simple Question Answering over Knowledge Graphs with and without Neural Networks

Authors: Salman Mohammed, Peng Shi, Jimmy Lin

Published in NAACL HLT 2018

Affiliation: University of Waterloo

Abstract: We examine the problem of question answering over knowledge graphs, focusing on simple questions that can be answered by the lookup of a single fact. Adopting a straightforward decomposition of the problem into entity detection, entity linking, relation prediction, and evidence combination, we explore simple yet strong baselines. On the popular SimpleQuestions dataset, we find that basic LSTMs and GRUs plus a few heuristics yield accuracies that approach the state of the art, and techniques that do not use neural networks also perform reasonably well. These results show that gains from sophisticated deep learning techniques proposed in the literature are quite modest and that some previous models exhibit unnecessary complexity.

Source: arXiv, June 6, 2018

URL:

http://www.zhuanzhi.ai/document/f53b87b183392331888d792b1fd64fb7
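
To make the four-stage decomposition in the abstract above concrete, here is a minimal sketch without any neural network. It is not the authors' system: the toy facts, the entity ids, the keyword cues, and every function name are assumptions made up for illustration, and keyword overlap merely stands in for a trained relation predictor.

```python
# Toy knowledge graph: (entity id, relation) -> object. All facts are illustrative only.
KG = {
    ("m.paris", "capital_of"): "France",
    ("m.paris", "population"): "2.1 million",
    ("m.paris_tx", "located_in"): "Texas",
}
# Hypothetical name index: surface form -> candidate entity ids with a prior score.
NAMES = {"paris": [("m.paris", 0.9), ("m.paris_tx", 0.1)]}
# Hypothetical keyword cues per relation (a stand-in for a relation classifier).
RELATION_CUES = {
    "capital_of": {"capital"},
    "population": {"population", "people"},
    "located_in": {"where", "located"},
}


def detect_entity(tokens):
    """Entity detection: return the first token that matches a known name."""
    for tok in tokens:
        if tok in NAMES:
            return tok
    return None


def link_entity(mention):
    """Entity linking: map the mention to scored candidate entity ids."""
    return NAMES.get(mention, [])


def predict_relations(tokens):
    """Relation prediction: score each relation by keyword overlap."""
    words = set(tokens)
    return {rel: len(words & cues) for rel, cues in RELATION_CUES.items()}


def answer(question):
    """Evidence combination: pick the best (entity, relation) pair that exists in the graph."""
    tokens = question.lower().rstrip("?").split()
    mention = detect_entity(tokens)
    if mention is None:
        return None
    rel_scores = predict_relations(tokens)
    best, best_score = None, 0.0
    for entity, e_score in link_entity(mention):
        for rel, r_score in rel_scores.items():
            combined = e_score * r_score
            if (entity, rel) in KG and combined > best_score:
                best, best_score = KG[(entity, rel)], combined
    return best


print(answer("What is the population of Paris?"))
```

The point of the sketch is the structure: each stage can be swapped for a stronger model independently, which is what makes the decomposition a convenient baseline.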

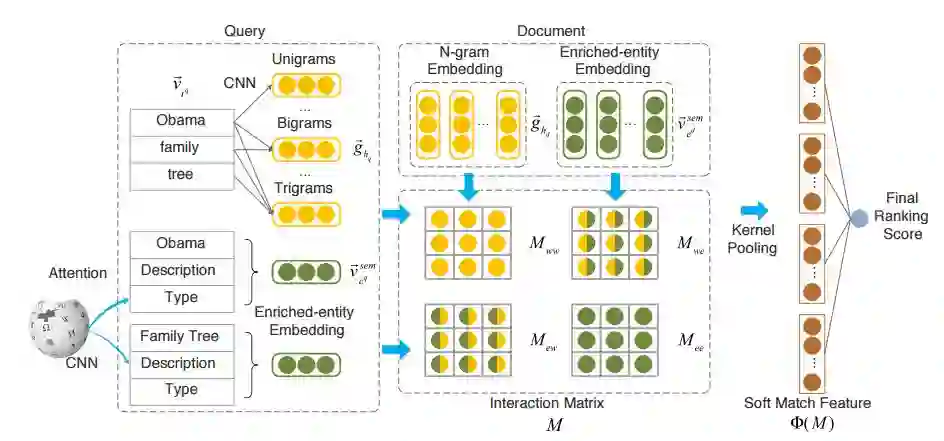

2. Entity-Duet Neural Ranking: Understanding the Role of Knowledge Graph Semantics in Neural Information Retrieval

Authors: Zhenghao Liu, Chenyan Xiong, Maosong Sun, Zhiyuan Liu

ACL 2018

Affiliations: Tsinghua University, Carnegie Mellon University

Abstract: This paper presents the Entity-Duet Neural Ranking Model (EDRM), which introduces knowledge graphs to neural search systems. EDRM represents queries and documents by their words and entity annotations. The semantics from knowledge graphs are integrated in the distributed representations of their entities, while the ranking is conducted by interaction-based neural ranking networks. The two components are learned end-to-end, making EDRM a natural combination of entity-oriented search and neural information retrieval. Our experiments on a commercial search log demonstrate the effectiveness of EDRM. Our analyses reveal that knowledge graph semantics significantly improve the generalization ability of neural ranking models.

Source: arXiv, June 3, 2018

URL:

http://www.zhuanzhi.ai/document/32c676eb268a5e8be4cd22c2c2a418a4
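
As a rough illustration of representing queries and documents by words plus entity annotations and scoring them through their interactions, the sketch below builds a cosine interaction matrix and pools it. It is a simplification under stated assumptions, not EDRM itself: the 2-d embeddings are invented, words and entities share one space, and max-pooling with averaging stands in for the learned neural ranking layers. The names `EMB`, `interaction_matrix`, and `score` are hypothetical.

```python
import math

# Hypothetical 2-d embeddings; "ENT:" entries play the role of entity annotations.
EMB = {
    "apple": [0.7, 0.7],
    "stock": [0.0, 1.0],
    "price": [0.1, 0.9],
    "fruit": [1.0, 0.0],
    "pie": [0.9, 0.1],
    "ENT:Apple_Inc": [0.0, 1.0],
    "ENT:Apple_(fruit)": [1.0, 0.0],
}


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def interaction_matrix(query_terms, doc_terms):
    """One row per query term, one column per document term."""
    return [[cosine(EMB[q], EMB[d]) for d in doc_terms] for q in query_terms]


def score(query_terms, doc_terms):
    """Assumed pooling: best document match per query term, averaged over the query."""
    matrix = interaction_matrix(query_terms, doc_terms)
    return sum(max(row) for row in matrix) / len(matrix)


query = ["apple", "stock", "ENT:Apple_Inc"]
doc_finance = ["stock", "price", "ENT:Apple_Inc"]
doc_food = ["apple", "pie", "fruit", "ENT:Apple_(fruit)"]
print(score(query, doc_finance) > score(query, doc_food))
```

In this toy setup the entity annotation widens the gap between the finance document and the food document compared with scoring on words alone, which is the kind of disambiguation signal the abstract attributes to knowledge graph semantics.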

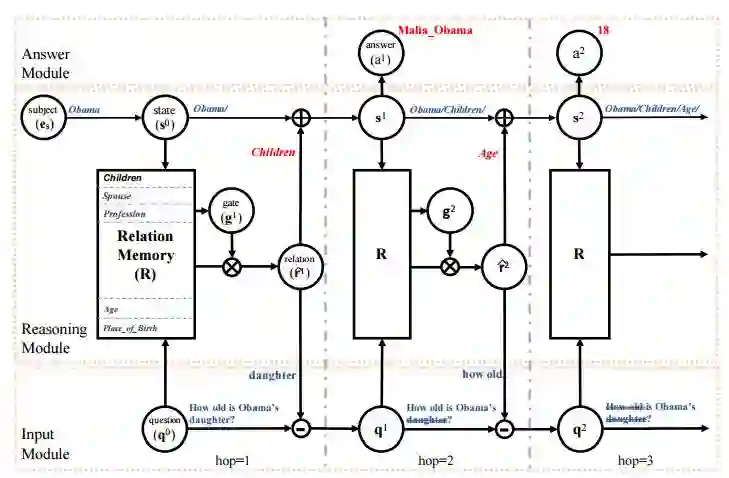

3. An Interpretable Reasoning Network for Multi-Relation Question Answering

Authors: Mantong Zhou, Minlie Huang, Xiaoyan Zhu

COLING 2018

Affiliation: Tsinghua University

Abstract: Multi-relation Question Answering is a challenging task, due to the requirement of elaborated analysis on questions and reasoning over multiple fact triples in knowledge base. In this paper, we present a novel model called Interpretable Reasoning Network that employs an interpretable, hop-by-hop reasoning process for question answering. The model dynamically decides which part of an input question should be analyzed at each hop; predicts a relation that corresponds to the current parsed results; utilizes the predicted relation to update the question representation and the state of the reasoning process; and then drives the next-hop reasoning. Experiments show that our model yields state-of-the-art results on two datasets. More interestingly, the model can offer traceable and observable intermediate predictions for reasoning analysis and failure diagnosis, thereby allowing manual manipulation in predicting the final answer.

Source: arXiv, June 1, 2018

URL:

http://www.zhuanzhi.ai/document/807ee8d54682d9356a8eb8b408a1a7d6
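
The hop-by-hop loop described in the abstract above (predict a relation, update the question representation and the reasoning state, repeat) can be sketched in a few lines. This is a toy under stated assumptions rather than the paper's model: the facts, the one-hot relation embeddings, the hand-written question vector, the `STOP` relation, and the choice to update the question by subtracting the predicted relation's embedding are all made up for illustration.

```python
# Toy facts: (entity, relation) -> entity. Illustrative only.
KG = {
    ("Alice", "mother"): "Carol",
    ("Carol", "birthplace"): "Lyon",
    ("Alice", "birthplace"): "Oslo",
}
# Hypothetical relation embeddings; "STOP" ends the reasoning chain.
REL_EMB = {
    "mother": [1.0, 0.0, 0.0],
    "birthplace": [0.0, 1.0, 0.0],
    "STOP": [0.0, 0.0, 1.0],
}


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def reason(question_vec, start_entity, max_hops=3):
    """Follow one predicted relation per hop and record an inspectable trace."""
    q = list(question_vec)
    state = start_entity
    trace = []
    for _ in range(max_hops):
        # Predict the relation that best matches the not-yet-analyzed part of the question.
        rel = max(REL_EMB, key=lambda r: dot(q, REL_EMB[r]))
        if rel == "STOP" or (state, rel) not in KG:
            break
        # Update the reasoning state by moving along the predicted relation.
        state = KG[(state, rel)]
        trace.append((rel, state))
        # Update the question representation by removing the analyzed relation.
        q = [a - b for a, b in zip(q, REL_EMB[rel])]
    return state, trace


# Hand-written encoding of "Where was Alice's mother born?" (an assumption).
final_state, hops = reason([1.0, 0.8, 0.5], "Alice")
print(final_state, hops)
```

The returned trace is what makes the process inspectable: each hop exposes the predicted relation and the intermediate entity, so a wrong answer can be traced to the hop where it went astray.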

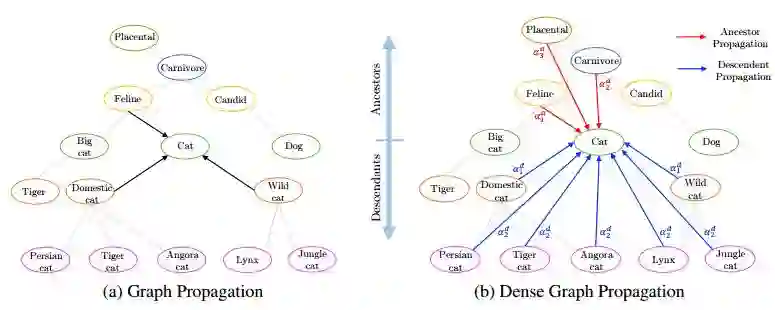

4. Rethinking Knowledge Graph Propagation for Zero-Shot Learning

Authors: Michael Kampffmeyer, Yinbo Chen, Xiaodan Liang, Hao Wang, Yujia Zhang, Eric P. Xing

Affiliations: UiT The Arctic University of Norway, Tsinghua University, Carnegie Mellon University

Abstract: The potential of graph convolutional neural networks for the task of zero-shot learning has been demonstrated recently. These models are highly sample efficient as related concepts in the graph structure share statistical strength allowing generalization to new classes when faced with a lack of data. However, knowledge from distant nodes can get diluted when propagating through intermediate nodes, because current approaches to zero-shot learning use graph propagation schemes that perform Laplacian smoothing at each layer. We show that extensive smoothing does not help the task of regressing classifier weights in zero-shot learning. In order to still incorporate information from distant nodes and utilize the graph structure, we propose an Attentive Dense Graph Propagation Module (ADGPM). ADGPM allows us to exploit the hierarchical graph structure of the knowledge graph through additional connections. These connections are added based on a node's relationship to its ancestors and descendants and an attention scheme is further used to weigh their contribution depending on the distance to the node. Finally, we illustrate that finetuning of the feature representation after training the ADGPM leads to considerable improvements. Our method achieves competitive results, outperforming previous zero-shot learning approaches.

Source: arXiv, June 1, 2018

URL:

http://www.zhuanzhi.ai/document/011b254f6780093cdf7d02560b9564da
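
The contrast the abstract draws between layer-by-layer smoothing and direct, distance-weighted connections can be seen on a three-node hierarchy. The sketch below is only an illustration under stated assumptions: the hierarchy, the scalar features, and the distance logits are invented, plain neighbor averaging stands in for one smoothing layer, and only ancestors are connected (the abstract also mentions descendants). It is not the ADGPM implementation.

```python
import math

# Hypothetical hierarchy: child -> parent (None marks the root).
PARENT = {"husky": "dog", "dog": "animal", "animal": None}


def ancestor_chain(node):
    """Return [node, parent, grandparent, ...]; the list index is the distance."""
    chain = []
    while node is not None:
        chain.append(node)
        node = PARENT[node]
    return chain


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def one_hop_smooth(features, node):
    """Average a node with its direct neighbors (a stand-in for one smoothing layer)."""
    neighbors = [node]
    if PARENT[node] is not None:
        neighbors.append(PARENT[node])
    neighbors += [child for child, parent in PARENT.items() if parent == node]
    return sum(features[n] for n in neighbors) / len(neighbors)


def dense_propagate(features, node, distance_logits):
    """Combine the node and all of its ancestors in one step, weighted by distance."""
    chain = ancestor_chain(node)
    weights = softmax(distance_logits[: len(chain)])
    return sum(w * features[n] for w, n in zip(weights, chain))


features = {"husky": 3.0, "dog": 0.0, "animal": 6.0}
print(one_hop_smooth(features, "husky"))
print(dense_propagate(features, "husky", [0.0, 0.0, 0.0]))
```

With one smoothing layer, nothing from `animal` reaches `husky`; stacking more layers would pass it through `dog` and dilute it. The dense step reaches the distant ancestor directly, and the per-distance logits (learned in a real model, fixed here) control how much each distance contributes.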

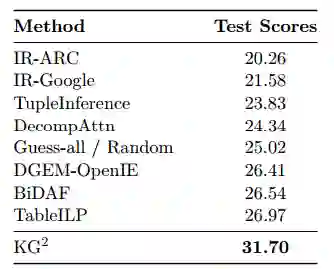

5. KG^2: Learning to Reason Science Exam Questions with Contextual Knowledge Graph Embeddings

Authors: Yuyu Zhang, Hanjun Dai, Kamil Toraman, Le Song

Affiliation: College of Computing, Georgia Institute of Technology

Abstract: The AI2 Reasoning Challenge (ARC), a new benchmark dataset for question answering (QA) has been recently released. ARC only contains natural science questions authored for human exams, which are hard to answer and require advanced logic reasoning. On the ARC Challenge Set, existing state-of-the-art QA systems fail to significantly outperform random baseline, reflecting the difficult nature of this task. In this paper, we propose a novel framework for answering science exam questions, which mimics human solving process in an open-book exam. To address the reasoning challenge, we construct contextual knowledge graphs respectively for the question itself and supporting sentences. Our model learns to reason with neural embeddings of both knowledge graphs. Experiments on the ARC Challenge Set show that our model outperforms the previous state-of-the-art QA systems.

Source: arXiv, May 31, 2018

URL:

http://www.zhuanzhi.ai/document/d262356f874b05f67652725423e943a8

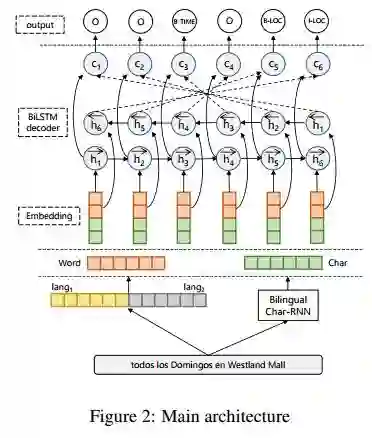

6. Bilingual Character Representation for Efficiently Addressing Out-of-Vocabulary Words in Code-Switching Named Entity Recognition

Authors: Genta Indra Winata, Chien-Sheng Wu, Andrea Madotto, Pascale Fung

Accepted at the 3rd Workshop on Computational Approaches to Linguistic Code-Switching, ACL 2018

Affiliation: Hong Kong University of Science and Technology

Abstract: We propose an LSTM-based model with hierarchical architecture on named entity recognition from code-switching Twitter data. Our model uses bilingual character representation and transfer learning to address out-of-vocabulary words. In order to mitigate data noise, we propose to use token replacement and normalization. In the 3rd Workshop on Computational Approaches to Linguistic Code-Switching Shared Task, we achieved second place with 62.76% harmonic mean F1-score for English-Spanish language pair without using any gazetteer and knowledge-based information.

Source: arXiv, May 31, 2018

URL:

http://www.zhuanzhi.ai/document/4b68d396c0ca0a4b1246e7196735050a
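
The abstract above mentions token replacement and normalization for noisy Twitter data but does not list the rules. The sketch below shows what such a preprocessing step might look like, using common tweet-cleaning rules chosen purely for illustration: the placeholder tokens `<url>` and `<usr>`, the elongation rule, and the function names are assumptions, not the authors' procedure.

```python
import re

URL_RE = re.compile(r"https?://\S+")   # whole-token URLs
USER_RE = re.compile(r"@\w+")          # whole-token user mentions
REPEAT_RE = re.compile(r"(.)\1{2,}")   # any character repeated three or more times


def normalize_token(token):
    """Replace noisy tokens with placeholders and shorten elongated words."""
    if URL_RE.fullmatch(token):
        return "<url>"
    if USER_RE.fullmatch(token):
        return "<usr>"
    # Collapse runs of 3+ identical characters to two; case is kept because it can matter for NER.
    return REPEAT_RE.sub(r"\1\1", token)


def normalize_tweet(text):
    """Apply token-level normalization to a whitespace-tokenized tweet."""
    return " ".join(normalize_token(tok) for tok in text.split())


print(normalize_tweet("@maria goooool http://t.co/x"))
```

Mapping open-ended tokens such as URLs and handles to a single placeholder shrinks the vocabulary, which complements the character-level representation the paper uses for out-of-vocabulary words.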

7. Question Answering over Freebase via Attentive RNN with Similarity Matrix based CNN

Authors: Yingqi Qu, Jie Liu, Liangyi Kang, Qinfeng Shi, Dan Ye

Abstract: With the rapid growth of knowledge bases (KBs), question answering over knowledge base, a.k.a. KBQA has drawn huge attention in recent years. Most of the existing KBQA methods follow so called encoder-compare framework. They map the question and the KB facts to a common embedding space, in which the similarity between the question vector and the fact vectors can be conveniently computed. This, however, inevitably loses original words interaction information. To preserve more original information, we propose an attentive recurrent neural network with similarity matrix based convolutional neural network (AR-SMCNN) model, which is able to capture comprehensive hierarchical information utilizing the advantages of both RNN and CNN. We use RNN to capture semantic-level correlation by its sequential modeling nature, and use an attention mechanism to keep track of the entities and relations simultaneously. Meanwhile, we use a similarity matrix based CNN with two-directions pooling to extract literal-level words interaction matching utilizing CNNs strength of modeling spatial correlation among data. Moreover, we have developed a new heuristic extension method for entity detection, which significantly decreases the effect of noise. Our method has outperformed the state-of-the-arts on SimpleQuestion benchmark in both accuracy and efficiency.

Source: arXiv, May 27, 2018

URL:

http://www.zhuanzhi.ai/document/5f6b1dc07f1683f8aa67485fd51229ce
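
The word-level similarity matrix with two-direction pooling mentioned in the abstract above can be illustrated without any learned layers. The sketch below is a simplification under stated assumptions: the 2-d embeddings are invented, the convolution the paper applies over the matrix is skipped entirely, and the names `EMB`, `similarity_matrix`, and `two_direction_max_pool` are hypothetical.

```python
import math

# Hypothetical 2-d word embeddings for question words and relation words.
EMB = {
    "where": [1.0, 0.0],
    "born": [0.0, 1.0],
    "place": [1.0, 0.0],
    "of": [1.0, 1.0],
    "birth": [0.0, 1.0],
}


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def similarity_matrix(question_words, relation_words):
    """Rows index question words, columns index relation words."""
    return [[cosine(EMB[q], EMB[r]) for r in relation_words] for q in question_words]


def two_direction_max_pool(matrix):
    """Max-pool along both axes of the matrix."""
    row_max = [max(row) for row in matrix]        # best relation word for each question word
    col_max = [max(col) for col in zip(*matrix)]  # best question word for each relation word
    return row_max, col_max


matrix = similarity_matrix(["where", "born"], ["place", "of", "birth"])
rows, cols = two_direction_max_pool(matrix)
print(rows, cols)
```

Pooling in both directions keeps two views of the match: how well every question word is covered by the relation, and how well every relation word is supported by the question. These are word-level signals that a single pooled sentence vector would discard.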

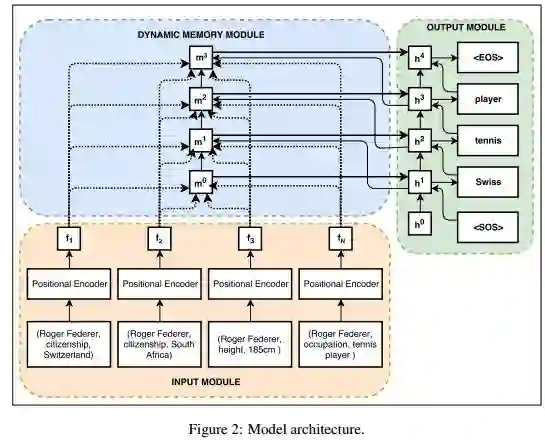

8. Generating Fine-Grained Open Vocabulary Entity Type Descriptions

Authors: Rajarshi Bhowmik, Gerard de Melo

Published in ACL 2018

Affiliation: Rutgers University

Abstract: While large-scale knowledge graphs provide vast amounts of structured facts about entities, a short textual description can often be useful to succinctly characterize an entity and its type. Unfortunately, many knowledge graph entities lack such textual descriptions. In this paper, we introduce a dynamic memory-based network that generates a short open vocabulary description of an entity by jointly leveraging induced fact embeddings as well as the dynamic context of the generated sequence of words. We demonstrate the ability of our architecture to discern relevant information for more accurate generation of type description by pitting the system against several strong baselines.

Source: arXiv, May 27, 2018

URL:

http://www.zhuanzhi.ai/document/3ca888c6090bf55ca2b5d1beb0f9f5f2
