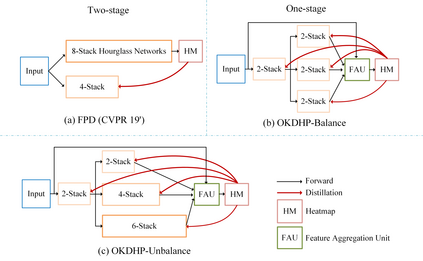

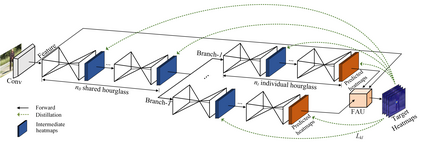

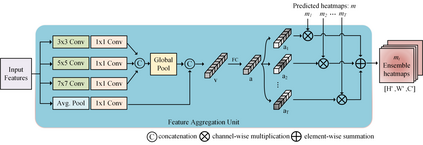

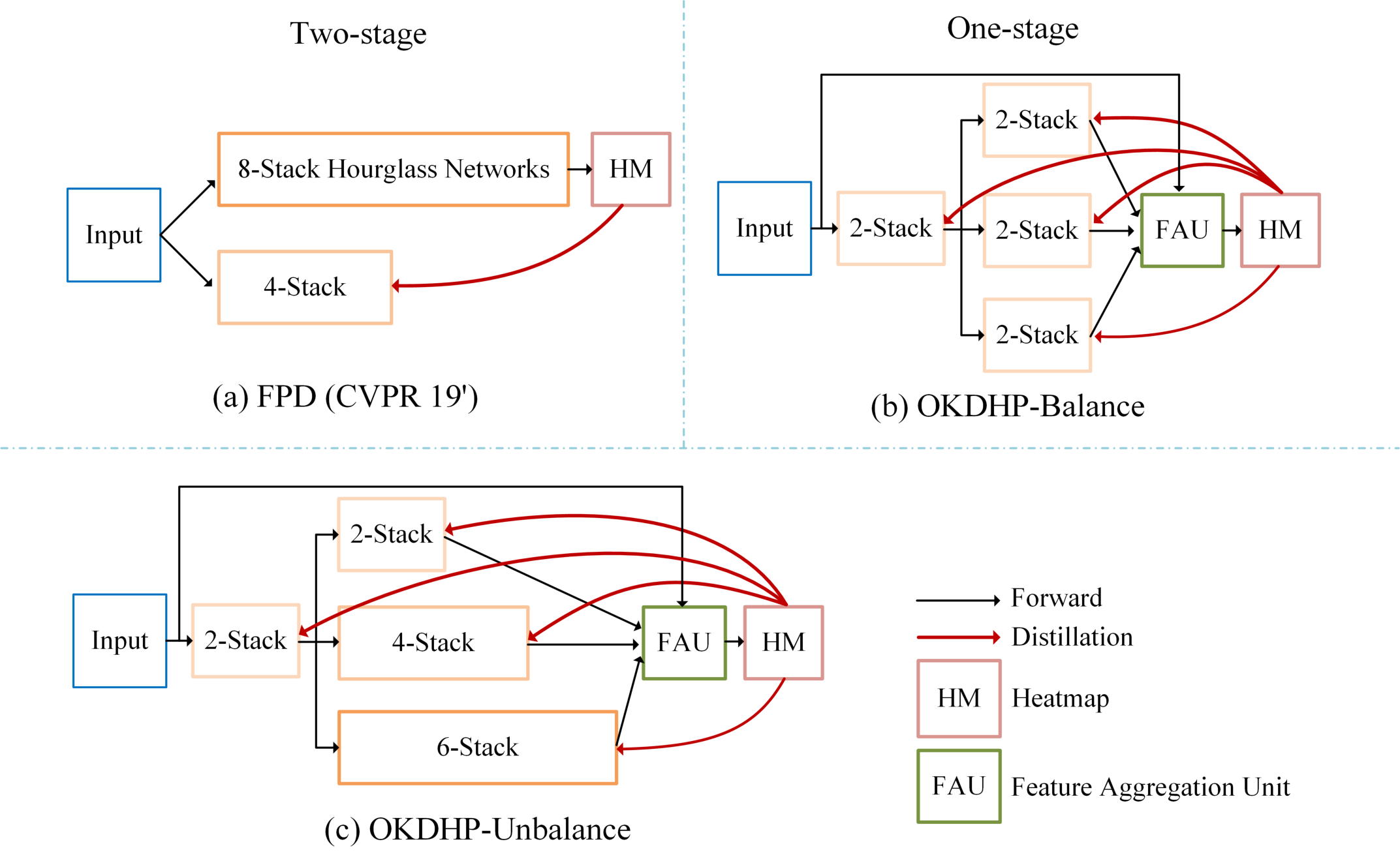

Existing state-of-the-art human pose estimation methods require heavy computational resources for accurate predictions. One promising technique for obtaining an accurate yet lightweight pose estimator is knowledge distillation, which transfers pose knowledge from a powerful teacher model to a student model with fewer parameters. However, existing pose distillation works rely on a heavy pre-trained estimator to perform knowledge transfer and require a complex two-stage learning procedure. In this work, we investigate a novel Online Knowledge Distillation framework, termed OKDHP, which distills Human Pose structure knowledge in a one-stage manner to guarantee distillation efficiency. Specifically, OKDHP trains a single multi-branch network and acquires the predicted heatmaps from each branch; a Feature Aggregation Unit (FAU) then assembles these heatmaps into target heatmaps that, in turn, teach each branch. Instead of simply averaging the heatmaps, the FAU, which consists of multiple parallel transformations with different receptive fields, leverages multi-scale information and thus obtains higher-quality target heatmaps. The pixel-wise Kullback-Leibler (KL) divergence is then minimized between the target heatmaps and the predicted ones, which enables the student network to learn the implicit keypoint relationships. In addition, an unbalanced OKDHP scheme is introduced to customize student networks with different compression rates. The effectiveness of our approach is demonstrated by extensive experiments on two common benchmark datasets, MPII and COCO.
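
To make the two training signals more concrete (fusing branch heatmaps into a target, then pulling each branch toward it with a pixel-wise KL term), here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the authors' implementation: the learned FAU is replaced by hypothetical fixed mean filters of sizes 1, 3 and 5 plus a hand-crafted peak-response score that yields per-branch weights; heatmaps are assumed to be normalized with a spatial softmax (with a hypothetical `temperature` parameter) before the KL divergence; the ground-truth heatmap loss and gradient flow are omitted. All function names (`box_filter`, `aggregate_branches`, `pixelwise_kl`, `online_distillation_loss`) are hypothetical.

```python
import numpy as np


def spatial_softmax(h, temperature=1.0):
    """Normalize each keypoint map in h (..., H, W) into a distribution over its pixels."""
    flat = h.reshape(*h.shape[:-2], -1) / temperature
    flat = flat - flat.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(flat)
    return (e / e.sum(axis=-1, keepdims=True)).reshape(h.shape)


def pixelwise_kl(target, pred, temperature=1.0, eps=1e-12):
    """KL(target || pred) per keypoint, summed over pixels and averaged over keypoints."""
    p = spatial_softmax(target, temperature)
    q = spatial_softmax(pred, temperature)
    kl = p * (np.log(p + eps) - np.log(q + eps))
    return float(kl.reshape(kl.shape[0], -1).sum(axis=1).mean())


def box_filter(x, k):
    """Mean filter with an odd k x k window over the last two axes (zero padding)."""
    r = k // 2
    padded = np.pad(x, [(0, 0)] * (x.ndim - 2) + [(r, r), (r, r)])
    out = np.zeros_like(x, dtype=float)
    height, width = x.shape[-2:]
    for dy in range(k):
        for dx in range(k):
            out += padded[..., dy:dy + height, dx:dx + width]
    return out / (k * k)


def aggregate_branches(branch_heatmaps, kernel_sizes=(1, 3, 5)):
    """Fuse (B, K, H, W) branch heatmaps into one (K, H, W) target via per-branch weights.

    Hypothetical stand-in for the FAU: fixed mean filters replace its learned
    parallel transformations with different receptive fields.
    """
    multi = np.stack([box_filter(branch_heatmaps, k) for k in kernel_sizes])  # (S, B, K, H, W)
    # Score each branch by its peak response, averaged over scales and keypoints.
    scores = multi.max(axis=(-2, -1)).mean(axis=(0, 2))  # (B,)
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()  # softmax over branches
    target = np.tensordot(weights, branch_heatmaps, axes=1)  # weighted sum -> (K, H, W)
    return target, weights


def online_distillation_loss(branch_heatmaps, temperature=1.0):
    """Sum of KL(target || branch) over branches; the target is treated as a constant."""
    target, _ = aggregate_branches(branch_heatmaps)
    return sum(pixelwise_kl(target, h, temperature) for h in branch_heatmaps)


def gaussian_heatmap(h, w, cy, cx, sigma=2.0):
    """Synthetic keypoint heatmap with a Gaussian peak at (cy, cx)."""
    ys, xs = np.mgrid[0:h, 0:w]
    return np.exp(-((ys - cy) ** 2 + (xs - cx) ** 2) / (2 * sigma ** 2))


# Toy example: 3 branches, 2 keypoints, 16x16 heatmaps with slightly shifted peaks.
branches = np.stack([
    np.stack([gaussian_heatmap(16, 16, 5 + b, 6), gaussian_heatmap(16, 16, 10, 9 - b)])
    for b in range(3)
])
target, weights = aggregate_branches(branches)
loss = online_distillation_loss(branches)
print("branch weights:", weights.round(3))
print("online KL loss:", round(loss, 4))
```

Treating the fused target as a constant mirrors the idea that the ensemble teaches each branch rather than being pulled toward it; in an autodiff framework this would correspond to detaching the target before computing the KL term.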
