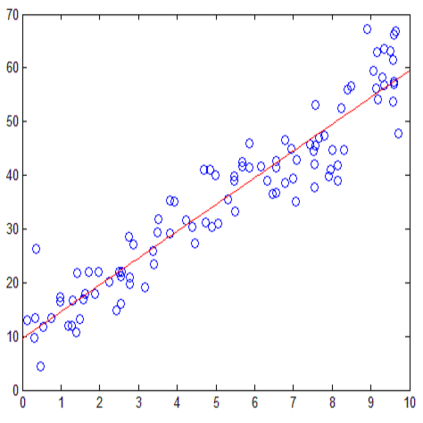

Neural sequence models, especially transformers, exhibit a remarkable capacity for in-context learning. They can construct new predictors from sequences of labeled examples $(x, f(x))$ presented in the input without further parameter updates. We investigate the hypothesis that transformer-based in-context learners implement standard learning algorithms implicitly, by encoding smaller models in their activations, and updating these implicit models as new examples appear in the context. Using linear regression as a prototypical problem, we offer three sources of evidence for this hypothesis. First, we prove by construction that transformers can implement learning algorithms for linear models based on gradient descent and closed-form ridge regression. Second, we show that trained in-context learners closely match the predictors computed by gradient descent, ridge regression, and exact least-squares regression, transitioning between different predictors as transformer depth and dataset noise vary, and converging to Bayesian estimators for large widths and depths. Third, we present preliminary evidence that in-context learners share algorithmic features with these predictors: learners' late layers non-linearly encode weight vectors and moment matrices. These results suggest that in-context learning is understandable in algorithmic terms, and that (at least in the linear case) learners may rediscover standard estimation algorithms. Code and reference implementations are released at https://github.com/ekinakyurek/google-research/blob/master/incontext.
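To make the reference predictors concrete, here is a minimal NumPy sketch of what gradient descent, closed-form ridge regression, exact least squares, and a Bayesian posterior mean compute from an in-context dataset $(x_i, y_i)$ and a query $x$. It is an illustration, not the released implementation. The function names, the $1/n$-scaled squared loss, the zero initialization for gradient descent, the hyperparameters `lr`, `steps`, `lam`, `noise_var`, and `prior_var`, and the synthetic demo data are all assumptions. The ridge solver works directly from the moment matrices $X^\top X$ and $X^\top y$. Under an assumed Gaussian prior $w \sim \mathcal{N}(0, \tau^2 I)$ with Gaussian label noise of variance $\sigma^2$, the posterior mean coincides with ridge regression at $\lambda = \sigma^2/\tau^2$. This is one standard sense in which a Bayesian estimator and ridge regression can agree.

```python
import numpy as np


def gd_predict(X, y, x_query, lr=0.1, steps=1):
    """Predict with a linear model trained by `steps` full-batch gradient
    descent steps on (1/2n) * ||X w - y||^2, starting from w = 0 (assumed)."""
    n, d = X.shape
    w = np.zeros(d)
    for _ in range(steps):
        grad = X.T @ (X @ w - y) / n  # gradient of the scaled squared loss
        w = w - lr * grad
    return x_query @ w


def ridge_predict(X, y, x_query, lam=0.1):
    """Closed-form ridge regression: w = (X^T X + lam * I)^{-1} X^T y."""
    d = X.shape[1]
    xtx = X.T @ X  # second-moment matrix of the in-context inputs
    xty = X.T @ y  # input-label cross-moment vector
    w = np.linalg.solve(xtx + lam * np.eye(d), xty)
    return x_query @ w


def ols_predict(X, y, x_query):
    """Exact least squares (minimum-norm solution via the pseudoinverse)."""
    w = np.linalg.pinv(X) @ y
    return x_query @ w


def bayes_predict(X, y, x_query, noise_var=0.25, prior_var=1.0):
    """Posterior-mean prediction, assuming w ~ N(0, prior_var * I) and
    y = X w + eps with eps ~ N(0, noise_var * I)."""
    d = X.shape[1]
    precision = X.T @ X / noise_var + np.eye(d) / prior_var
    mean = np.linalg.solve(precision, X.T @ y / noise_var)
    return x_query @ mean


# Demo on synthetic, noiseless data with more examples than dimensions.
rng = np.random.default_rng(0)
n, d = 16, 4
w_true = rng.normal(size=d)
X = rng.normal(size=(n, d))  # in-context inputs x_1..x_n
y = X @ w_true               # labels f(x_i) = w_true . x_i
x_q = rng.normal(size=d)     # query input

print("one GD step:     ", gd_predict(X, y, x_q, lr=0.1, steps=1))
print("ridge (lam=0.1): ", ridge_predict(X, y, x_q, lam=0.1))
print("least squares:   ", ols_predict(X, y, x_q))
print("Bayes mean:      ", bayes_predict(X, y, x_q))
print("true f(x_q):     ", x_q @ w_true)
```

In this noiseless, overdetermined demo, least squares recovers $f(x_q)$ exactly, and gradient descent and ridge approach it as `steps` grows or `lam` shrinks. With few steps, a non-negligible $\lambda$, noisy labels, or scarce examples the predictions differ, which is the regime where comparing a trained in-context learner against each predictor is informative.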
