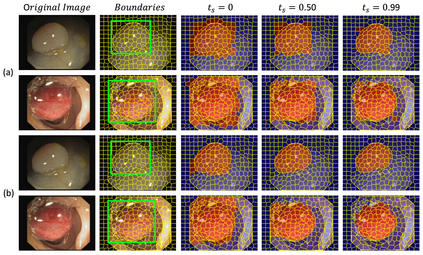

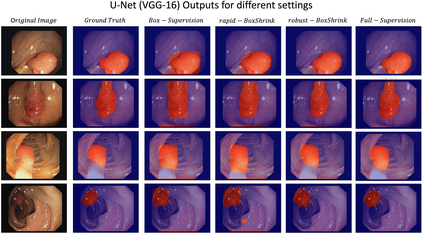

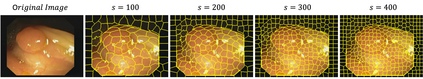

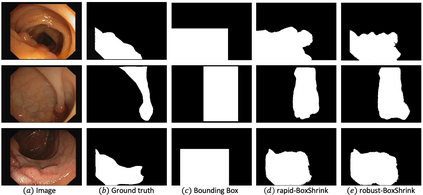

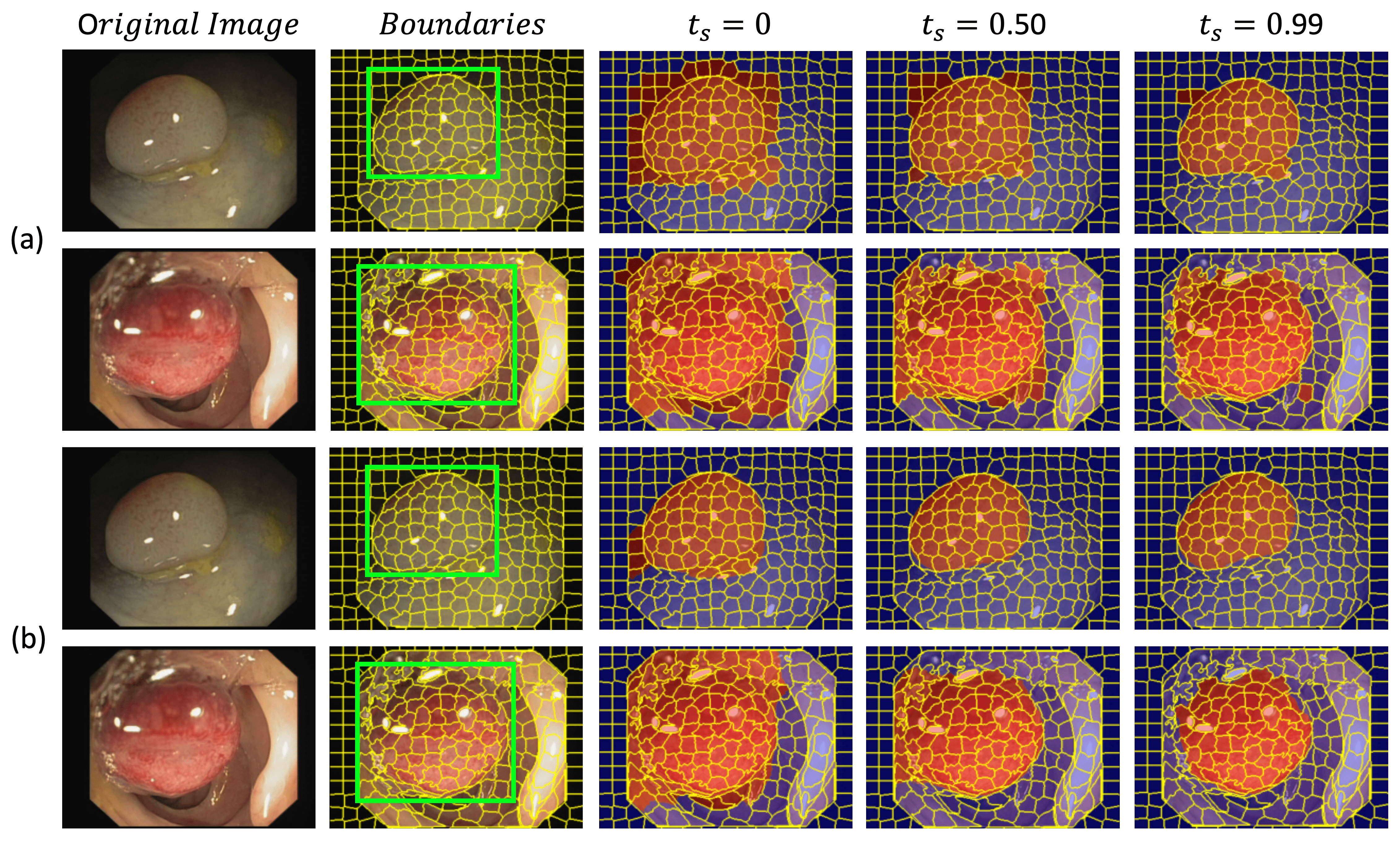

One of the core challenges facing the medical image computing community is fast and efficient labeling of data samples. Obtaining fine-grained labels for segmentation is particularly demanding, since it is expensive and time-consuming and requires sophisticated tools. Drawing bounding boxes, in contrast, is fast and takes significantly less time than fine-grained labeling, but it does not produce detailed results. In response, we propose BoxShrink, a novel framework for weakly-supervised tasks that rapidly and robustly transforms bounding boxes into segmentation masks without training any machine learning model. The framework comes in two variants: rapid-BoxShrink for fast label transformations and robust-BoxShrink for more precise label transformations. On a colonoscopy image dataset, several models trained with BoxShrink in a weakly-supervised setting achieve an average IoU improvement of four percent, compared to the same models trained using only bounding box annotations as inputs. The code for the proposed framework is open source and published online.
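The abstract does not spell out BoxShrink's internal steps, so the sketch below does not implement it. Instead it illustrates, under stated assumptions, the box-only baseline the abstract compares against and the IoU metric used to report results: a bounding box is filled into a binary mask and scored against a fine-grained mask with IoU = |A ∩ B| / |A ∪ B|. The helper names `tight_box`, `box_to_mask`, and `iou`, the `(x0, y0, x1, y1)` end-exclusive box convention, and the toy 4×4 mask are all hypothetical choices made for illustration.

```python
from typing import List, Tuple

Mask = List[List[int]]          # binary mask as rows of 0/1 pixels
Box = Tuple[int, int, int, int]  # hypothetical convention: (x0, y0, x1, y1), end-exclusive


def tight_box(mask: Mask) -> Box:
    """Smallest box enclosing all foreground pixels (simulates an annotator's box)."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for x in range(len(mask[0])) if any(row[x] for row in mask)]
    return min(xs), min(ys), max(xs) + 1, max(ys) + 1


def box_to_mask(height: int, width: int, box: Box) -> Mask:
    """Box-only weak label: every pixel inside the box is marked as foreground."""
    x0, y0, x1, y1 = box
    return [[1 if (x0 <= x < x1 and y0 <= y < y1) else 0 for x in range(width)]
            for y in range(height)]


def iou(pred: Mask, target: Mask) -> float:
    """Intersection over union of two binary masks of equal shape."""
    pairs = [(p, t) for pr, tr in zip(pred, target) for p, t in zip(pr, tr)]
    inter = sum(p & t for p, t in pairs)
    union = sum(p | t for p, t in pairs)
    # Assumed convention: two empty masks count as perfect agreement.
    return inter / union if union else 1.0


# Toy fine-grained mask: the object does not fill its bounding box.
gt: Mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
box_mask = box_to_mask(4, 4, tight_box(gt))
print(tight_box(gt), iou(box_mask, gt))  # (1, 1, 3, 3) 0.75
```

The filled box over-labels the one background pixel inside it, so its IoU against the fine-grained mask is 3/4. A box-to-mask transformation such as the one the abstract describes aims to shrink labels like this toward the true object boundary before they are used for training.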

翻译:医疗图像计算界面临的核心挑战之一是快速高效的数据样本标签。 获取精细的分割标签要求特别高, 因为它昂贵、 耗时且需要复杂的工具。 相反, 应用捆绑盒的速度很快, 花费的时间大大少于精细标签, 但并没有产生详细的结果 。 作为回应, 我们提议了一个新框架, 用于在不训练任何机器学习模型即硬盒子Shrink的情况下, 将捆绑盒快速和稳健地转换成分割面罩, 。 拟议的框架分为两种变体: 快速标签转换的BoxSrink, 以及更精确的标签转换的坚固的BoxSrink。 在使用BoxShrrink 进行微弱监督环境下的培训时, 在多个模型中发现IoU平均有4%的改进, 相比之下, 仅使用捆绑框说明作为结图像数据集的投入, 我们打开了拟议框架的代码, 并在网上公布 。