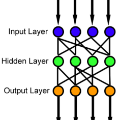

This work contributes to the development of a new data-driven method (D-DM) for learning feedforward neural networks (FNNs). The method was proposed recently as a way of improving randomized learning of FNNs by adjusting the network parameters to the fluctuations of the target function. The method employs logistic sigmoid activation functions for the hidden nodes. In this study, we introduce other activation functions: bipolar sigmoid, sine, saturating linear functions, ReLU, and softplus. We derive formulas for their parameters, i.e. weights and biases. In a simulation study, we evaluate the performance of data-driven FNN learning with the different activation functions. The results indicate that the sigmoid activation functions perform much better than the others in approximating complex, fluctuating target functions.
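For orientation, the activation functions named above can be sketched in a few lines of Python. This is a minimal illustration using the standard textbook definitions, not the formulas derived in the paper. The function names, the two saturating linear variants (clipping to [0, 1] and to [-1, 1]), and the single-input node form `h(a*x + b)` are assumptions made for the example.

```python
import math


def logistic(x):
    """Logistic (unipolar) sigmoid, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def bipolar_sigmoid(x):
    """Bipolar sigmoid with range (-1, 1); equal to 2*logistic(x) - 1."""
    return math.tanh(x / 2.0)


def sine(x):
    """Sine activation."""
    return math.sin(x)


def satlin(x):
    """Saturating linear function clipped to [0, 1] (assumed variant)."""
    return min(1.0, max(0.0, x))


def satlins(x):
    """Symmetric saturating linear function clipped to [-1, 1] (assumed variant)."""
    return min(1.0, max(-1.0, x))


def relu(x):
    """Rectified linear unit."""
    return max(0.0, x)


def softplus(x):
    """Softplus, log(1 + exp(x)), in a numerically stable form."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def hidden_node(x, a, b, activation):
    """Output of one hidden node with weight a and bias b (hypothetical helper)."""
    return activation(a * x + b)


if __name__ == "__main__":
    for name, h in [("logistic", logistic), ("bipolar", bipolar_sigmoid),
                    ("sine", sine), ("satlin", satlin), ("satlins", satlins),
                    ("relu", relu), ("softplus", softplus)]:
        print(name, hidden_node(0.5, a=4.0, b=-1.0, activation=h))
```

In this sketch the weight `a` sets the slope of the node and the bias `b` shifts it along the input axis. These are the two parameters that a data-driven method would have to choose for each hidden node and each activation function.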
