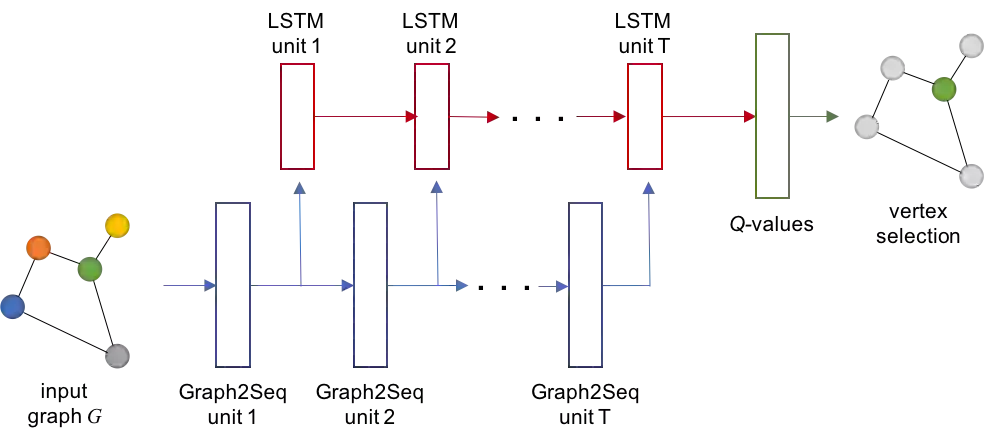

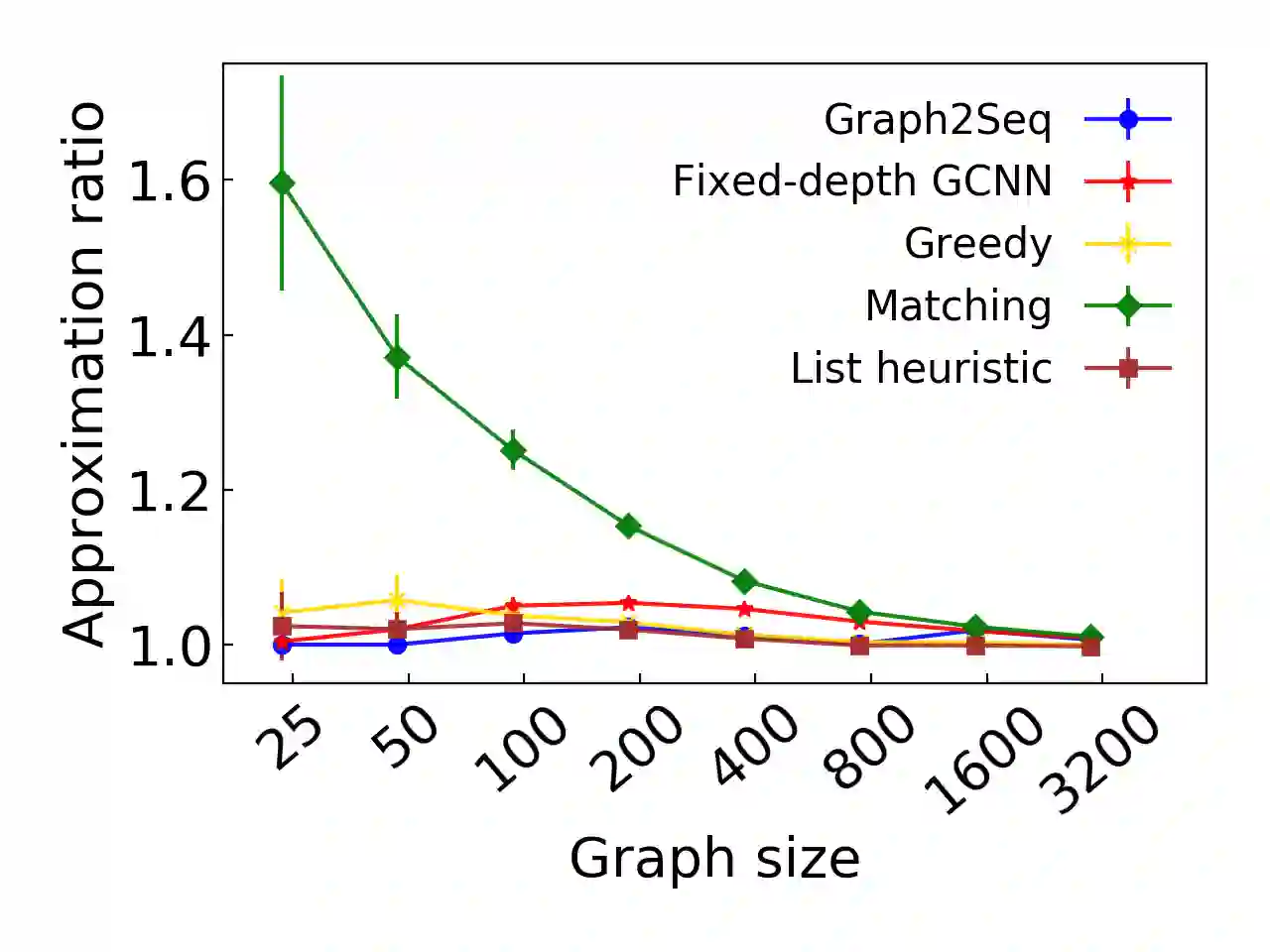

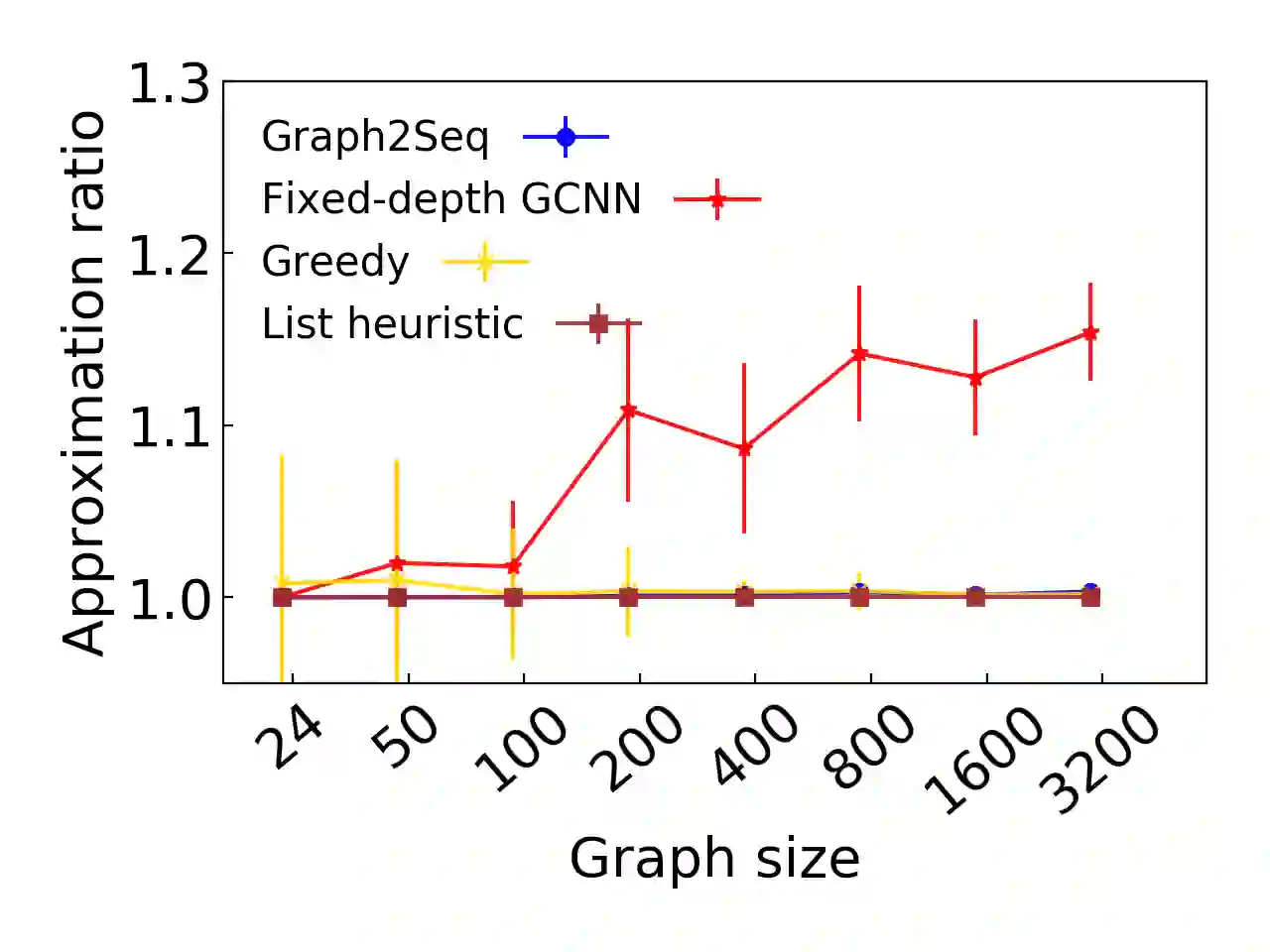

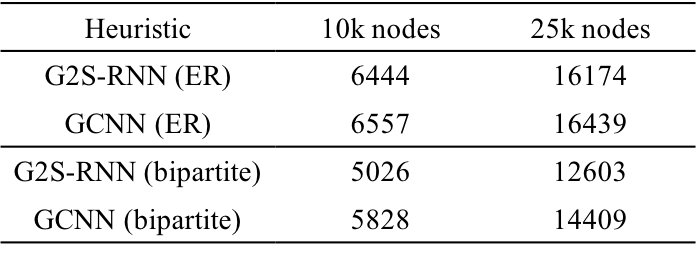

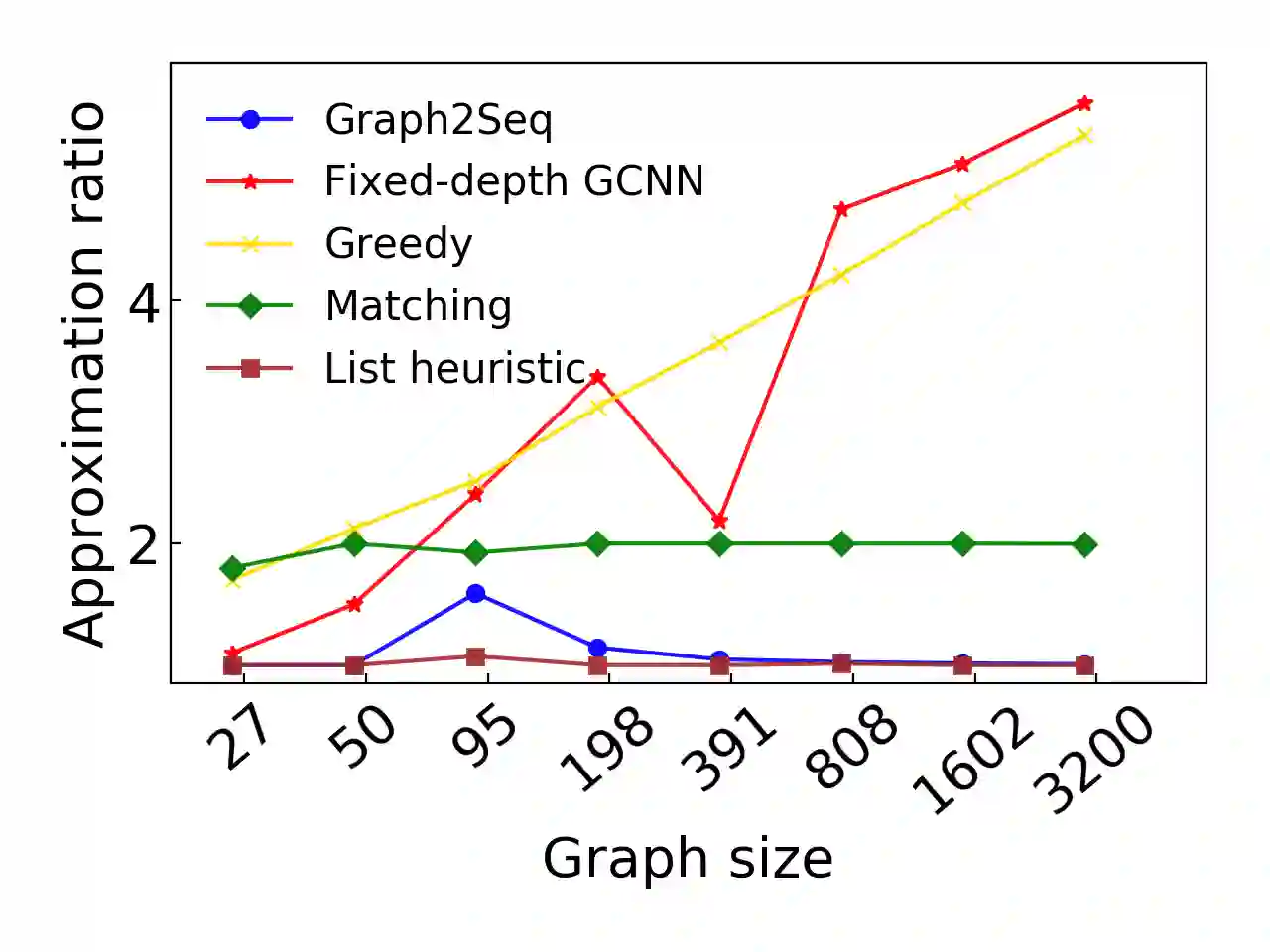

Neural networks have been shown to be an effective tool for learning algorithms over graph-structured data. However, graph representation techniques--that convert graphs to real-valued vectors for use with neural networks--are still in their infancy. Recent works have proposed several approaches (e.g., graph convolutional networks), but these methods have difficulty scaling and generalizing to graphs with different sizes and shapes. We present Graph2Seq, a new technique that represents graphs as an infinite time-series. By not limiting the representation to a fixed dimension, Graph2Seq scales naturally to graphs of arbitrary sizes and shapes. Graph2Seq is also reversible, allowing full recovery of the graph structure from the sequence. By analyzing a formal computational model for graph representation, we show that an unbounded sequence is necessary for scalability. Our experimental results with Graph2Seq show strong generalization and new state-of-the-art performance on a variety of graph combinatorial optimization problems.

翻译:神经网络已被证明是学习图形结构数据的算法的有效工具。 然而,图形代表技术 — 将图形转换成实际值矢量,供神经网络使用 — 仍处于初级阶段。最近的工作提出了几种方法(如图形革命网络),但这些方法难以向不同大小和形状的图表缩放和概括。我们展示了图2Seq,这是一种以无限的时间序列代表图形的新技术。通过不将表示限制为固定尺寸,图2Seq 比例表自然限于任意大小和形状的图表。图2Seq 也可以反转,允许从序列中完全恢复图形结构。通过分析一个正式的图形表示计算模型,我们显示一个无限制的序列对于可缩放性是必要的。我们用图2Seq 的实验结果显示了各种图形组合优化问题的强烈概括性和新状态性表现。