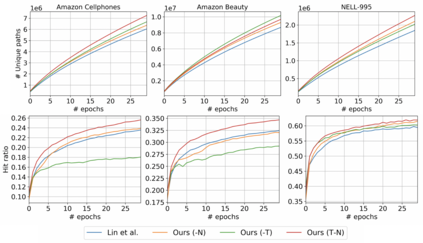

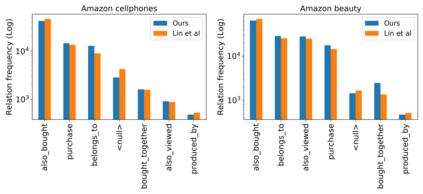

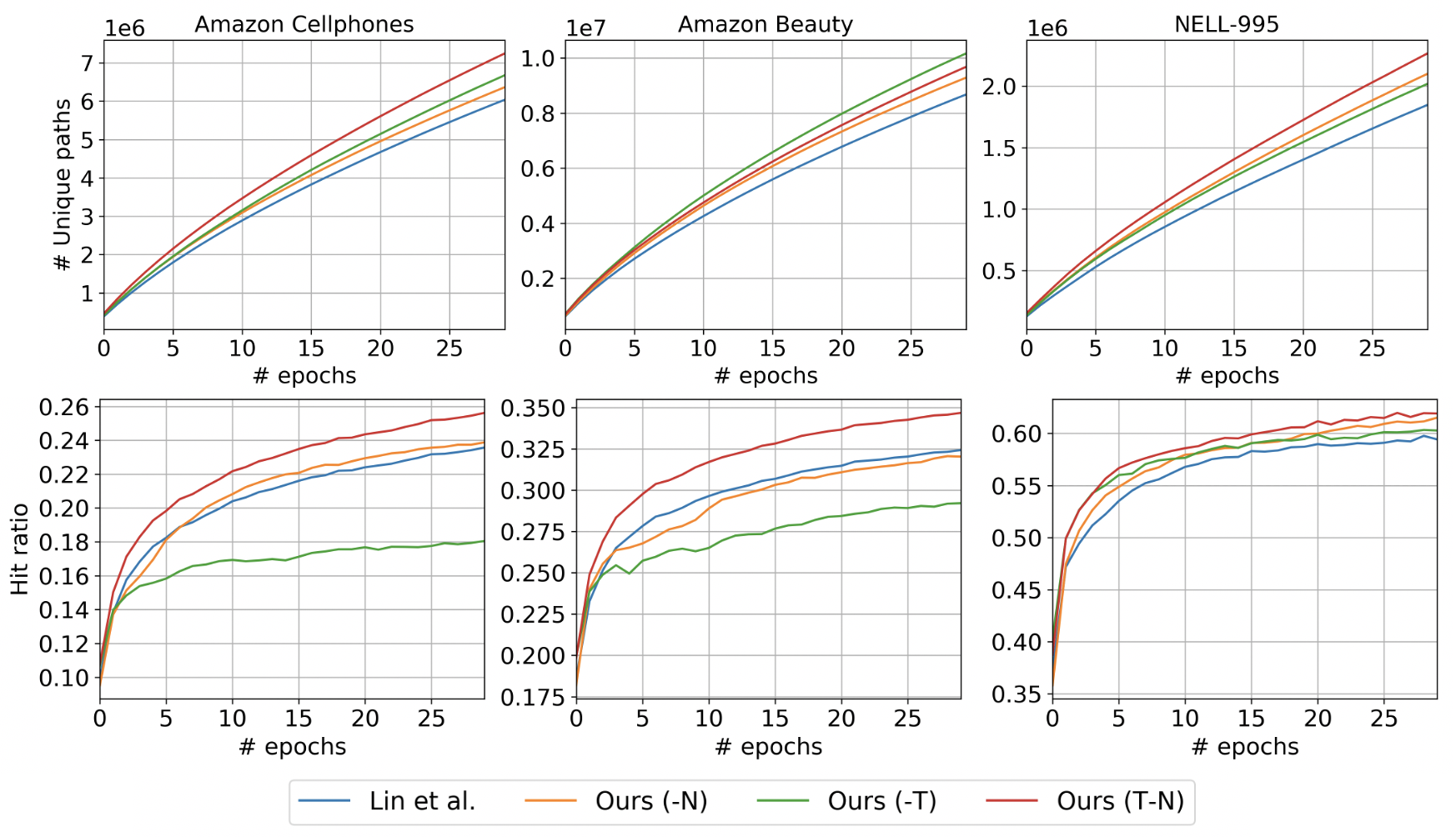

Path-based relational reasoning over knowledge graphs has become increasingly popular because of its many downstream applications, such as question answering in dialogue systems, fact prediction, and recommender systems. In recent years, reinforcement learning (RL) has provided solutions that are more interpretable and explainable than other deep learning models. However, these solutions still face several challenges, including a large action space for the RL agent and the difficulty of accurately representing the neighborhood structure of an entity. We address these problems by introducing a type-enhanced RL agent that uses local neighborhood information for efficient path-based reasoning over knowledge graphs. Our solution uses a graph neural network (GNN) to encode the neighborhood information and uses entity types to prune the action space. Experiments on a real-world dataset show that our method outperforms state-of-the-art RL methods and discovers more novel paths during training.
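
The abstract does not say how entity types are used to prune the action space, so the following is only a minimal sketch of one plausible reading: at each step the agent keeps only those outgoing edges whose target entity has a type in an allowed set. The toy graph, the type labels, and the names `build_adjacency` and `prune_actions` are all hypothetical and are not taken from the paper.

```python
from collections import defaultdict

# Toy knowledge graph as (head, relation, tail) triples.
# Every entity, relation, and type below is invented for illustration.
TRIPLES = [
    ("alice", "works_at", "acme"),
    ("alice", "lives_in", "paris"),
    ("alice", "friend_of", "bob"),
    ("bob", "works_at", "acme"),
    ("acme", "located_in", "paris"),
]

ENTITY_TYPES = {
    "alice": "person",
    "bob": "person",
    "acme": "company",
    "paris": "city",
}


def build_adjacency(triples):
    """Map each head entity to its outgoing (relation, tail) actions."""
    adjacency = defaultdict(list)
    for head, relation, tail in triples:
        adjacency[head].append((relation, tail))
    return adjacency


def prune_actions(entity, adjacency, entity_types, allowed_types):
    """Keep only actions whose target entity has an allowed type.

    Assumption: the set of allowed types is given. In a real agent it
    would presumably be derived from the query or learned.
    """
    return [
        (relation, tail)
        for relation, tail in adjacency.get(entity, [])
        if entity_types.get(tail) in allowed_types
    ]


adjacency = build_adjacency(TRIPLES)
all_actions = adjacency["alice"]
pruned = prune_actions("alice", adjacency, ENTITY_TYPES, {"company", "city"})
print(len(all_actions), len(pruned))  # prints "3 2"
```

In this sketch the agent at `alice` chooses among two actions instead of three. On a real knowledge graph, where an entity can have thousands of outgoing edges, a filter of this kind is one way the action space could shrink before the policy scores the remaining candidates.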
