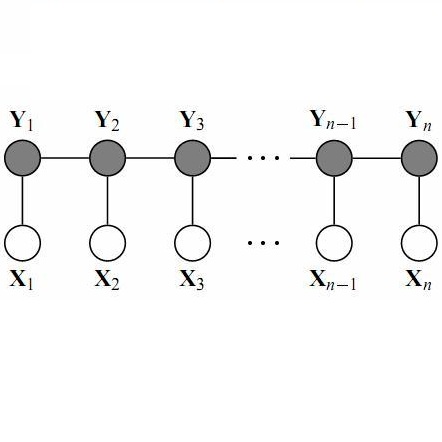

A major challenge in structured prediction is to represent the interdependencies within output structures. When outputs are structured as sequences, linear-chain conditional random fields (CRFs) are a widely used model class which can learn *local* dependencies in the output. However, the CRF's Markov assumption makes it impossible for CRFs to represent distributions with *nonlocal* dependencies, and standard CRFs are unable to respect nonlocal constraints of the data (such as global arity constraints on output labels). We present a generalization of CRFs that can enforce a broad class of constraints, including nonlocal ones, by specifying the space of possible output structures as a regular language $\mathcal{L}$. The resulting regular-constrained CRF (RegCCRF) has the same formal properties as a standard CRF, but assigns zero probability to all label sequences not in $\mathcal{L}$. Notably, RegCCRFs can incorporate their constraints during training, while related models only enforce constraints during decoding. We prove that constrained training is never worse than constrained decoding, and show empirically that it can be substantially better in practice. Additionally, we demonstrate a practical benefit on downstream tasks by incorporating a RegCCRF into a deep neural model for semantic role labeling, exceeding state-of-the-art results on a standard dataset.
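The idea of a CRF that puts zero probability outside a regular language $\mathcal{L}$ can be illustrated with a small sketch. Assuming $\mathcal{L}$ is given as a deterministic finite automaton (DFA), one simple way to get such a distribution is to run the forward and Viterbi recursions over pairs of (automaton state, label) and keep only paths that end in an accepting state. This is a simplified illustration, not the authors' RegCCRF construction: here the potentials are plain emission and transition tables that do not depend on the automaton state, and the names `DFA`, `constrained_log_z`, `constrained_log_prob`, `constrained_viterbi`, and the toy "exactly one A" arity constraint are all hypothetical.

```python
import math

NEG_INF = float("-inf")

def logsumexp(xs):
    """Numerically stable log(sum(exp(x))) that tolerates -inf entries."""
    finite = [x for x in xs if x != NEG_INF]
    if not finite:
        return NEG_INF
    m = max(finite)
    return m + math.log(sum(math.exp(x - m) for x in finite))

class DFA:
    """Hypothetical deterministic automaton over label indices; a missing arc means 'reject'."""
    def __init__(self, start, accept, delta):
        self.start, self.accept, self.delta = start, set(accept), delta
    def step(self, q, y):
        return self.delta.get((q, y))  # None plays the role of a dead state
    def accepts(self, labels):
        q = self.start
        for y in labels:
            q = self.step(q, y)
            if q is None:
                return False
        return q in self.accept

def score(emit, trans, labels):
    """Unnormalized log-potential of one label sequence in a linear-chain CRF."""
    s = emit[0][labels[0]]
    for t in range(1, len(labels)):
        s += trans[labels[t - 1]][labels[t]] + emit[t][labels[t]]
    return s

def constrained_log_z(emit, trans, dfa):
    """Forward algorithm over (DFA state, current label) pairs."""
    # The DFA is deterministic, so each label sequence follows exactly one path:
    # the sum below covers exactly the sequences in the language, once each.
    K = len(emit[0])
    alpha = {(dfa.step(dfa.start, y), y): emit[0][y]
             for y in range(K) if dfa.step(dfa.start, y) is not None}
    for t in range(1, len(emit)):
        incoming = {}
        for (q, y_prev), a in alpha.items():
            for y in range(K):
                q2 = dfa.step(q, y)
                if q2 is not None:
                    incoming.setdefault((q2, y), []).append(a + trans[y_prev][y] + emit[t][y])
        alpha = {key: logsumexp(vals) for key, vals in incoming.items()}
    return logsumexp([a for (q, _), a in alpha.items() if q in dfa.accept])

def constrained_log_prob(emit, trans, dfa, labels):
    """log p(labels | x), normalized over the language only; -inf outside it."""
    if not dfa.accepts(labels):
        return NEG_INF
    return score(emit, trans, labels) - constrained_log_z(emit, trans, dfa)

def constrained_viterbi(emit, trans, dfa):
    """Best-scoring label sequence accepted by the DFA (None if there is none)."""
    K = len(emit[0])
    best = {(dfa.step(dfa.start, y), y): emit[0][y]
            for y in range(K) if dfa.step(dfa.start, y) is not None}
    back = []  # back[t - 1][(q, y) at position t] = (q, y) at position t - 1
    for t in range(1, len(emit)):
        nxt, ptr = {}, {}
        for (q, y_prev), s in best.items():
            for y in range(K):
                q2 = dfa.step(q, y)
                cand = s + trans[y_prev][y] + emit[t][y]
                if q2 is not None and cand > nxt.get((q2, y), NEG_INF):
                    nxt[(q2, y)], ptr[(q2, y)] = cand, (q, y_prev)
        best = nxt
        back.append(ptr)
    finals = [(s, key) for key, s in best.items() if key[0] in dfa.accept]
    if not finals:
        return None
    key = max(finals)[1]
    path = [key[1]]
    for ptr in reversed(back):  # follow back-pointers from the last position
        key = ptr[key]
        path.append(key[1])
    return path[::-1]

# Hypothetical toy setup: labels O=0 and A=1; the language allows exactly one A.
EXACTLY_ONE_A = DFA(start=0, accept={1}, delta={(0, 0): 0, (0, 1): 1, (1, 0): 1})
ANY = DFA(start=0, accept={0}, delta={(0, 0): 0, (0, 1): 0})  # plain, unconstrained CRF
EMIT = [[0.0, 2.0], [0.0, 1.0], [0.0, 3.0]]  # emit[t][label]
TRANS = [[0.0, 0.0], [0.0, 0.0]]  # trans[prev_label][label]

if __name__ == "__main__":
    print(constrained_viterbi(EMIT, TRANS, ANY))  # [1, 1, 1], violates the constraint
    print(constrained_viterbi(EMIT, TRANS, EXACTLY_ONE_A))  # [0, 0, 1]
    print(constrained_log_prob(EMIT, TRANS, EXACTLY_ONE_A, [1, 1, 1]))  # -inf
```

In this sketch, constrained training would maximize `constrained_log_prob`, which normalizes only over $\mathcal{L}$, whereas constrained decoding would train with the ordinary normalizer (`constrained_log_z` with the accept-everything `ANY` automaton) and apply `EXACTLY_ONE_A` only inside `constrained_viterbi`. For fixed parameters both choose the same output, so in this simplified setting any difference between the two approaches comes from the parameters each objective learns.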
