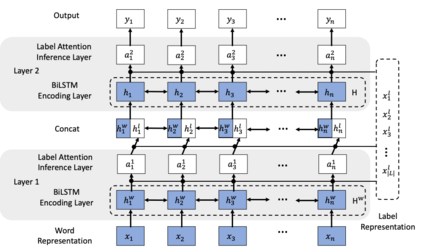

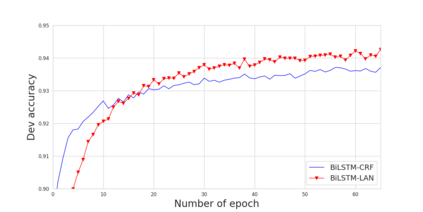

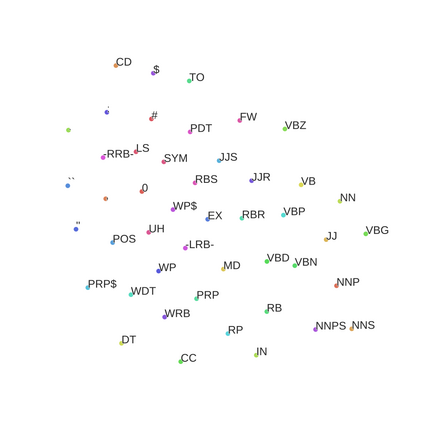

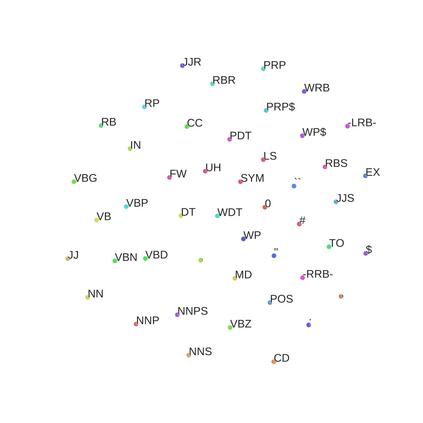

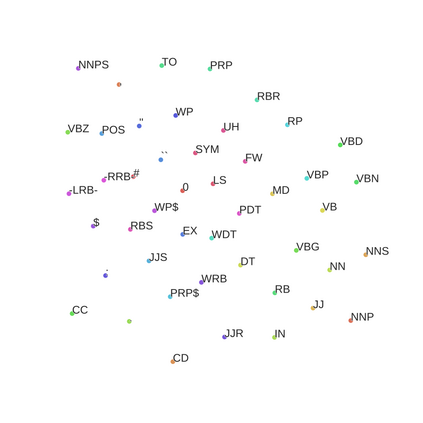

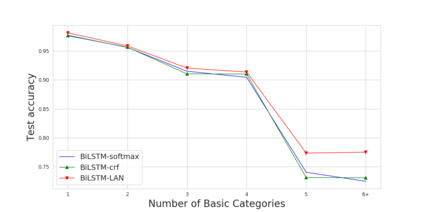

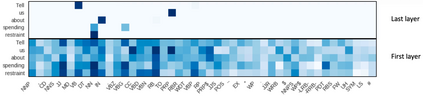

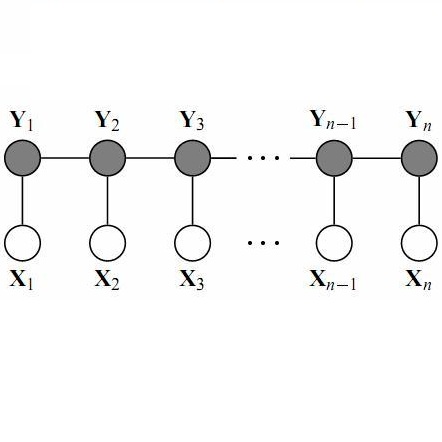

Conditional random fields (CRFs) have long been a powerful model for statistical sequence labeling. For neural sequence labeling, however, BiLSTM-CRF does not always outperform BiLSTM-softmax local classification. One possible reason is that the simple Markov label-transition model of a CRF adds little information beyond what a strong neural encoder already captures. To represent label sequences better, we investigate a hierarchically-refined label attention network, which explicitly leverages label embeddings and captures potential long-term label dependencies by giving each word an incrementally refined label distribution through hierarchical attention. Results on POS tagging, NER, and CCG supertagging show that the proposed model not only improves overall tagging accuracy with a similar number of parameters, but also significantly speeds up training and testing compared with BiLSTM-CRF.
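To make the idea of incrementally refined label distributions concrete, here is a minimal sketch of stacked label attention. It is an illustration under stated assumptions, not the model as published: the abstract does not specify the attention form, so the sketch assumes scaled dot-product attention between word states and label embeddings, replaces learned encoder layers with a simple additive update, and uses hypothetical function names (`label_attention`, `refine`, `predict`).

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def label_attention(word_states, label_embeddings):
    """One attention layer: every word state queries all label embeddings.

    Returns (distributions, contexts), where distributions[i][j] is the
    attention weight of word i on label j, and contexts[i] is the
    attention-weighted sum of label embeddings for word i.
    """
    dim = len(label_embeddings[0])
    scale = math.sqrt(dim)  # assumed scaled dot-product scoring
    distributions, contexts = [], []
    for state in word_states:
        scores = [
            sum(s * e for s, e in zip(state, emb)) / scale
            for emb in label_embeddings
        ]
        dist = softmax(scores)
        context = [
            sum(dist[j] * label_embeddings[j][k] for j in range(len(dist)))
            for k in range(dim)
        ]
        distributions.append(dist)
        contexts.append(context)
    return distributions, contexts


def refine(word_states, label_embeddings, num_layers=2):
    """Stack attention layers so each layer sees label-aware word states.

    Assumption: word state and label context are merged by addition; a
    trained model would use learned layers here instead.
    """
    states = word_states
    dists = None
    for _ in range(num_layers):
        dists, contexts = label_attention(states, label_embeddings)
        states = [
            [s + c for s, c in zip(state, context)]
            for state, context in zip(states, contexts)
        ]
    return dists


def predict(dists):
    """Pick the highest-weight label index for each word."""
    return [max(range(len(d)), key=d.__getitem__) for d in dists]


# Toy data: two labels with orthogonal embeddings, two words.
LABELS = [[1.0, 0.0], [0.0, 1.0]]
WORDS = [[2.0, 0.0], [0.0, 3.0]]
```

Calling `predict(refine(WORDS, LABELS))` tags each toy word with the label whose embedding it aligns with. Comparing `refine(WORDS, LABELS, num_layers=1)` against `num_layers=2` shows the distribution becoming more peaked at the second layer, which is the refinement effect the abstract describes. Because each word's distribution is computed without a Viterbi-style pass over transitions, a design like this avoids the sequential decoding step of a CRF.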
