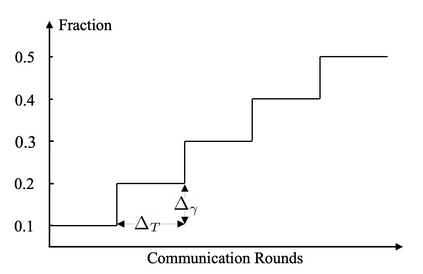

Federated learning (FL) trains a global machine learning model while preserving data privacy, since it never needs access to the data stored locally at the clients. However, FL suffers from performance degradation when the client data distribution is non-IID, and training for longer to counter this degradation may be infeasible under communication constraints. To address this challenge, we propose $\texttt{AdaFL}$, a new adaptive training algorithm with two components: (i) an attention-based client selection mechanism that yields a fairer training scheme across clients; and (ii) a dynamic fraction method that balances the trade-off between performance stability and communication efficiency. Experimental results show that $\texttt{AdaFL}$ outperforms the standard $\texttt{FedAvg}$ algorithm. It can also be incorporated into various state-of-the-art FL algorithms to improve them further in three respects: model accuracy, performance stability, and communication efficiency.
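The abstract does not specify the selection rule or the fraction schedule, so the sketch below is illustrative only. `fedavg_aggregate` is the standard FedAvg step: it averages client parameters, weighting each client by its local dataset size. The remaining helpers are assumptions standing in for the two AdaFL components and are not the authors' method. `selection_probs` applies a softmax over hypothetical per-client scores, and `dynamic_fraction` is a linear schedule with hypothetical bounds `c_min` and `c_max`. Local training is replaced by random perturbations.

```python
import numpy as np


def fedavg_aggregate(client_params, client_sizes):
    """FedAvg step: average client parameter vectors weighted by local dataset size."""
    sizes = np.asarray(client_sizes, dtype=float)
    coeffs = sizes / sizes.sum()            # n_k / n for each participating client
    stacked = np.stack(client_params)       # shape: (num_clients, num_params)
    return coeffs @ stacked                 # weighted sum over clients


def selection_probs(scores, temperature=1.0):
    """HYPOTHETICAL attention-style weighting: softmax over per-client scores."""
    z = np.asarray(scores, dtype=float) / temperature
    z = z - z.max()                         # subtract max for numerical stability
    p = np.exp(z)
    return p / p.sum()


def dynamic_fraction(round_idx, total_rounds, c_min=0.1, c_max=0.5):
    """HYPOTHETICAL schedule: client fraction grows linearly from c_min to c_max."""
    t = round_idx / max(total_rounds - 1, 1)
    return c_min + (c_max - c_min) * t


def select_clients(rng, scores, fraction):
    """Sample round(fraction * N) distinct clients (at least one), biased toward higher scores."""
    n = len(scores)
    m = min(n, max(1, int(round(fraction * n))))
    return rng.choice(n, size=m, replace=False, p=selection_probs(scores))


def run_demo(num_clients=10, num_params=4, rounds=5, seed=0):
    """Toy FL loop: hypothetical selection + schedule, then FedAvg aggregation."""
    rng = np.random.default_rng(seed)
    global_w = np.zeros(num_params)
    scores = rng.uniform(0.5, 2.0, size=num_clients)   # stand-in scores, e.g. recent local losses
    sizes = rng.integers(50, 200, size=num_clients)    # local dataset sizes
    picked_per_round = []
    for r in range(rounds):
        chosen = select_clients(rng, scores, dynamic_fraction(r, rounds))
        picked_per_round.append(len(chosen))
        # Stand-in for local training: each chosen client perturbs the global model.
        local = [global_w + rng.normal(size=num_params) for _ in chosen]
        global_w = fedavg_aggregate(local, sizes[chosen])
    return global_w, picked_per_round


if __name__ == "__main__":
    w, counts = run_demo()
    print(counts)  # number of clients sampled in each round
```

In this toy setup, a larger fraction means more client uploads per round (more communication) but an average over more clients. That is the kind of stability-versus-communication trade-off the dynamic fraction component is described as balancing.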