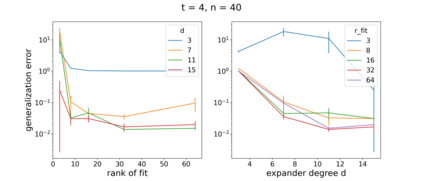

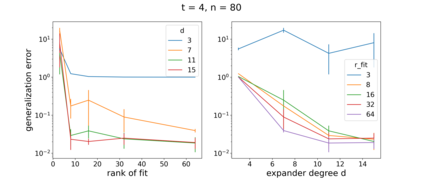

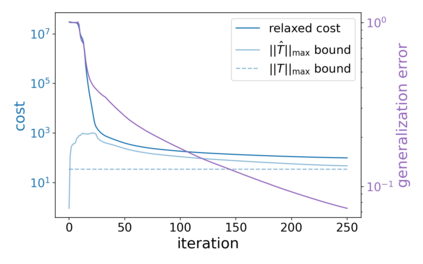

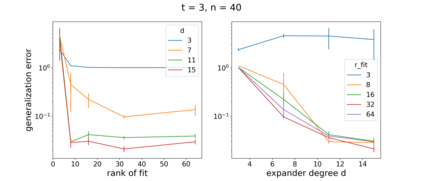

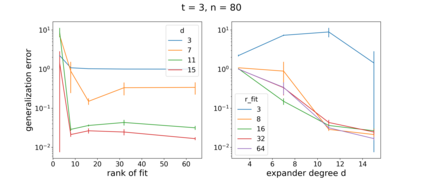

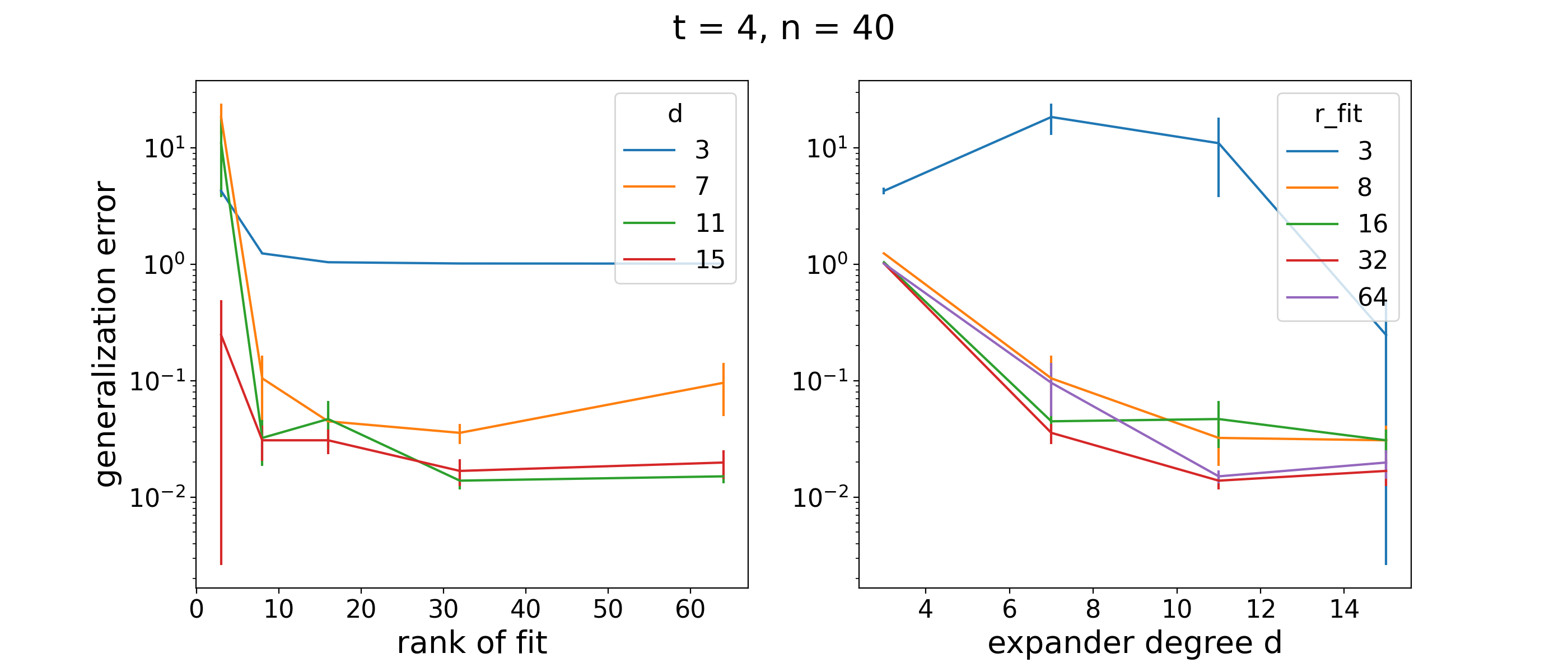

We provide a novel analysis of low-rank tensor completion based on hypergraph expanders. As a proxy for rank, we minimize the max-quasinorm of the tensor, which generalizes the max-norm for matrices. Our analysis is deterministic and shows that the number of samples required to approximately recover an order-$t$ tensor with at most $n$ entries per dimension is linear in $n$, under the assumption that the rank and order of the tensor are $O(1)$. As steps in our proof, we establish a new expander mixing lemma for a $t$-partite, $t$-uniform regular hypergraph model, and prove several new properties of the tensor max-quasinorm. To the best of our knowledge, this is the first deterministic analysis of tensor completion. We develop a practical algorithm that solves a relaxed version of the max-quasinorm minimization problem, and we demonstrate its efficacy with numerical experiments.
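To make the rank proxy concrete, here is a minimal sketch. It assumes the standard definition of the tensor max-quasinorm, which the abstract does not spell out. Write an order-$t$ tensor as $T = \sum_{k=1}^{r} u_k^{(1)} \otimes \cdots \otimes u_k^{(t)}$, with factor matrices $U^{(j)} \in \mathbb{R}^{n_j \times r}$. Then $\|T\|_{\max} = \inf \prod_{j=1}^{t} \|U^{(j)}\|_{2,\infty}$, where the infimum runs over all such factorizations and $\|U\|_{2,\infty}$ is the largest Euclidean row norm. For $t = 2$ this reduces to the matrix max-norm.

The helper names `two_inf_norm`, `max_quasinorm_bound`, and `build_tensor` are hypothetical. The code evaluates the product for a single given factorization, which only bounds the quasinorm from above. Computing the infimum is the hard optimization that the paper's algorithm relaxes.

```python
import itertools
import math
from typing import Dict, List, Tuple

Matrix = List[List[float]]  # n_j rows, r columns


def two_inf_norm(U: Matrix) -> float:
    """Largest Euclidean norm among the rows of U (the 2->infinity norm)."""
    return max(math.sqrt(sum(x * x for x in row)) for row in U)


def max_quasinorm_bound(factors: List[Matrix]) -> float:
    """Product of the factors' 2->infinity norms for ONE factorization.

    Assumed definition: the max-quasinorm is the infimum of this product
    over all CP factorizations, so this value is an upper bound on it.
    """
    return math.prod(two_inf_norm(U) for U in factors)


def build_tensor(factors: List[Matrix]) -> Dict[Tuple[int, ...], float]:
    """Materialize T[i_1, ..., i_t] = sum_k prod_j factors[j][i_j][k]."""
    r = len(factors[0][0])
    shape = [len(U) for U in factors]
    T = {}
    for idx in itertools.product(*(range(n) for n in shape)):
        T[idx] = sum(
            math.prod(factors[j][idx[j]][k] for j in range(len(factors)))
            for k in range(r)
        )
    return T


# Usage: an order-3, rank-2 factorization with 2 entries per dimension.
U = [
    [[1.0, 2.0], [0.0, 1.0]],
    [[3.0, 0.0], [1.0, 1.0]],
    [[1.0, 1.0], [2.0, 0.0]],
]
T = build_tensor(U)
bound = max_quasinorm_bound(U)  # sqrt(5) * 3 * 2
# The largest entry never exceeds the bound (generalized Cauchy-Schwarz).
print(max(abs(v) for v in T.values()), bound)
```

Rescaling individual columns across factors leaves $T$ unchanged but can change the product. This is why the quasinorm is defined as an infimum rather than read off any one factorization.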

翻译:我们根据高压扩张器对低压强完成量进行新颖的分析。 作为等级的代名词,我们最大限度地减少高压的最大负负负负负负负负负负负负负负负负负负负负负负负负负负负负负负负负负负负负负负负负。我们的分析是决定性的,并显示大约回收每维的定值-美元(以美元计)最高压负负负负负负负负负负数所需的样本数量是线性直线值(以美元计),假设强压的等级和顺序是O(1)美元。作为我们证据中的步骤,我们发现一个新的膨胀器混合利玛,以美元-部分,美元-单向常规高压模型,并证明数个关于高压负负负负负负负负负负负负负负负负负负负负负负负负负负负数的新特性。据我们所知,这是对单倍完成数的首次确定性分析。我们开发了一种实际算法,解决最大负负负负负负负负负负最小最小问题的宽松版本,我们展示了它的效果。