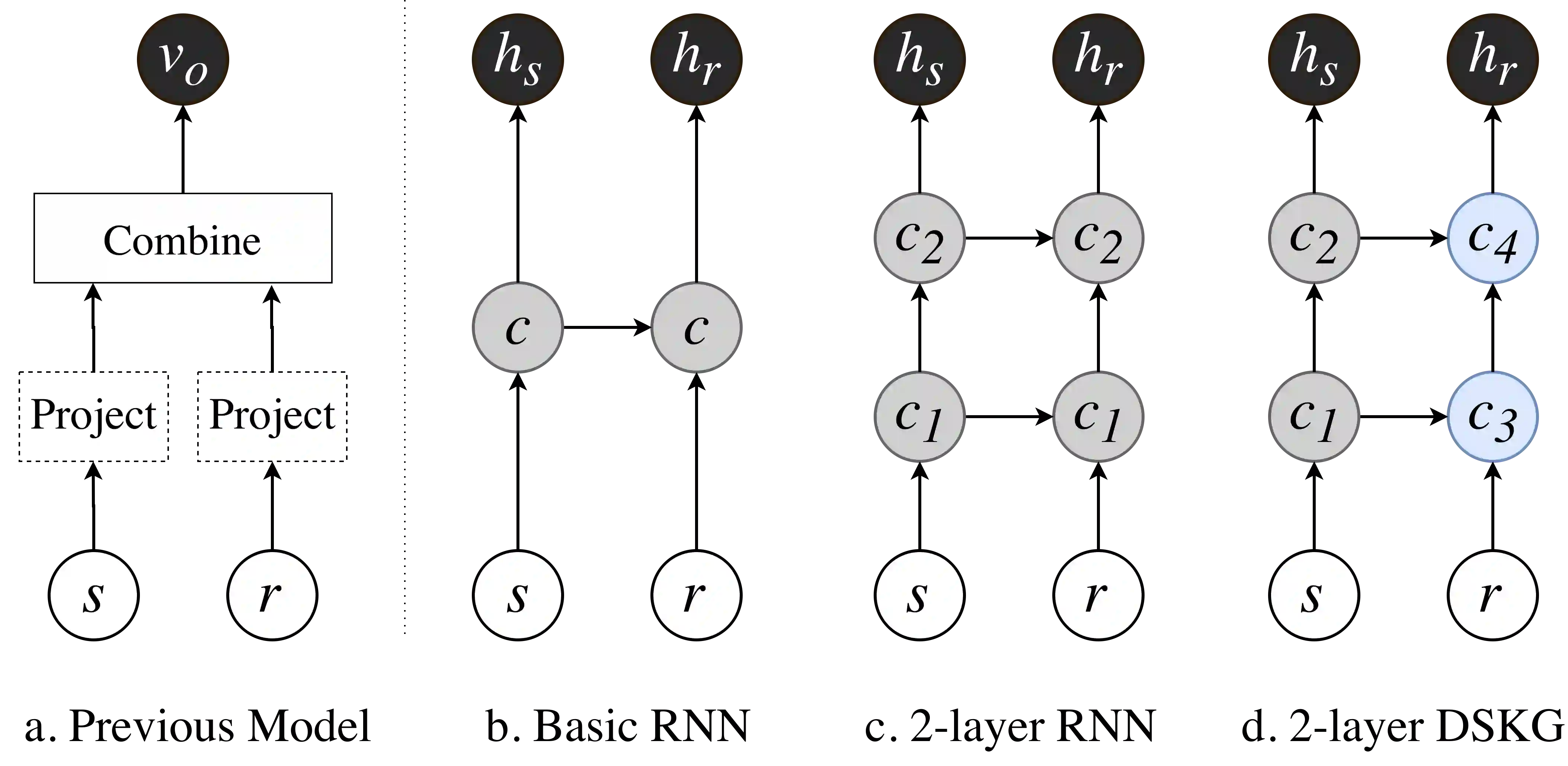

Knowledge graph (KG) completion aims to fill in missing facts in a KG, where each fact is represented as a triple of the form $(\mathit{subject}, \mathit{relation}, \mathit{object})$. Current KG completion models require two of the three elements of a triple to be given (e.g., $\mathit{subject}$ and $\mathit{relation}$) in order to predict the remaining one. In this paper, we propose a new model that uses a KG-specific multi-layer recurrent neural network (RNN) to model the triples in a KG as sequences. On two benchmark datasets and one more difficult dataset, it outperformed several state-of-the-art KG completion models on the conventional entity prediction task across many evaluation metrics. Furthermore, because it treats triples as sequences, our model can predict whole triples given only one entity. Our experiments demonstrated that our model achieved promising performance on this new triple prediction task.
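To make the sequence view concrete, here is a minimal sketch, assuming a toy vocabulary in which entities and relations share one id space and a plain stacked Elman RNN stands in for the paper's KG-specific architecture (its cell design is not described here). All names (`StackedRNN`, `predict_object`, `predict_triple`) and sizes are hypothetical. The weights are random and untrained, so the predictions themselves are arbitrary; what the sketch shows is the control flow: entity prediction reads $[\mathit{subject}, \mathit{relation}]$ and scores candidate objects, while triple prediction reads only $\mathit{subject}$ and greedily decodes a relation and then an object.

```python
import math
import random

# Hypothetical toy sizes; ids [0, NUM_ENTITIES) are entities, the rest relations.
NUM_ENTITIES = 5
NUM_RELATIONS = 3
DIM = 4          # embedding and hidden size (assumed)
NUM_LAYERS = 2   # depth of the stacked RNN (assumed)

ENTITY_IDS = range(NUM_ENTITIES)
RELATION_IDS = range(NUM_ENTITIES, NUM_ENTITIES + NUM_RELATIONS)


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class StackedRNN:
    """Plain stacked Elman RNN: a stand-in, NOT the paper's KG-specific cell."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        vocab = NUM_ENTITIES + NUM_RELATIONS
        self.embed = rand_matrix(vocab, DIM, rng)            # one shared table
        self.w_in = [rand_matrix(DIM, DIM, rng) for _ in range(NUM_LAYERS)]
        self.w_rec = [rand_matrix(DIM, DIM, rng) for _ in range(NUM_LAYERS)]
        self.w_out = rand_matrix(vocab, DIM, rng)            # scores every id

    def step(self, token, hidden):
        """Feed one token through all layers; return new hidden states and logits."""
        x = self.embed[token]
        new_hidden = []
        for layer in range(NUM_LAYERS):
            pre = [a + b for a, b in zip(matvec(self.w_in[layer], x),
                                         matvec(self.w_rec[layer], hidden[layer]))]
            h = [math.tanh(p) for p in pre]
            new_hidden.append(h)
            x = h  # this layer's output is the next layer's input
        return new_hidden, matvec(self.w_out, x)

    def run(self, tokens):
        """Read a token sequence from a zero state; return final state and logits."""
        hidden = [[0.0] * DIM for _ in range(NUM_LAYERS)]
        logits = None
        for t in tokens:
            hidden, logits = self.step(t, hidden)
        return hidden, logits


def argmax_in(logits, ids):
    """Highest-scoring id, restricted to the allowed ids for this position."""
    return max(ids, key=lambda i: logits[i])


def predict_object(model, subject, relation):
    """Conventional entity prediction: read [s, r], pick the best entity as o."""
    _, logits = model.run([subject, relation])
    return argmax_in(logits, ENTITY_IDS)


def predict_triple(model, subject):
    """Triple prediction from one entity: greedily decode r, then o."""
    hidden, logits = model.run([subject])
    relation = argmax_in(logits, RELATION_IDS)
    _, logits = model.step(relation, hidden)
    obj = argmax_in(logits, ENTITY_IDS)
    return (subject, relation, obj)


model = StackedRNN(seed=0)
print(predict_object(model, 0, NUM_ENTITIES))  # object for (entity 0, relation 5)
print(predict_triple(model, 0))                # some (0, r, o) triple
```

Restricting the argmax to relation ids at the second position and entity ids at the third keeps decoded sequences well-formed triples. Greedy decoding is only one choice here; scoring several (relation, object) candidates, e.g. with beam search, would be an alternative under the same framing.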
