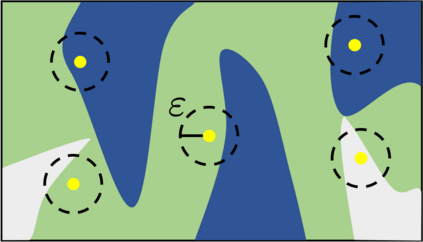

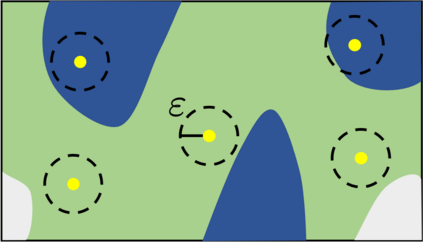

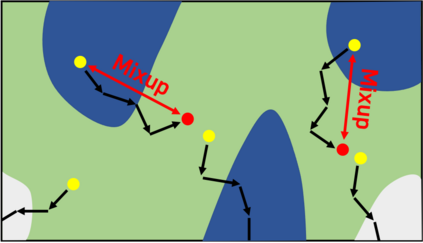

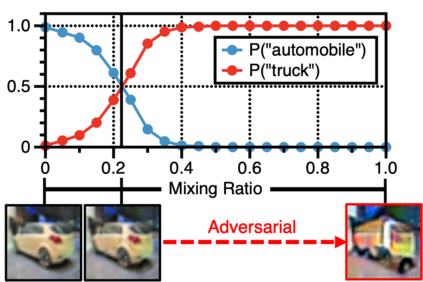

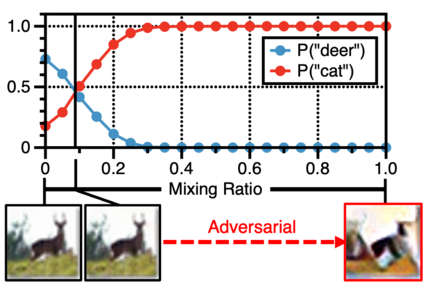

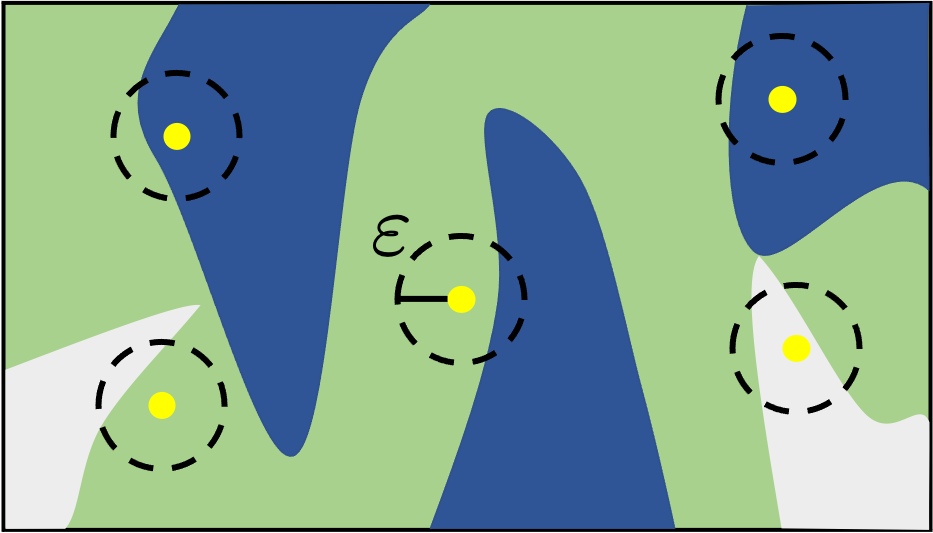

Randomized smoothing is currently a state-of-the-art method for constructing certifiably robust classifiers from neural networks against $\ell_2$-adversarial perturbations. Under this paradigm, the robustness of a classifier is aligned with its prediction confidence, i.e., higher confidence from a smoothed classifier implies better robustness. This motivates us to rethink the fundamental trade-off between accuracy and robustness in terms of calibrating the confidences of a smoothed classifier. In this paper, we propose a simple training scheme, coined SmoothMix, to control the robustness of smoothed classifiers via self-mixup: it trains on convex combinations of samples along the direction of adversarial perturbation for each input. The proposed procedure effectively identifies over-confident, near off-class samples as a cause of limited robustness in the case of smoothed classifiers, and offers an intuitive way to adaptively set a new decision boundary between these samples for better robustness. Our experimental results demonstrate that the proposed method can significantly improve the certified $\ell_2$-robustness of smoothed classifiers compared to existing state-of-the-art robust training methods.
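As a minimal sketch (not the authors' implementation), the Python below illustrates two ingredients the abstract refers to. `certified_radius` uses the standard Gaussian randomized-smoothing bound of Cohen et al. (2019), $R = \sigma\,\Phi^{-1}(\underline{p_A})$, which grows monotonically with the lower bound $\underline{p_A}$ on the top-class probability; this is the sense in which higher confidence implies a larger certified $\ell_2$ radius. `self_mixup` forms the convex combination $(1-\lambda)\,x + \lambda\,x_{\mathrm{adv}}$ along the adversarial direction, assuming the adversarial point `x_adv` has already been found by some attack (not shown). The helper `mixed_target`, which shifts a soft label toward the uniform distribution as $\lambda$ grows, is a hypothetical labeling choice for illustration only; all function names here are illustrative.

```python
from statistics import NormalDist


def certified_radius(p_a_lower, sigma):
    """l2 radius certified by Gaussian randomized smoothing (Cohen et al., 2019).

    p_a_lower: lower bound on the probability that the base classifier
    predicts the top class under N(0, sigma^2 I) input noise.
    Returns None (abstain) unless 0.5 < p_a_lower < 1.
    """
    if not 0.5 < p_a_lower < 1.0:
        return None  # no certificate can be issued
    # Larger p_a_lower (higher confidence) gives a strictly larger radius.
    return sigma * NormalDist().inv_cdf(p_a_lower)


def self_mixup(x, x_adv, lam):
    """Convex combination (1 - lam) * x + lam * x_adv along the adversarial direction."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    return [(1.0 - lam) * xi + lam * ai for xi, ai in zip(x, x_adv)]


def mixed_target(p_clean, lam):
    """HYPOTHETICAL soft label: interpolate the prediction at x toward uniform.

    This labeling rule is an assumption for illustration, not taken from the text.
    """
    k = len(p_clean)
    return [(1.0 - lam) * p + lam / k for p in p_clean]


if __name__ == "__main__":
    for p in (0.9, 0.99):
        print(p, certified_radius(p, sigma=0.25))
    print(self_mixup([0.0, 1.0], [1.0, 0.0], lam=0.25))
    print(mixed_target([1.0, 0.0], lam=0.5))
```

For example, with $\sigma = 0.25$, raising $\underline{p_A}$ from 0.9 to 0.99 increases the certified radius from about 0.32 to about 0.58, which is why over-confident predictions near an off-class region translate directly into large (and possibly misplaced) certificates.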

翻译:目前,通过随机扰动,从神经网络中构建一个由神经网络用美元=2美元对抗性扰动来控制平稳叙级器的稳健性。在这种范式下,一个叙级器的稳健性与预测信心相一致,也就是说,一个平滑的叙级器的更自信意味着更稳健性。这促使我们重新思考调适一个平滑的叙级器信任度的准确性和稳健性之间的基本权衡。在本文中,我们提出了一个简单的培训计划,即硬拷贝平滑混合,以通过自我混合控制平滑的叙级器的稳健性:按照每项投入的对抗性渗透性对立方向,对样品的结节式组合进行训练。拟议的程序有效地查明了过度自信、近舱外采样在平滑的叙级器中造成有限的稳健性,并为调整这些样品之间的新的决定边界以更稳健性。我们的实验结果表明,拟议的方法可以大大改进经认证的美元=稳健的现有州级培训方法。