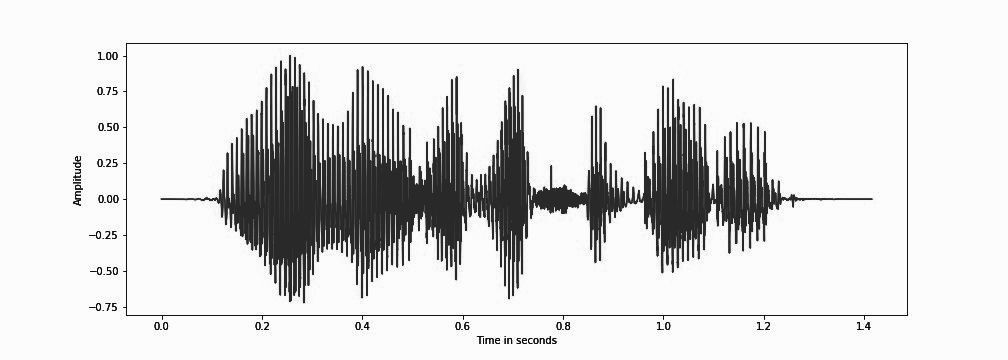

Large-scale, weakly-supervised speech recognition models, such as Whisper, have demonstrated impressive results on speech recognition across domains and languages. However, applying them to long audio via buffered or sliding-window transcription is prone to drifting, hallucination, and repetition, and the sequential nature of these approaches prohibits batched transcription. Further, the timestamps corresponding to each utterance are prone to inaccuracies, and word-level timestamps are not available out of the box. To overcome these challenges, we present WhisperX, a time-accurate speech recognition system with word-level timestamps utilising voice activity detection and forced phoneme alignment. In doing so, we demonstrate state-of-the-art performance on long-form transcription and word segmentation benchmarks. Additionally, we show that pre-segmenting audio with our proposed VAD Cut & Merge strategy improves transcription quality and enables a twelve-fold transcription speedup via batched inference.
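
The abstract names the VAD Cut & Merge strategy without spelling out its steps, so the following is only a minimal sketch of one plausible reading, not the authors' implementation. It assumes that VAD output is a list of `(start, end)` speech segments in seconds, that segments longer than a maximum window are cut uniformly (a real system might instead cut at points of low voice activity), and that neighbouring segments are merged greedily while the merged span still fits in the window. The function name `cut_and_merge` and the 30-second default for `max_len` are illustrative assumptions.

```python
import math
from typing import List, Tuple

Segment = Tuple[float, float]  # (start_sec, end_sec) of detected speech


def cut_and_merge(segments: List[Segment], max_len: float = 30.0) -> List[Segment]:
    """Cut over-long speech segments, then merge neighbours up to max_len seconds."""
    # Cut step: split any segment longer than max_len into equal pieces.
    # Assumption: uniform cuts stand in for a smarter cut-point choice.
    pieces: List[Segment] = []
    for start, end in sorted(segments):
        n = max(1, math.ceil((end - start) / max_len))
        step = (end - start) / n
        for i in range(n):
            piece_end = end if i == n - 1 else start + (i + 1) * step
            pieces.append((start + i * step, piece_end))

    # Merge step: extend the current chunk while its total span fits in max_len.
    merged: List[Segment] = []
    for start, end in pieces:
        if merged and end - merged[-1][0] <= max_len:
            merged[-1] = (merged[-1][0], end)
        else:
            merged.append((start, end))
    return merged


vad_segments = [(0.0, 5.0), (6.0, 12.0), (13.0, 28.0), (40.0, 100.0)]
chunks = cut_and_merge(vad_segments)
print(chunks)
```

Because every resulting chunk is bounded by `max_len`, the chunks could in principle be padded to a common length and transcribed independently in a batch, which is the property the abstract credits for the speedup.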
