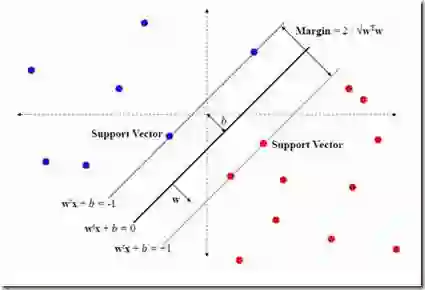

Support Vector Machines have been used successfully for one-class classification (OCSVM, SVDD) when trained on clean data, but they work much worse on dirty data: outliers present in the training data tend to become support vectors and are hence considered "normal". In this article, we improve the effectiveness of detecting outliers in dirty training data with a leave-out strategy: by temporarily omitting one candidate at a time, this point can be judged using the remaining data only. We show that this is more effective at scoring the outlierness of points than using the slack term of existing SVM-based approaches. Identified outliers can then be removed from the data, so that outliers hidden by other outliers can be identified, reducing the problem of masking. Naively, this approach would require training N individual SVMs (and training $O(N^2)$ SVMs when iteratively removing the worst outliers one at a time), which is prohibitively expensive. We explain why only support vectors need to be considered in each step, and how reusing SVM parameters and weights can substantially accelerate this incremental retraining. By removing candidates in batches, we can further reduce the processing time, although it obviously remains more costly than training a single SVM.
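The leave-out scoring and iterative batch removal described above can be sketched in Python as follows. This is a minimal sketch, not the authors' implementation. It assumes scikit-learn's `OneClassSVM` with an RBF kernel as the base model, and the helper names `leave_out_scores` and `remove_outliers` and their parameters are hypothetical. Each support vector is re-scored by a model trained without it, while all other points keep the full model's score, following the observation that only support vectors need to be considered. Under scikit-learn's ν-parameterization this is an approximation, because removing any point changes the training-set size. The accelerations mentioned above (reusing SVM parameters and weights) are not reproduced here: every model is retrained from scratch.

```python
import numpy as np
from sklearn.svm import OneClassSVM


def _fit(X, nu, gamma):
    # RBF one-class SVM with a fixed numeric gamma, so every retrained
    # model uses the same kernel as the full model
    return OneClassSVM(kernel="rbf", nu=nu, gamma=gamma).fit(X)


def leave_out_scores(X, nu=0.1, gamma=1.0):
    """Hypothetical helper: outlier scores, higher = more outlying.

    Each support vector is judged by a model trained without it. All other
    points keep the full model's score (an approximation under the
    nu-parameterization, where removing any point changes the problem).
    """
    X = np.asarray(X, dtype=float)
    full = _fit(X, nu, gamma)
    # decision_function is positive for inliers; negate it so that
    # larger values mean "more outlying"
    scores = -full.decision_function(X)
    keep = np.ones(len(X), dtype=bool)
    for i in full.support_:
        keep[i] = False  # temporarily leave out candidate i
        model = _fit(X[keep], nu, gamma)  # retrained from scratch
        scores[i] = -model.decision_function(X[i:i + 1])[0]
        keep[i] = True
    return scores


def remove_outliers(X, n_outliers, batch_size=1, nu=0.1, gamma=1.0):
    """Hypothetical helper: remove the top-scoring points batch by batch.

    Rescoring after each batch lets outliers that were masked by already
    removed outliers surface. Returns indices into X in removal order.
    """
    X = np.asarray(X, dtype=float)
    remaining = np.arange(len(X))
    removed = []
    while len(removed) < n_outliers and len(remaining) > 2:
        scores = leave_out_scores(X[remaining], nu, gamma)
        k = min(batch_size, n_outliers - len(removed))
        worst = np.argsort(scores)[::-1][:k]  # k highest scores
        removed.extend(remaining[worst].tolist())
        remaining = np.delete(remaining, worst)
    return removed
```

For example, `remove_outliers(X, n_outliers=10, batch_size=5)` removes ten points in two rounds of rescoring instead of ten. A larger `batch_size` means fewer rounds of retraining, at the risk of removing points that would no longer rank highest once the rest of their batch had been removed.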
