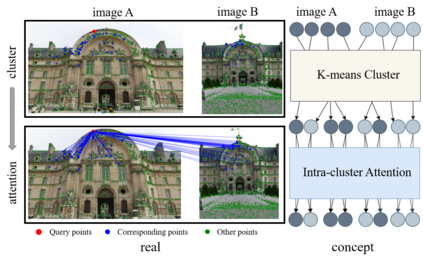

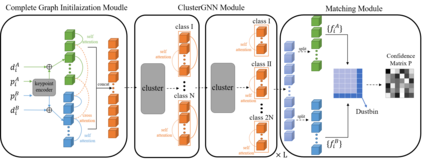

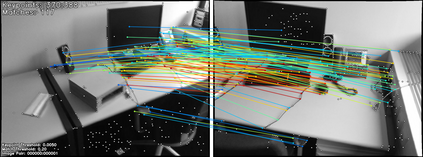

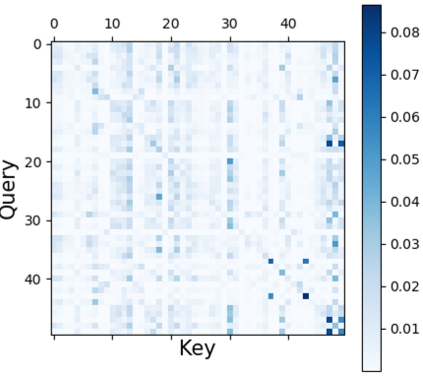

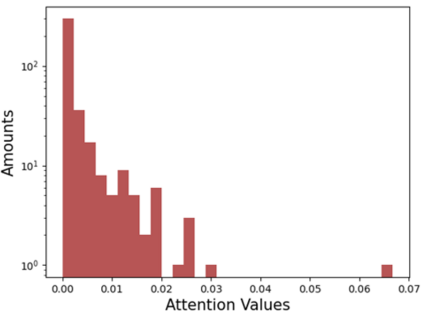

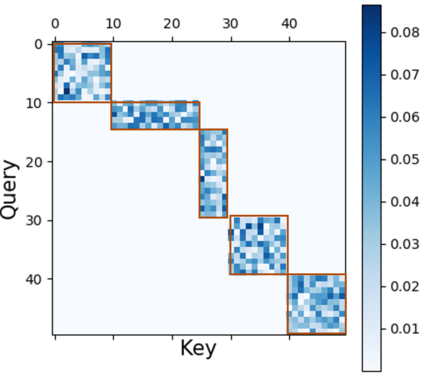

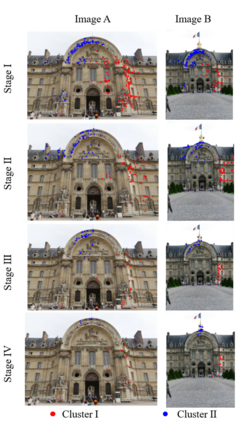

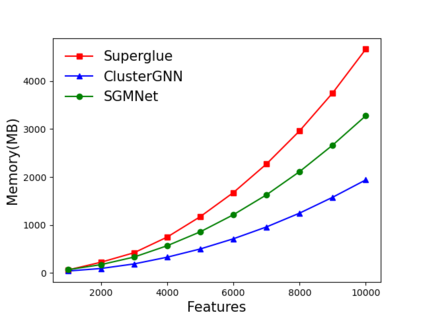

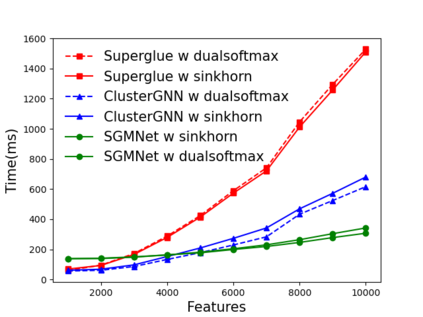

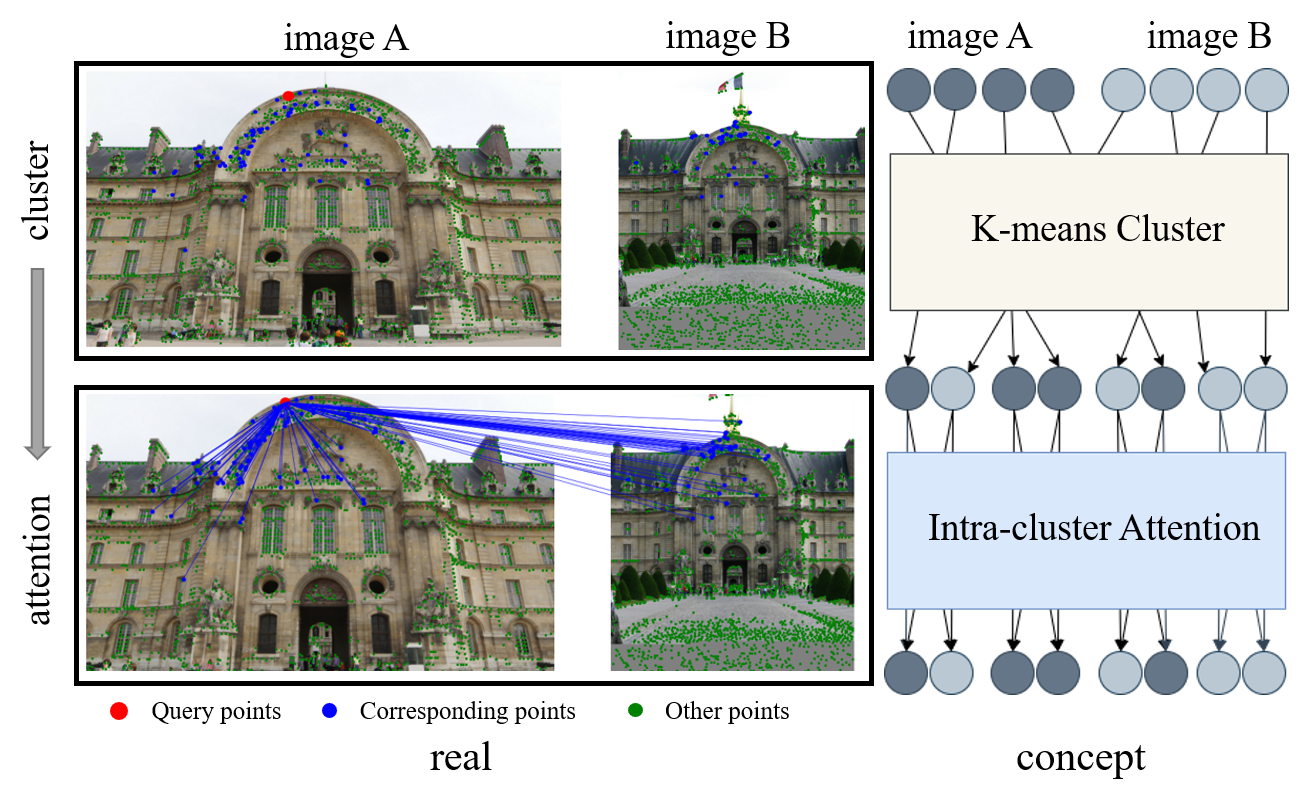

Graph Neural Networks (GNNs) with attention have been successfully applied to learning visual feature matching. However, current methods learn with complete graphs, resulting in quadratic complexity in the number of features. Motivated by a prior observation that self- and cross-attention matrices converge to a sparse representation, we propose ClusterGNN, an attentional GNN architecture which operates on clusters for learning the feature matching task. Using a progressive clustering module, we adaptively divide keypoints into different subgraphs to reduce redundant connectivity, and employ a coarse-to-fine paradigm to mitigate misclassification within images. Our approach yields a 59.7% reduction in runtime and a 58.4% reduction in memory consumption for dense detection, compared to current state-of-the-art GNN-based matching, while achieving competitive performance on various computer vision tasks.
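To make the complexity argument concrete, the following minimal sketch compares the number of pairwise attention scores computed over a complete graph with the number computed when attention is restricted to keypoints in the same cluster. It is an illustration under stated assumptions, not the ClusterGNN implementation: the function names `attend` and `clustered_attend`, the toy sizes, and the fixed round-robin cluster assignment are all hypothetical, whereas the abstract describes a progressive clustering module that assigns keypoints adaptively. Pure Python is used only to keep the example self-contained.

```python
import math
import random


def attend(feats):
    """Scaled dot-product self-attention over all pairs in `feats`.

    Returns the updated features and the number of pairwise scores computed.
    """
    d = len(feats[0])
    out = []
    for q in feats:
        # One score per (query, key) pair: N scores per query, N^2 in total.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in feats]
        m = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * v[j] for w, v in zip(weights, feats)) / total
                    for j in range(d)])
    return out, len(feats) ** 2


def clustered_attend(feats, labels):
    """Self-attention restricted to keypoints that share a cluster label."""
    out = [None] * len(feats)
    n_scores = 0
    for c in sorted(set(labels)):
        idx = [i for i, label in enumerate(labels) if label == c]
        sub_out, cost = attend([feats[i] for i in idx])
        for i, row in zip(idx, sub_out):
            out[i] = row
        n_scores += cost
    return out, n_scores


random.seed(0)
n, d, k = 64, 8, 4  # toy sizes, chosen only for illustration
feats = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]
labels = [i % k for i in range(n)]  # hypothetical fixed assignment, not learned

_, full_cost = attend(feats)
out, cluster_cost = clustered_attend(feats, labels)
print(full_cost, cluster_cost)  # prints "4096 1024"
```

With N keypoints split into k equal clusters, the score count drops from N² to k·(N/k)² = N²/k, which is the kind of saving that motivates operating on subgraphs. The runtime and memory reductions reported in the abstract come from the authors' own measurements and are not reproduced by this sketch.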
