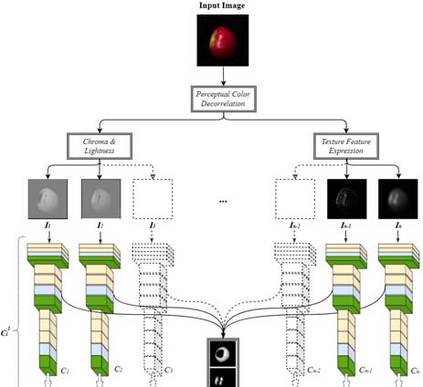

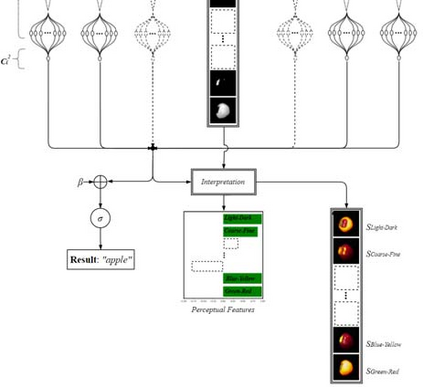

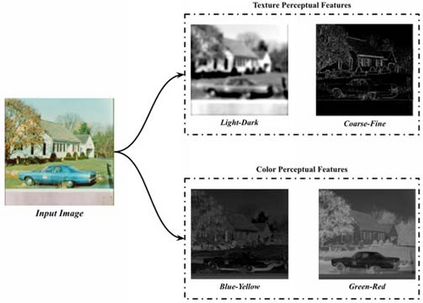

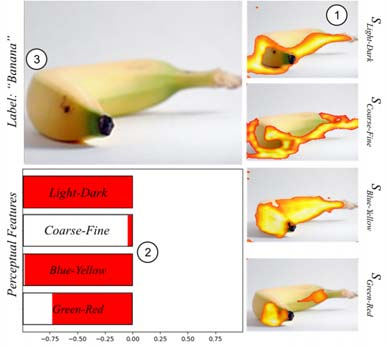

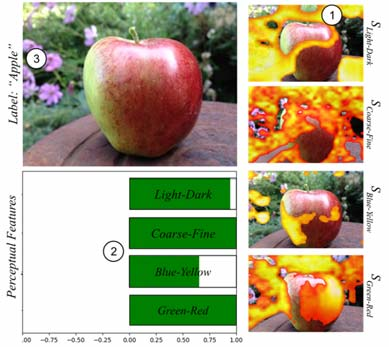

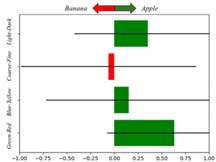

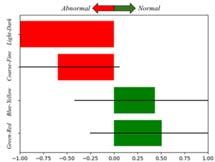

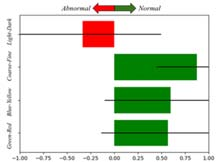

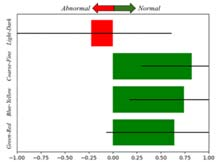

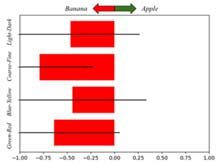

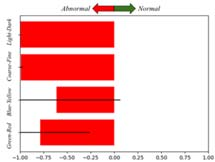

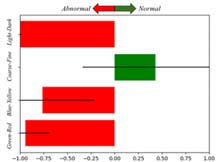

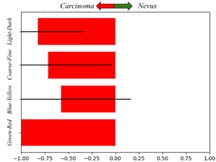

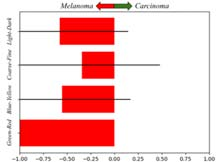

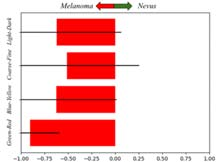

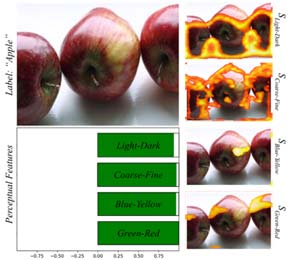

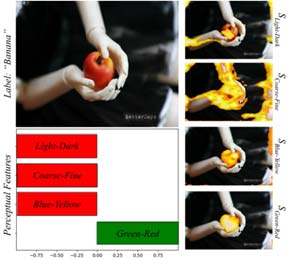

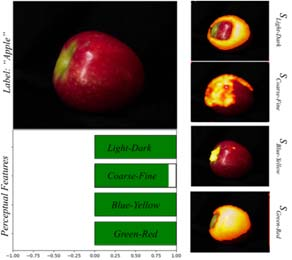

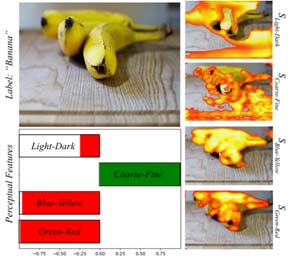

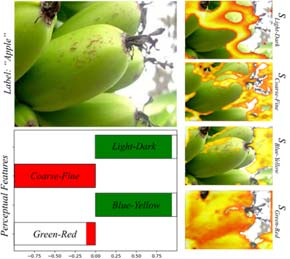

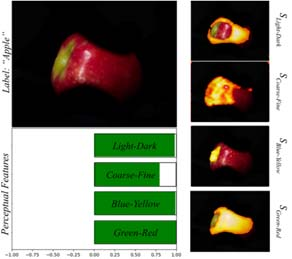

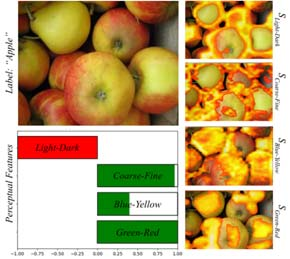

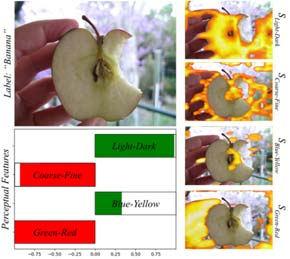

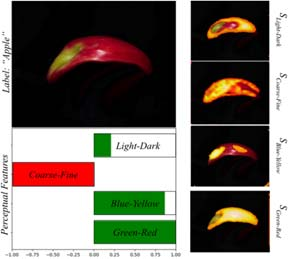

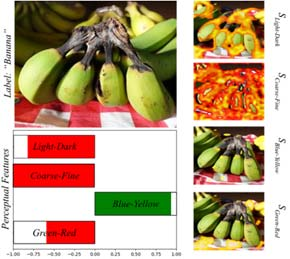

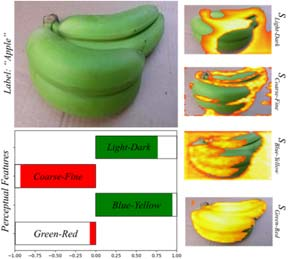

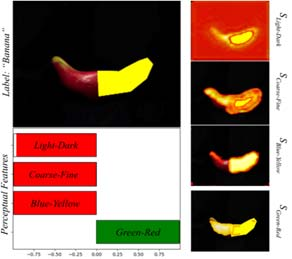

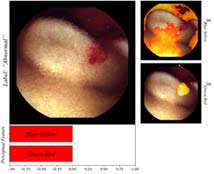

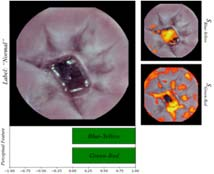

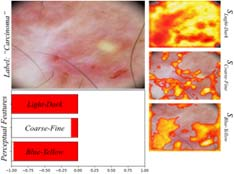

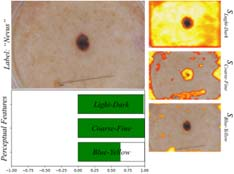

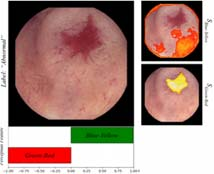

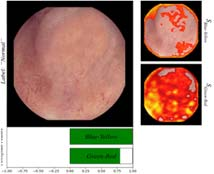

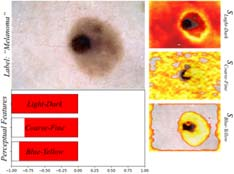

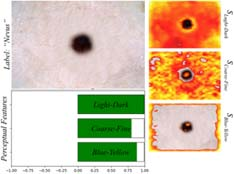

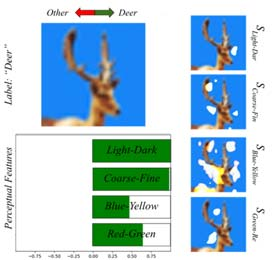

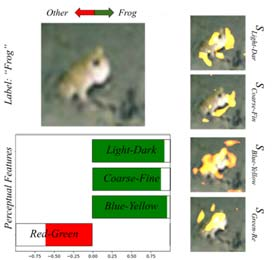

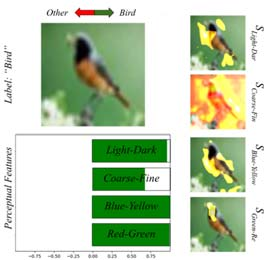

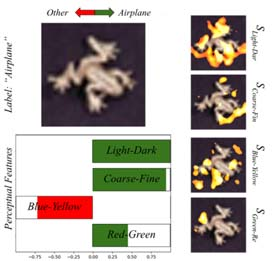

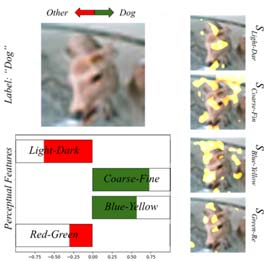

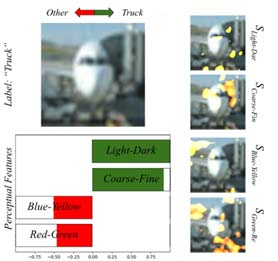

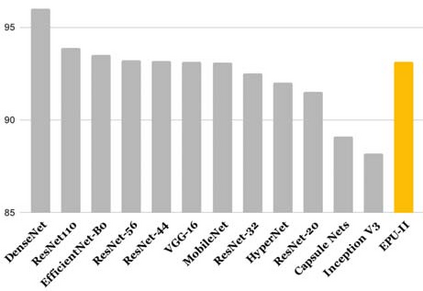

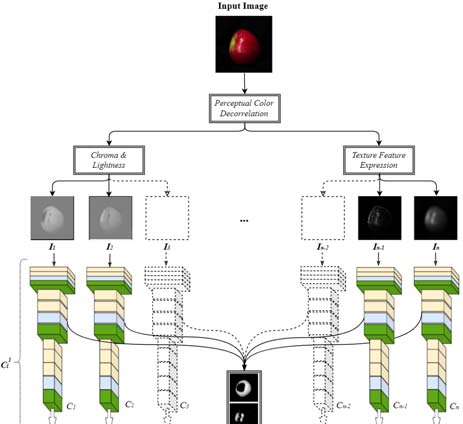

The adoption of Convolutional Neural Network (CNN) models in high-stakes domains is hindered by their inability to meet society's demand for transparency in decision-making. A growing number of methodologies have emerged for developing CNN models that are interpretable by design. However, such models cannot provide interpretations that accord with human perception while maintaining competitive performance. In this paper, we tackle these challenges with a novel, general framework for instantiating inherently interpretable CNN models, named E Pluribus Unum Interpretable CNN (EPU-CNN). An EPU-CNN model consists of CNN sub-networks, each of which receives a different representation of the input image expressing a perceptual feature, such as color or texture. The output of an EPU-CNN model consists of the classification prediction and its interpretation, expressed as the relative contributions of the perceptual features in different regions of the input image. EPU-CNN models have been extensively evaluated on various publicly available datasets, as well as on a contributed benchmark dataset. Medical datasets are used to demonstrate the applicability of EPU-CNN to risk-sensitive decisions in medicine. The experimental results indicate that EPU-CNN models can achieve classification performance comparable to or better than that of other CNN architectures while providing human-perceivable interpretations.
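To make the structure described above concrete, the following is a minimal sketch rather than the authors' implementation. It assumes that each sub-network reduces its perceptual feature representation to a single signed score and that these scores are combined additively before a sigmoid; the abstract states neither detail. The names `make_subnetwork` and `predict_with_interpretation` are hypothetical, the toy linear scorers stand in for real CNN sub-networks, and the region-level part of the interpretation is omitted.

```python
import math
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-in for a CNN sub-network: maps one perceptual feature
# representation (here a flat list of floats) to a scalar score in (-1, 1).
SubNetwork = Callable[[List[float]], float]


def make_subnetwork(weights: List[float]) -> SubNetwork:
    """Toy linear scorer squashed by tanh; a real model would use a CNN here."""
    def score(representation: List[float]) -> float:
        return math.tanh(sum(w * x for w, x in zip(weights, representation)))
    return score


def predict_with_interpretation(
    subnetworks: Dict[str, SubNetwork],
    representations: Dict[str, List[float]],
    bias: float = 0.0,
) -> Tuple[float, Dict[str, float]]:
    """Return (probability of the positive class, per-feature contributions)."""
    contributions = {
        name: net(representations[name]) for name, net in subnetworks.items()
    }
    # Assumption: contributions are summed, then squashed by a sigmoid.
    logit = bias + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    return probability, contributions


# Illustrative weights and inputs only; they are not taken from the paper.
subnetworks = {
    "color": make_subnetwork([0.8, -0.2]),
    "texture": make_subnetwork([-0.5, 0.1]),
}
representations = {"color": [1.0, 0.5], "texture": [0.4, 0.3]}

probability, contributions = predict_with_interpretation(
    subnetworks, representations
)
for name, value in contributions.items():
    print(f"{name}: {value:+.3f}")
print(f"probability: {probability:.3f}")
```

Under this assumption, the sign and magnitude of each entry in `contributions` indicate how strongly that perceptual feature pushes the prediction toward or away from the positive class, which is the kind of relative contribution the abstract refers to.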
