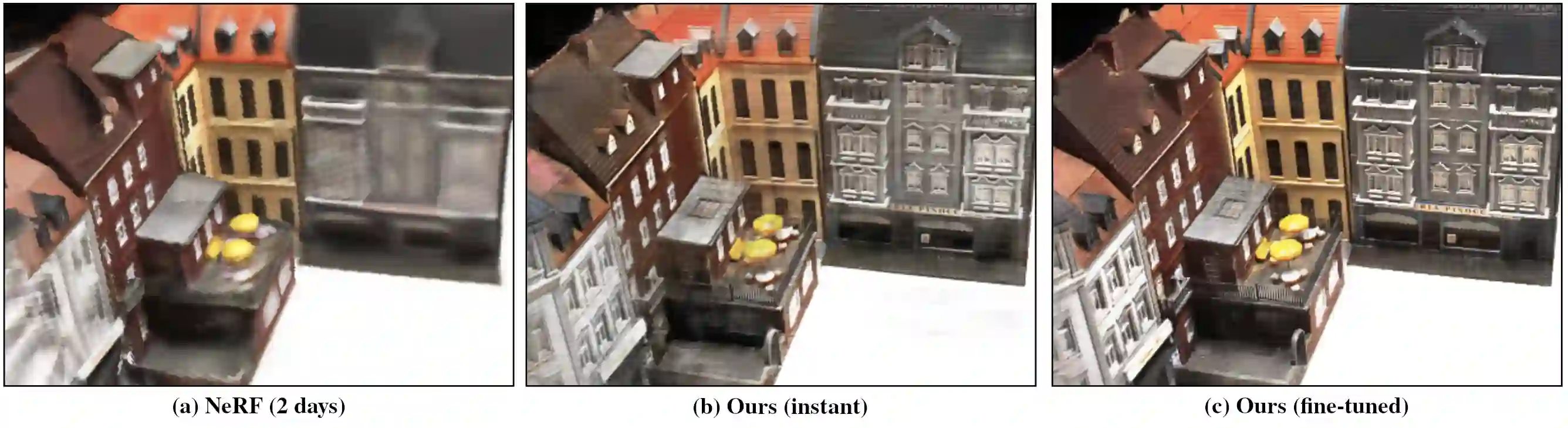

Recent neural view synthesis methods have achieved impressive quality and realism, surpassing classical pipelines which rely on multi-view reconstruction. State-of-the-Art methods, such as NeRF, are designed to learn a single scene with a neural network and require dense multi-view inputs. Testing on a new scene requires re-training from scratch, which takes 2-3 days. In this work, we introduce Stereo Radiance Fields (SRF), a neural view synthesis approach that is trained end-to-end, generalizes to new scenes, and requires only sparse views at test time. The core idea is a neural architecture inspired by classical multi-view stereo methods, which estimates surface points by finding similar image regions in stereo images. In SRF, we predict color and density for each 3D point given an encoding of its stereo correspondence in the input images. The encoding is implicitly learned by an ensemble of pair-wise similarities -- emulating classical stereo. Experiments show that SRF learns structure instead of overfitting on a scene. We train on multiple scenes of the DTU dataset and generalize to new ones without re-training, requiring only 10 sparse and spread-out views as input. We show that 10-15 minutes of fine-tuning further improve the results, achieving significantly sharper, more detailed results than scene-specific models. The code, model, and videos are available at https://virtualhumans.mpi-inf.mpg.de/srf/.

翻译:最近神经观点合成方法取得了令人印象深刻的质量和现实主义,超越了依赖多视图重建的古典管道。NeRF等最先进的方法旨在学习神经网络的单一场景,需要密集的多视图投入。新场景的测试需要从头到尾的再培训,这需要2至3天的时间。在这项工作中,我们引入了神经观点合成方法,即神经观点合成方法,经过培训的端到端,向新场景概括,在测试时只需要很少的视角。核心理念是一个由古典多视图立体法启发的神经结构,它通过在立体图像中找到相似的图像区域来估计表面点。在SRF中,我们预测每个3D点的颜色和密度需要从头到脚,这需要从输入图像中的立体对应编码编码编码编码中进行重新训练。这种编码隐含着一种配对式的相似之处 -- 模拟古典立体立体。实验显示SRFFS在场景上学习结构而不是过度适应。我们训练多处的多处多处的多处多处多处多处多处多处数据集和一般化,在新处进行新的显示,而无需再分析,只需要显示10处的图像中的结果,只需要显示10处的模型,只需要在10处的精确地显示,只需要在10处的精确的模制模制模制成。只需要的模型,只需的精确的模型,只需要在10处的精确的模型,只需要在10处的精确的精确的模型,只需要对10处的精确的精确的输入。