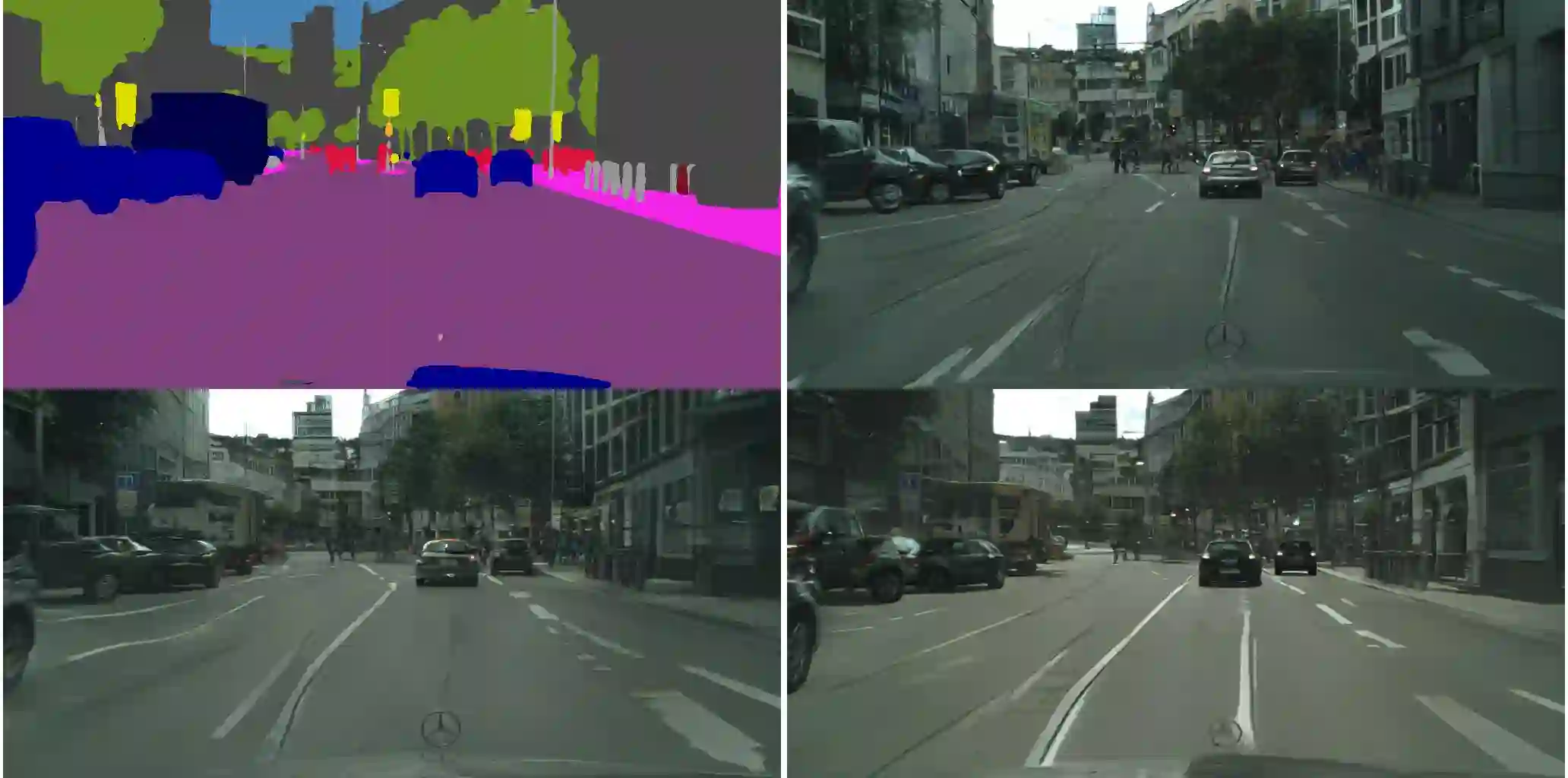

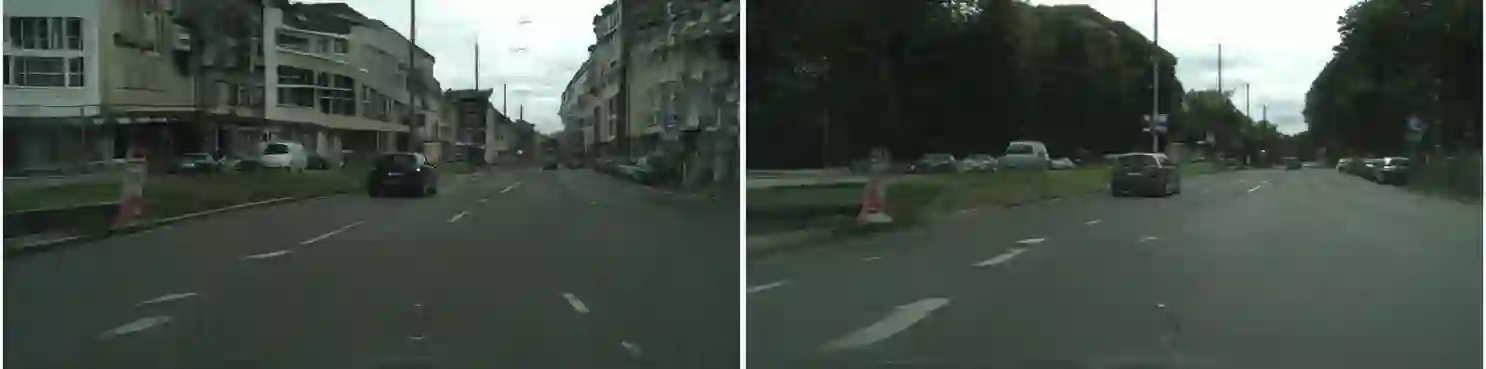

We study the problem of video-to-video synthesis, whose goal is to learn a mapping function from an input source video (e.g., a sequence of semantic segmentation masks) to an output photorealistic video that precisely depicts the content of the source video. While its image counterpart, the image-to-image synthesis problem, is a popular topic, the video-to-video synthesis problem is less explored in the literature. Without understanding temporal dynamics, directly applying existing image synthesis approaches to an input video often results in temporally incoherent videos of low visual quality. In this paper, we propose a novel video-to-video synthesis approach under the generative adversarial learning framework. Through carefully-designed generator and discriminator architectures, coupled with a spatio-temporal adversarial objective, we achieve high-resolution, photorealistic, temporally coherent video results on a diverse set of input formats including segmentation masks, sketches, and poses. Experiments on multiple benchmarks show the advantage of our method compared to strong baselines. In particular, our model is capable of synthesizing 2K resolution videos of street scenes up to 30 seconds long, which significantly advances the state-of-the-art of video synthesis. Finally, we apply our approach to future video prediction, outperforming several state-of-the-art competing systems.

翻译:我们研究的是视频到视频合成问题,其目的是从一个输入源视频(例如,语义截面面遮罩序列)到一个能准确描述源视频内容的输出摄影现实视频中学习一个绘图功能。虽然图像对像合成问题是一个受欢迎的主题,但视频到视频合成问题在文献中探索较少。不理解时间动态,直接将现有图像合成方法应用于一个输入视频,结果往往是在视觉质量低且时间不连贯的视频中出现。在本文中,我们提议了一种新型视频到视频的合成方法,在基因对抗性对立学习框架内,通过精心设计的生成器和制导师结构,加上一个片面对立的对立目标,我们在多种输入格式上取得了高分辨率、摄影真实性、时间一致的视频结果,包括截面蒙、草图和图像。对多种基准的实验显示我们方法相对于强度基线的优势。特别是,我们的模型能够将2K分辨率视频合成2的视频合成方法在基因对抗性对抗性对抗性对抗性学习框架内进行。我们最后30秒里将几度的街头图像的动态预视系统快速应用到未来状态。