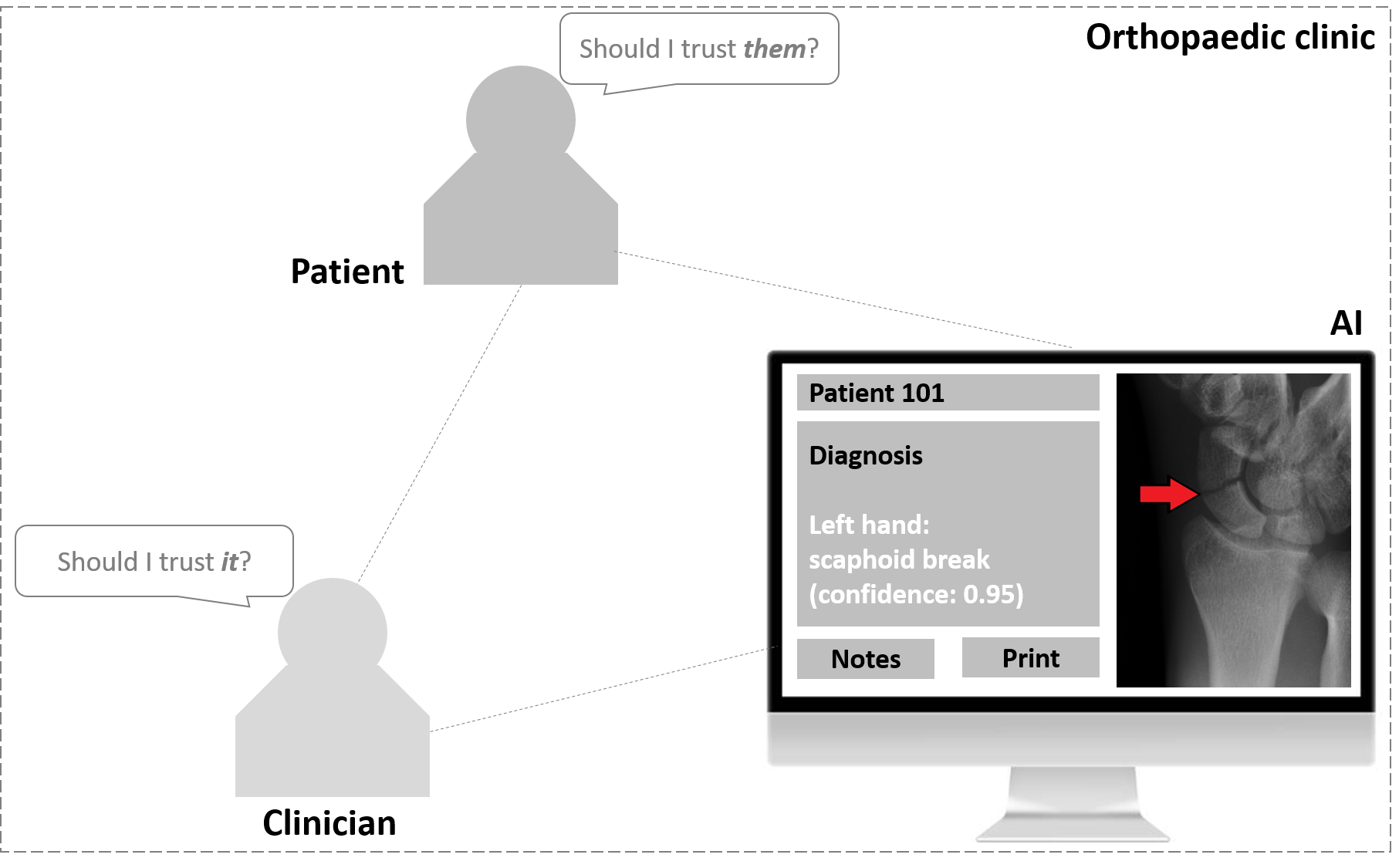

Building trust in AI-based systems is deemed critical for their adoption and appropriate use. Recent research has thus attempted to evaluate how various attributes of these systems affect user trust. However, limitations in how trust in AI is defined and measured have hampered progress in the field, producing results that are inconsistent or difficult to compare. In this work, we provide an overview of the main limitations in defining and measuring trust in AI. We focus on attempts to assign trust in AI a numerical value and on how useful such measurements are for informing the design of real-world human-AI interactions. Taking a socio-technical system perspective on AI, we explore two distinct approaches to tackling these challenges. We provide actionable recommendations on how these approaches can be implemented in practice and how they can inform the design of human-AI interactions. We thereby aim to provide a starting point for researchers and designers to re-evaluate the current focus on trust in AI, improving the alignment between what empirical research paradigms can offer and what real-world human-AI interactions require.