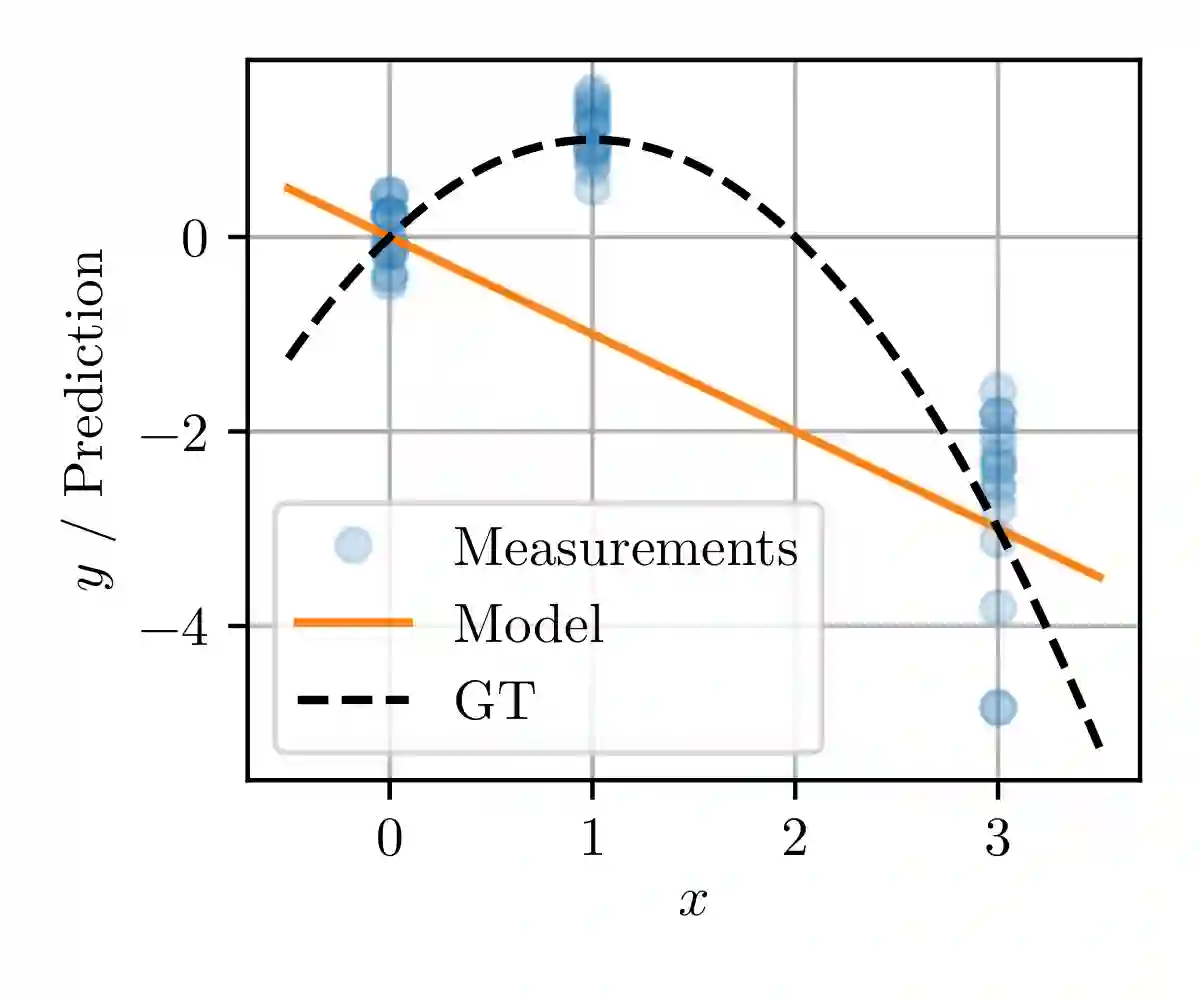

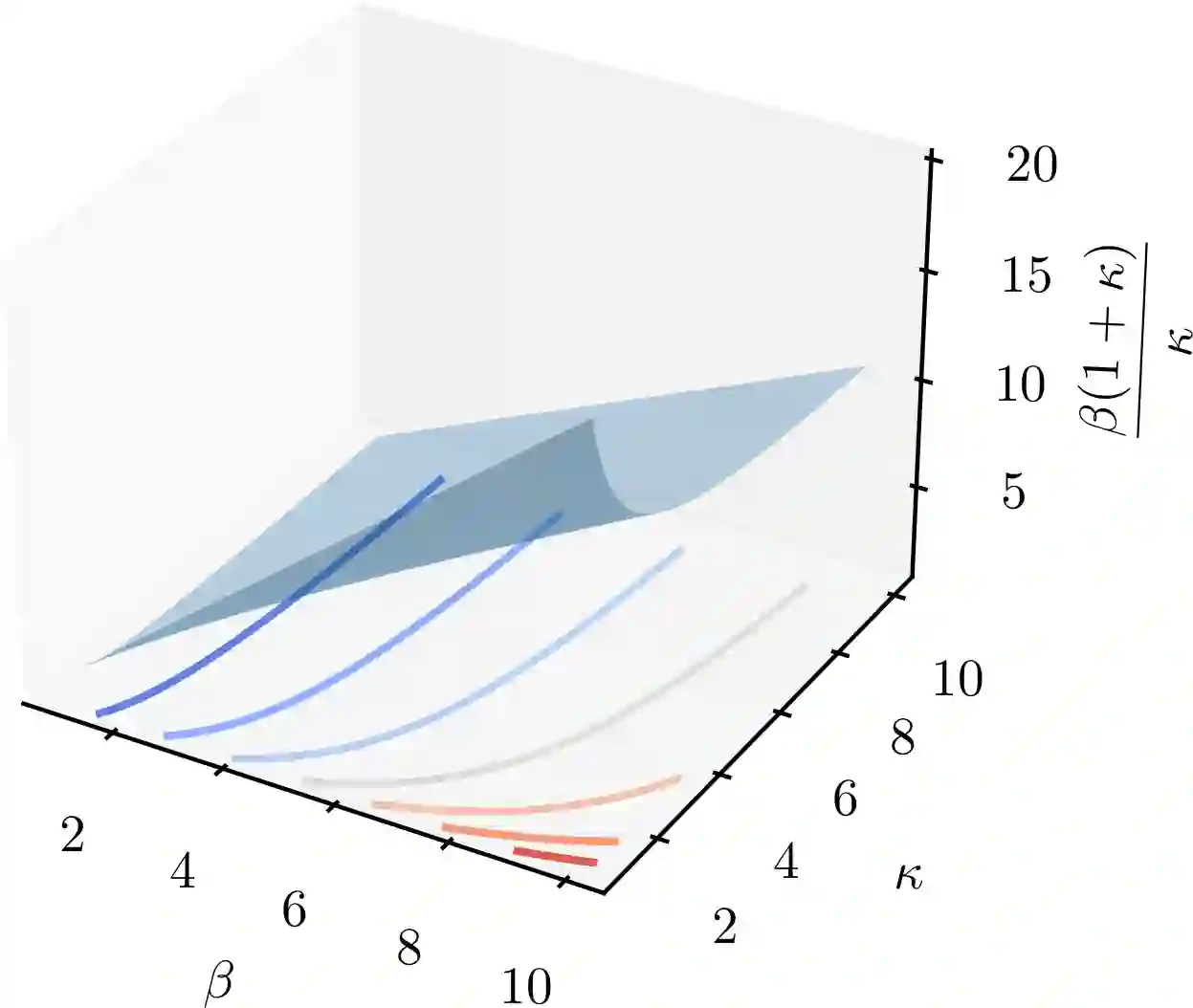

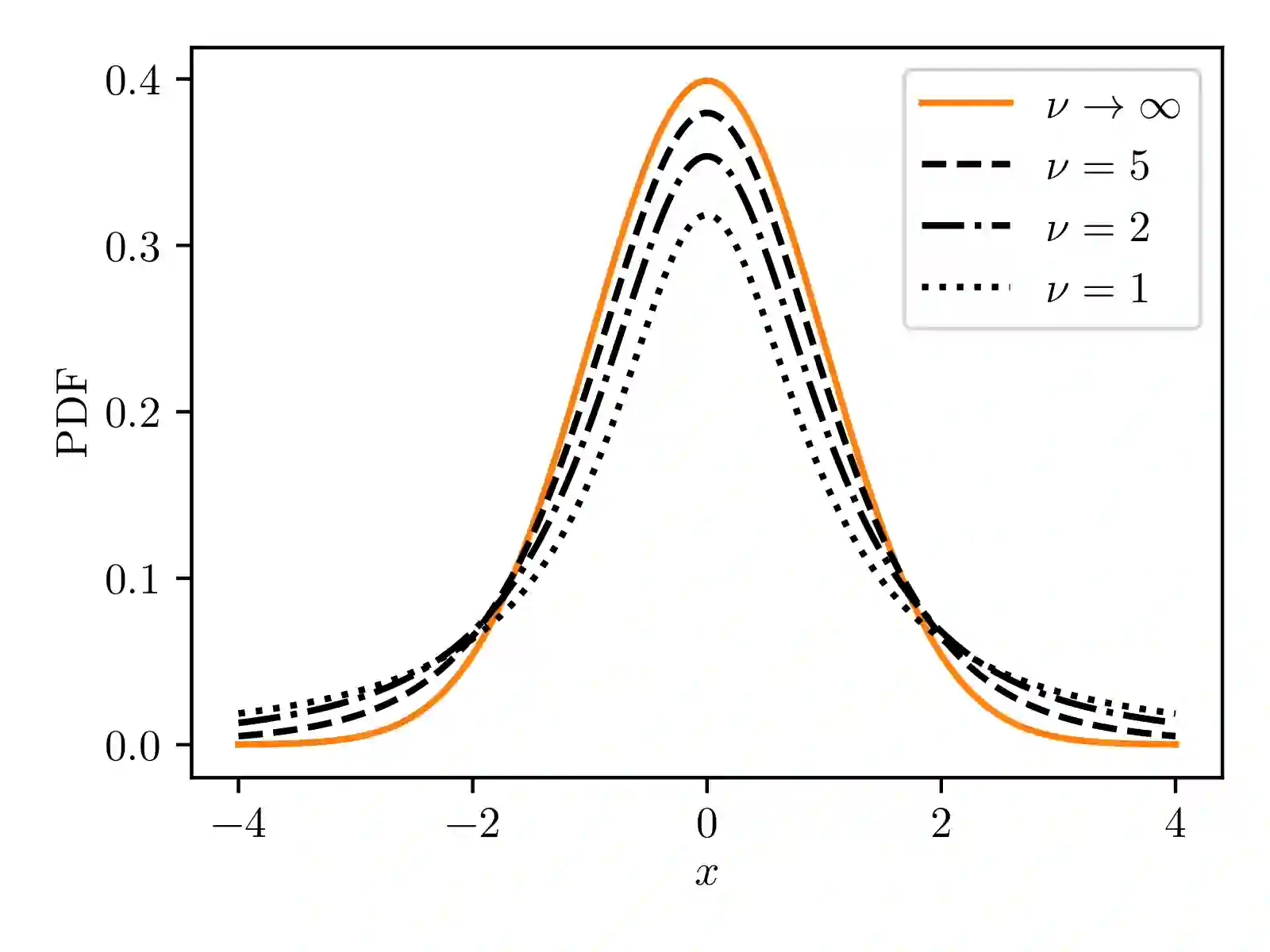

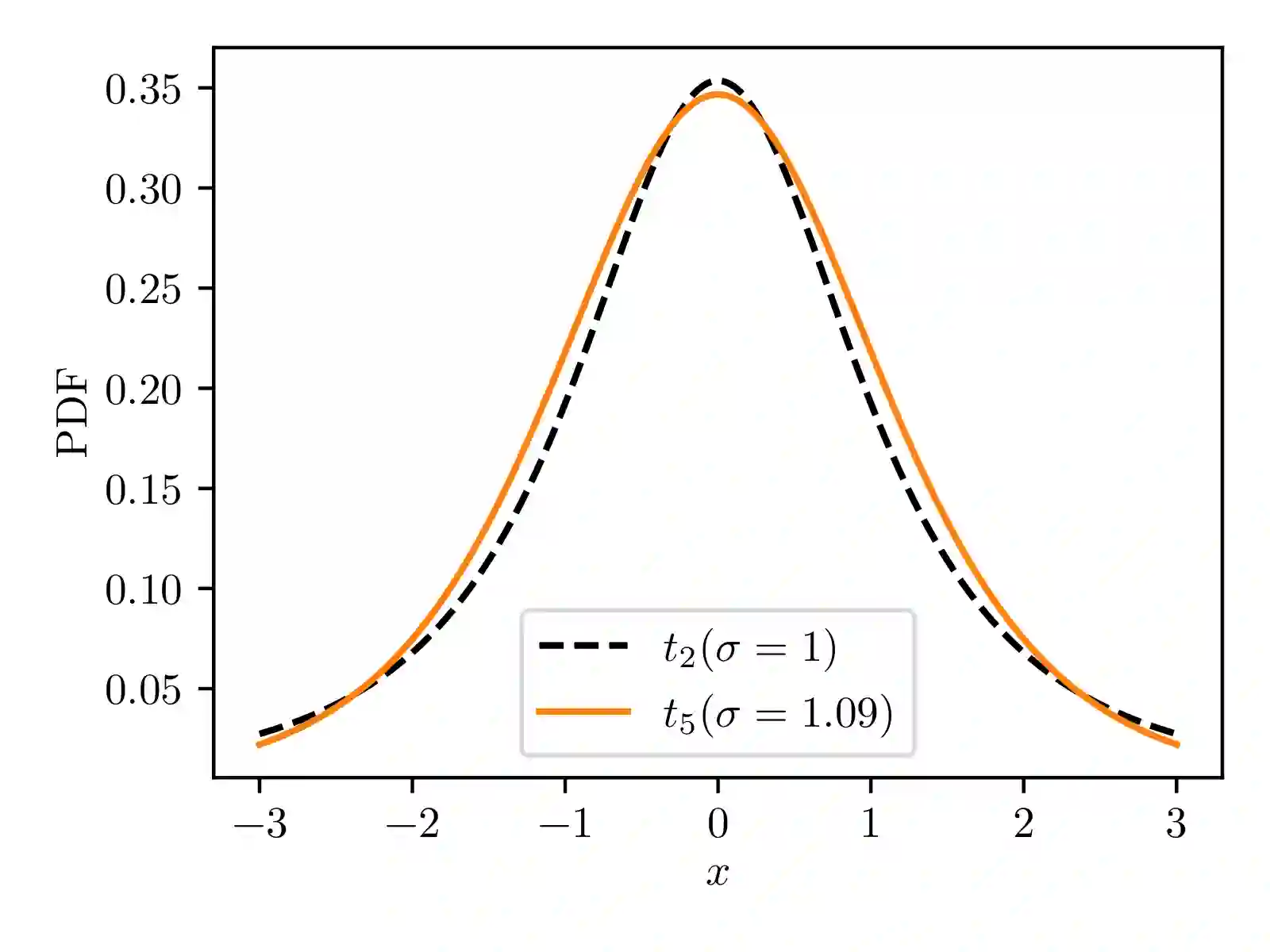

There is a significant need for principled uncertainty reasoning in machine learning systems as they are increasingly deployed in safety-critical domains. A new approach based on uncertainty-aware neural networks shows promise over traditional deterministic methods, yet several important gaps remain in the theory and implementation of these networks. We discuss three issues with a proposed solution for extracting aleatoric and epistemic uncertainties from regression-based neural networks. That proposal derives its technique by placing evidential priors over the original Gaussian likelihood function and training the neural network to infer the hyperparameters of the evidential distribution. Doing so allows both uncertainties to be extracted simultaneously for univariate regression tasks, without sampling or the use of out-of-distribution data. We describe the outstanding issues in detail, provide a possible solution, and generalize the technique to the multivariate case.
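To make the idea of reading both uncertainties from the inferred hyperparameters concrete, the following is a minimal sketch. It assumes the evidential prior is a Normal-Inverse-Gamma (NIG) distribution with hyperparameters `gamma`, `nu`, `alpha`, and `beta`, as in the commonly cited univariate formulation of deep evidential regression; the abstract itself does not name the prior or these symbols. The class `NIGParams` and the function `evidential_uncertainties` are hypothetical names introduced only for illustration. Since the abstract raises open issues with this extraction, the sketch shows the original proposal's formulas and not the corrected solution.

```python
from dataclasses import dataclass


@dataclass
class NIGParams:
    """Assumed Normal-Inverse-Gamma hyperparameters output by the network."""
    gamma: float  # predicted mean of the target
    nu: float     # virtual observations for the mean, must be > 0
    alpha: float  # shape of the inverse-gamma on the variance, must be > 1
    beta: float   # scale of the inverse-gamma on the variance, must be > 0


def evidential_uncertainties(p: NIGParams) -> tuple[float, float, float]:
    """Return (prediction, aleatoric, epistemic) under the assumed NIG prior.

    aleatoric = E[sigma^2] = beta / (alpha - 1)
    epistemic = Var[mu]    = beta / (nu * (alpha - 1))
    """
    if p.nu <= 0 or p.alpha <= 1 or p.beta <= 0:
        raise ValueError("require nu > 0, alpha > 1, beta > 0")
    aleatoric = p.beta / (p.alpha - 1.0)
    epistemic = p.beta / (p.nu * (p.alpha - 1.0))
    return p.gamma, aleatoric, epistemic


# Example: a single forward pass yields all three quantities, no sampling needed.
prediction, aleatoric, epistemic = evidential_uncertainties(
    NIGParams(gamma=0.5, nu=2.0, alpha=3.0, beta=4.0)
)
```

Under these assumed formulas, a single set of network outputs yields the prediction and both uncertainty estimates in closed form, which is what removes the need for sampling or out-of-distribution data.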
