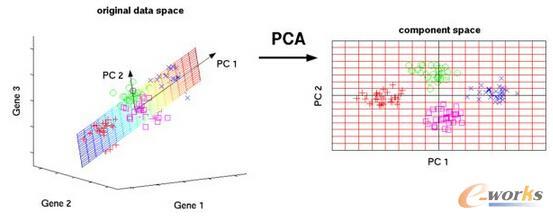

This paper proposes an extension of principal component analysis to Gaussian process (GP) posteriors, denoted GP-PCA. Because GP-PCA estimates a low-dimensional space of GP posteriors, it can be used for meta-learning, a framework that improves performance on target tasks by estimating the structure of a set of tasks. The challenge is how to define a structure, such as a coordinate system and a divergence, on a set of GPs whose parameters are infinite-dimensional. In this study, we reduce the infinite-dimensional GP problem to a finite-dimensional one within the information-geometric framework by considering a space of GP posteriors that share the same prior. In addition, we propose an approximation method for GP-PCA based on variational inference and demonstrate the effectiveness of GP-PCA for meta-learning through experiments.

Title: Principal Component Analysis for Gaussian Process Posteriors
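The finite-dimensional reduction mentioned in the abstract can be illustrated with a rough sketch. This is not the paper's GP-PCA: it assumes that every task uses the same zero-mean GP prior with an RBF kernel, restricts each regression posterior to a shared grid `z` so that it becomes a finite-dimensional Gaussian, and then applies ordinary Euclidean PCA to the stacked mean and covariance entries. The paper instead works with information-geometric coordinates and a divergence, which this sketch does not reproduce. All names (`rbf_kernel`, `gp_posterior_on_grid`, `fit_pca`), the synthetic sine tasks, and the hyperparameter values are hypothetical choices made for illustration.

```python
import numpy as np


def rbf_kernel(a, b, lengthscale=0.5):
    """Squared-exponential kernel between two 1-D input arrays (assumed prior)."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / lengthscale) ** 2)


def gp_posterior_on_grid(x, y, z, noise_var=0.05):
    """Exact GP regression posterior restricted to the shared grid z.

    Returns the mean vector and covariance matrix of the posterior at z.
    """
    k_xx = rbf_kernel(x, x) + noise_var * np.eye(len(x))
    k_zx = rbf_kernel(z, x)
    k_zz = rbf_kernel(z, z)
    # Solve once for both the targets and the cross-covariance columns.
    solved = np.linalg.solve(k_xx, np.column_stack([y, k_zx.T]))
    mean = k_zx @ solved[:, 0]
    cov = k_zz - k_zx @ solved[:, 1:]
    return mean, cov


def fit_pca(features, n_components):
    """Ordinary PCA via SVD; a Euclidean stand-in for the paper's method."""
    center = features.mean(axis=0)
    _, _, vt = np.linalg.svd(features - center, full_matrices=False)
    basis = vt[:n_components]
    scores = (features - center) @ basis.T
    return center, basis, scores


rng = np.random.default_rng(0)
z = np.linspace(0.0, 1.0, 8)       # shared grid: every posterior lives here
upper = np.triu_indices(len(z))    # covariance is symmetric, keep upper triangle

features = []
for _ in range(20):                # 20 synthetic tasks (illustrative data only)
    amplitude = rng.normal()
    x = rng.uniform(0.0, 1.0, size=10)
    y = amplitude * np.sin(2.0 * np.pi * x) + 0.1 * rng.normal(size=10)
    mean, cov = gp_posterior_on_grid(x, y, z)
    features.append(np.concatenate([mean, cov[upper]]))
features = np.array(features)

# Each task's posterior is now summarized by two low-dimensional coordinates.
center, basis, scores = fit_pca(features, n_components=2)
```

In a meta-learning setting, the rows of `scores` would play the role of low-dimensional task coordinates. Note that a point reconstructed from this Euclidean subspace is not guaranteed to have a valid (positive semi-definite) covariance, which is one reason a geometry-aware formulation is of interest.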