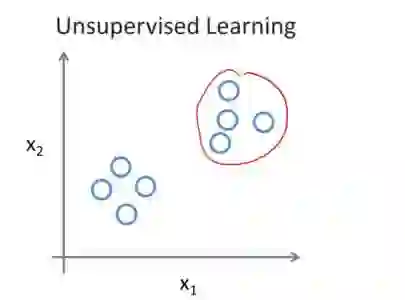

Domain generalization (DG) aims to help models trained on a set of source domains generalize better to unseen target domains. The performance of current DG methods relies largely on sufficient labeled data, which are usually costly or unavailable. Because unlabeled data are far more accessible, we explore how unsupervised learning can help deep models generalize across domains. Specifically, we study a novel generalization problem called unsupervised domain generalization, which aims to learn generalizable models from unlabeled data. Furthermore, we propose a Domain-Irrelevant Unsupervised Learning (DIUL) method to cope with the significant and misleading heterogeneity within unlabeled data and the severe distribution shifts between source and target data. Surprisingly, we observe that DIUL not only counterbalances the scarcity of labeled data but also further strengthens the generalization ability of models when labeled data are sufficient. As a pretraining approach, DIUL is superior to the ImageNet pretraining protocol even when the available data are unlabeled and far fewer in number than ImageNet. Extensive experiments clearly demonstrate the effectiveness of our method compared with state-of-the-art unsupervised learning counterparts.
