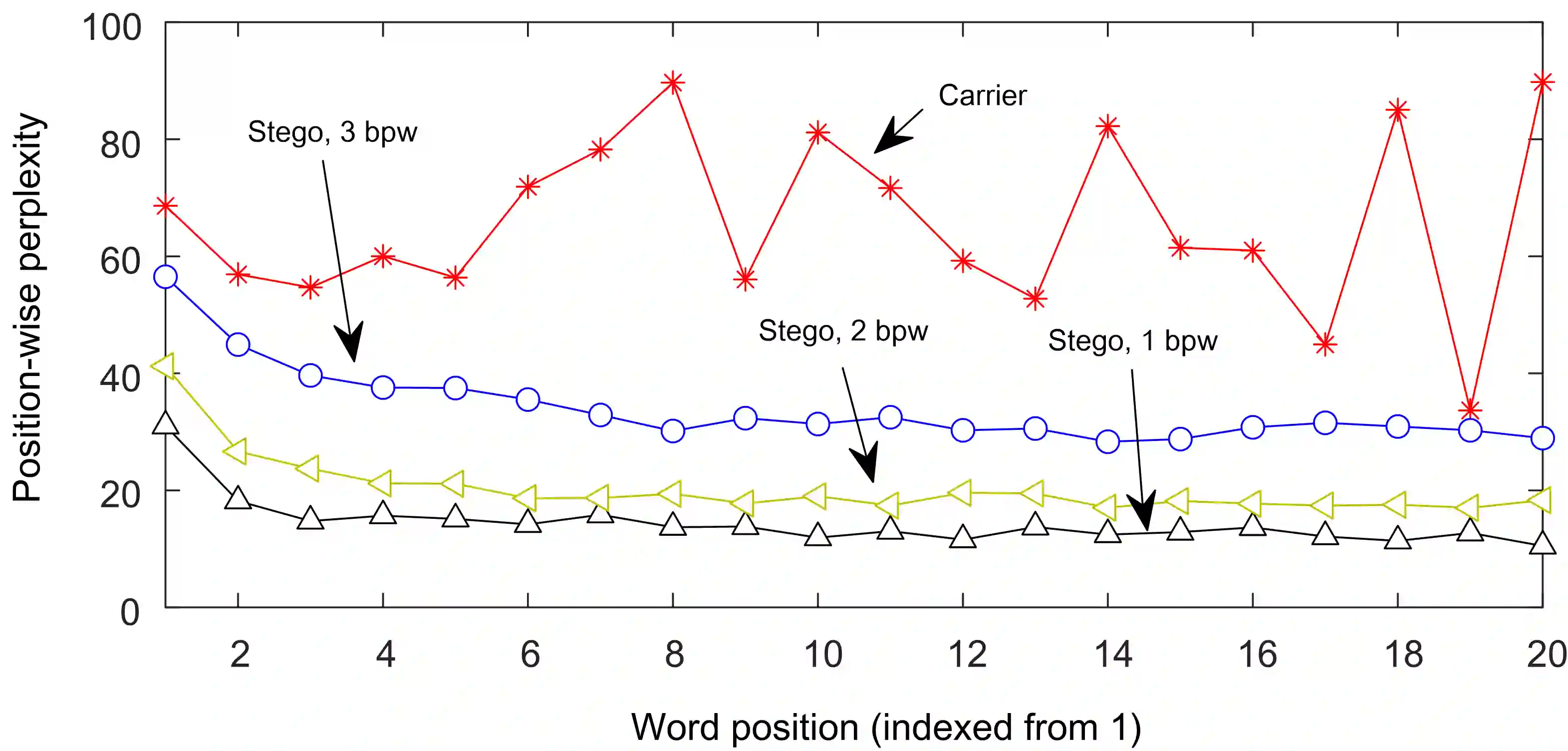

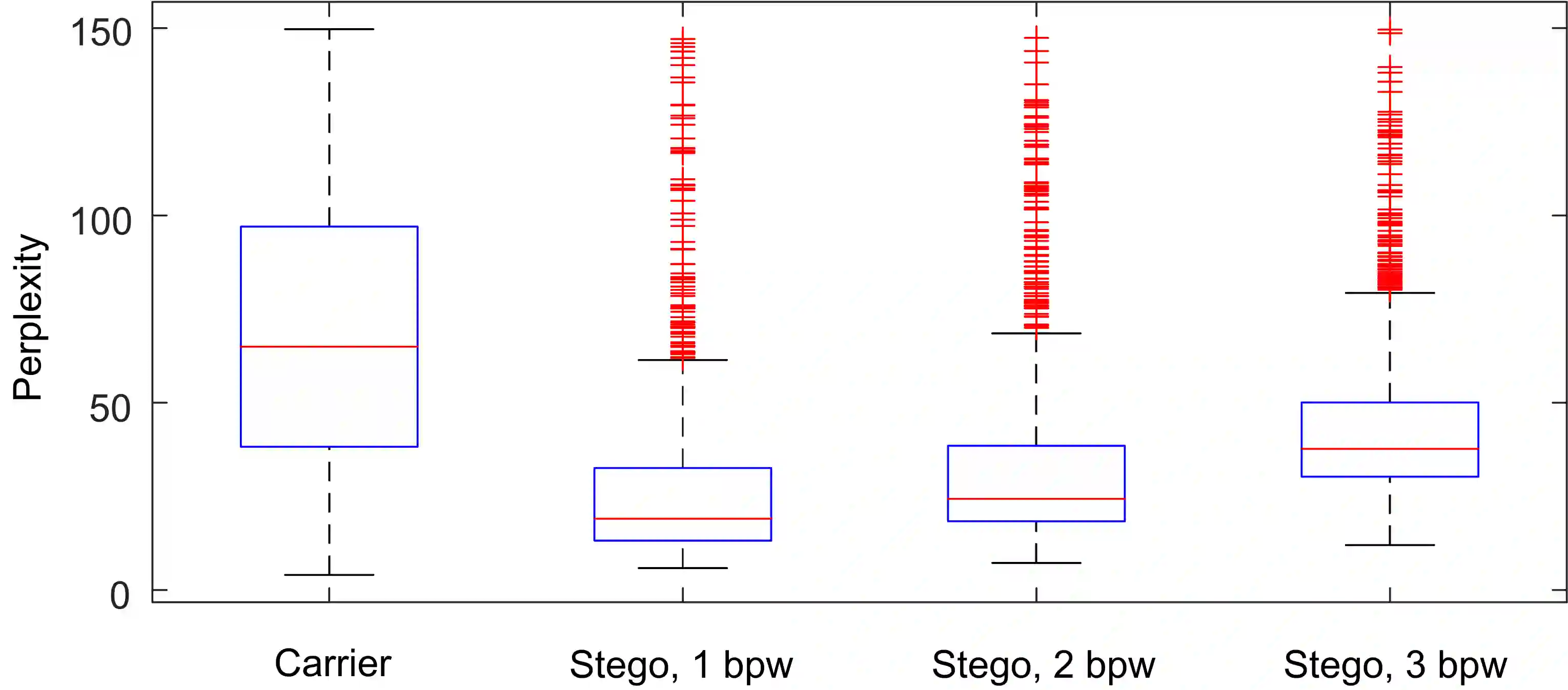

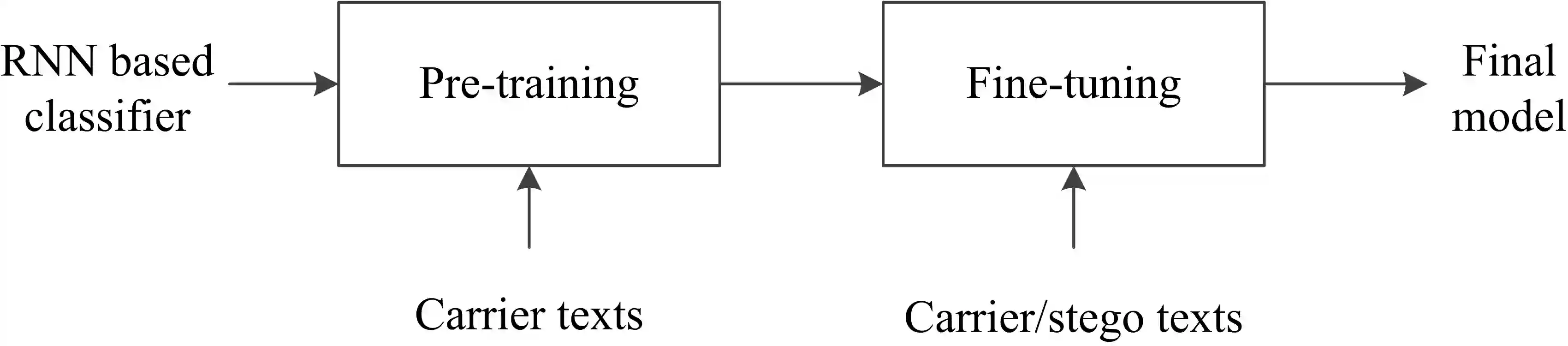

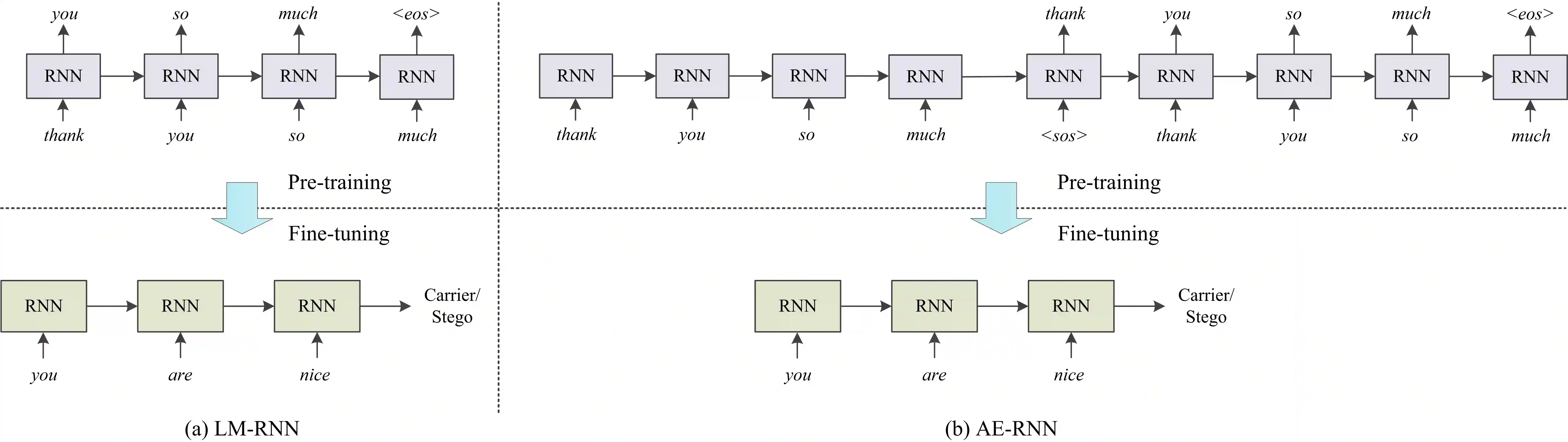

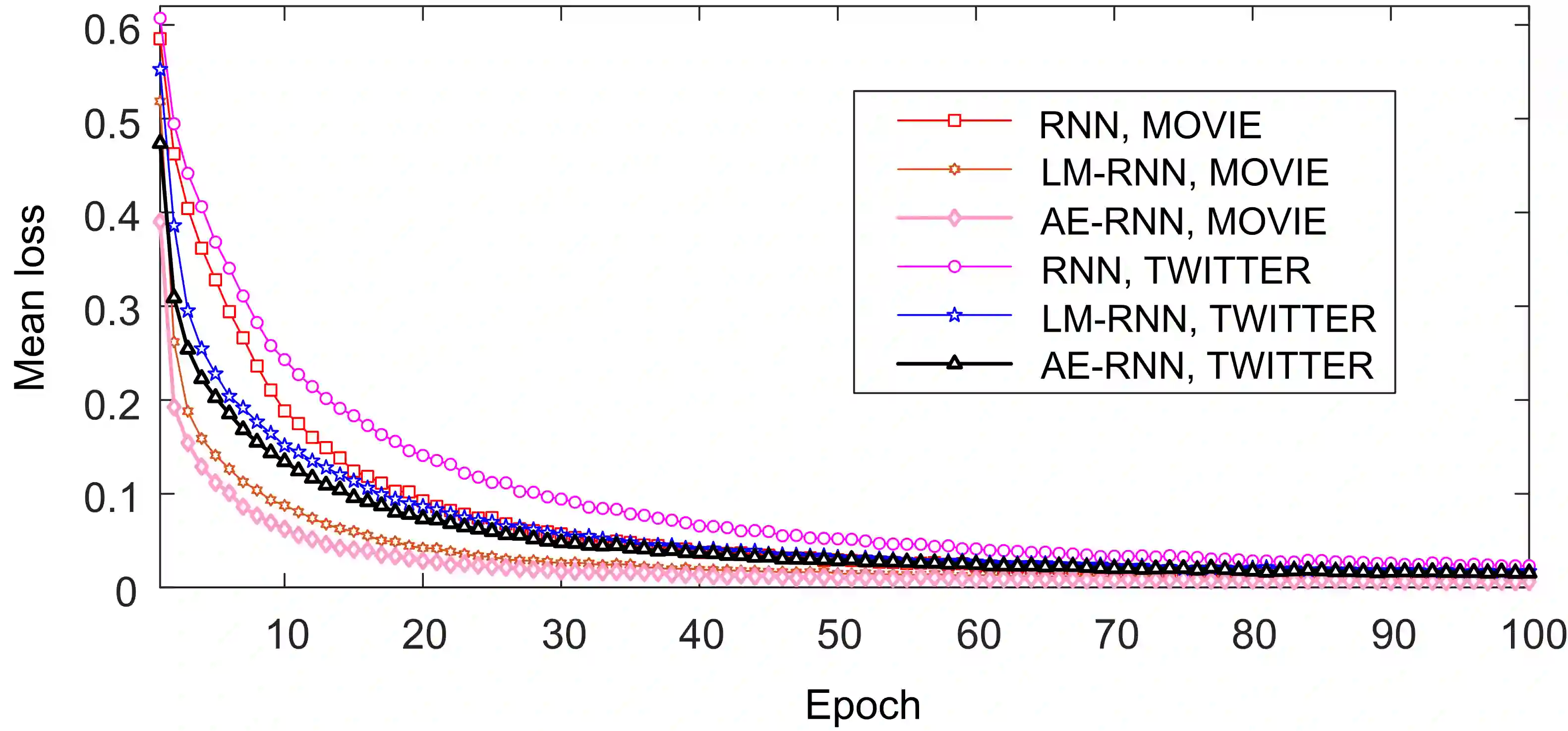

Recent advances in linguistic steganalysis have successively applied CNNs, RNNs, GNNs and other deep learning models for detecting secret information in generative texts. These methods tend to seek stronger feature extractors to achieve higher steganalysis effects. However, we have found through experiments that there actually exists significant difference between automatically generated steganographic texts and carrier texts in terms of the conditional probability distribution of individual words. Such kind of statistical difference can be naturally captured by the language model used for generating steganographic texts, which drives us to give the classifier a priori knowledge of the language model to enhance the steganalysis ability. To this end, we present two methods to efficient linguistic steganalysis in this paper. One is to pre-train a language model based on RNN, and the other is to pre-train a sequence autoencoder. Experimental results show that the two methods have different degrees of performance improvement when compared to the randomly initialized RNN classifier, and the convergence speed is significantly accelerated. Moreover, our methods have achieved the best detection results.

翻译:语言学分析的最新进展先后应用了CNN、RNN、GNN和其他深层学习模型,以探测基因文本中的机密信息。这些方法倾向于寻求更强的特征提取器,以达到更高的分解效果。然而,我们通过实验发现,在单词的有条件概率分布方面,自动生成的分解文本和载体文本之间实际上存在很大差异。这种统计差异可以自然地被生成分解文本的语言模型所捕捉,这促使我们先验地向分类者提供语言模型的知识,以加强分解能力。为此,我们提出了两种方法,在本文中高效地进行语言学分析。其中一种是预演一个基于RNN的语文模型,另一种是预演一个自动编码序列。实验结果显示,与随机初始的 RNN分解器相比,两种方法的性能改进程度不同,而且趋同速度也大大加快。此外,我们的方法已经取得了最佳的检测结果。