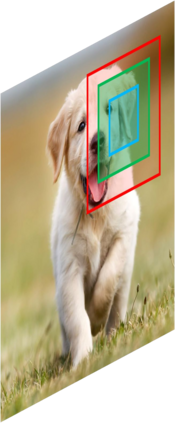

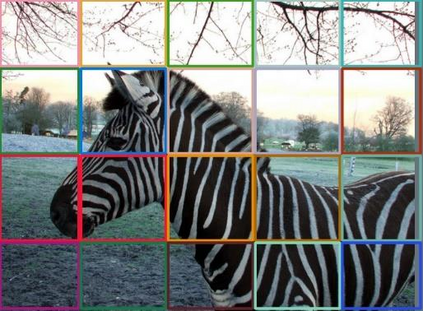

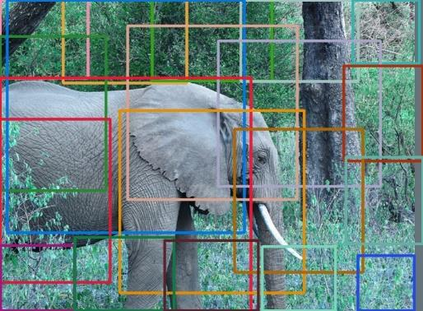

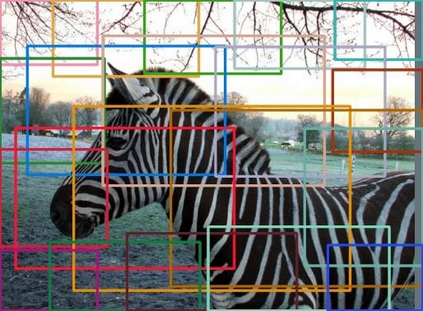

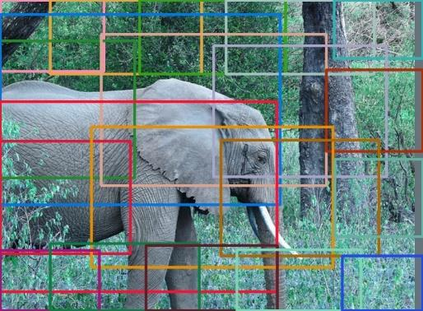

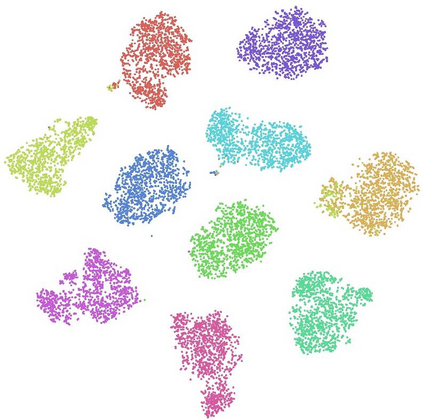

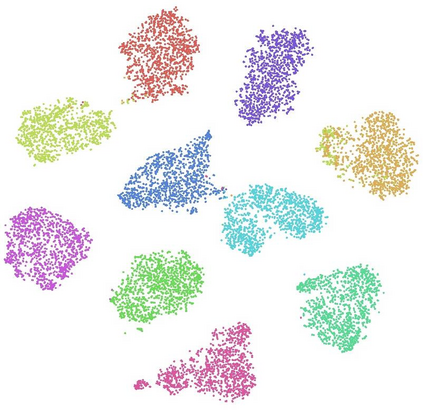

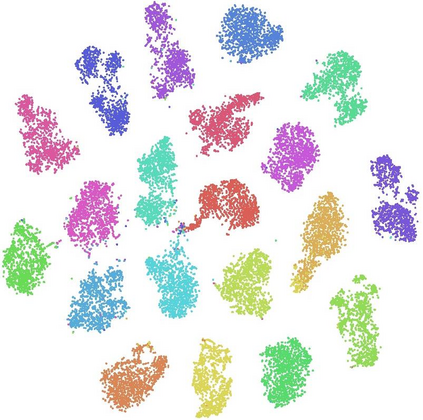

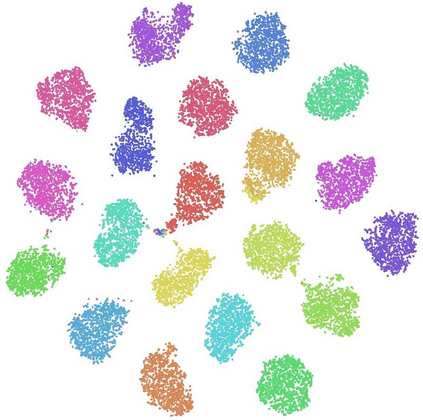

Attention within windows has been widely explored in vision transformers to balance performance, computational complexity, and memory footprint. However, current models adopt a hand-crafted, fixed-size window design, which restricts their capacity to model long-term dependencies and to adapt to objects of different sizes. To address this drawback, we propose **V**aried-**S**ize Window **A**ttention (VSA) to learn adaptive window configurations from data. Specifically, based on the tokens within each default window, VSA employs a window regression module to predict the size and location of the target window, i.e., the attention area from which the key and value tokens are sampled. Because VSA is adopted independently for each attention head, it can model long-term dependencies, capture rich context from diverse windows, and promote information exchange among overlapping windows. VSA is an easy-to-implement module that can replace the window attention in state-of-the-art representative models with minor modifications and negligible extra computational cost, while improving their performance by a large margin, e.g., 1.1% for Swin-T on ImageNet classification. In addition, the performance gain increases when larger images are used for training and testing. Experimental results on more downstream tasks, including object detection, instance segmentation, and semantic segmentation, further demonstrate the superiority of VSA over vanilla window attention in dealing with objects of different sizes. The code will be released at https://github.com/ViTAE-Transformer/ViTAE-VSA.
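The abstract does not spell out the exact form of the window regression module, so the following is only a minimal NumPy sketch of the idea under stated assumptions. It assumes a mean-pooled summary of each default window, a single linear regressor per head (the hypothetical `W_reg`) that predicts log-scales and offsets of the target window, bilinear sampling of a `w × w` grid of key/value tokens inside that target window, and clamping of out-of-bounds points to the border. The function names (`bilinear_sample`, `varied_size_window_attention`) are illustrative and are not the API of the ViTAE-VSA repository.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def bilinear_sample(feat, ys, xs):
    """Bilinearly sample feat (H, W, C) at float coords ys, xs of shape (h, w).
    Assumption: points outside the map are clamped to the border."""
    H, W, _ = feat.shape
    ys, xs = np.clip(ys, 0, H - 1), np.clip(xs, 0, W - 1)
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, H - 1), np.minimum(x0 + 1, W - 1)
    wy, wx = (ys - y0)[..., None], (xs - x0)[..., None]
    top = feat[y0, x0] * (1 - wx) + feat[y0, x1] * wx
    bottom = feat[y1, x0] * (1 - wx) + feat[y1, x1] * wx
    return top * (1 - wy) + bottom * wy

def varied_size_window_attention(feat, w, heads, params):
    """Hypothetical VSA sketch. feat: (H, W, C) with H, W divisible by w and
    C divisible by heads. params: W_q, W_k, W_v of shape (C, C) and
    W_reg of shape (heads, C, 4) (assumed per-head window regressors)."""
    H, W, C = feat.shape
    d = C // heads
    q_map, k_map, v_map = (feat @ params[n] for n in ("W_q", "W_k", "W_v"))
    # Offsets of a w x w grid relative to the window center.
    gy, gx = np.meshgrid(np.arange(w) - (w - 1) / 2,
                         np.arange(w) - (w - 1) / 2, indexing="ij")
    out = np.zeros_like(q_map)
    for r0 in range(0, H, w):
        for c0 in range(0, W, w):
            cy, cx = r0 + (w - 1) / 2, c0 + (w - 1) / 2
            # Summarize the tokens of the default window.
            pooled = feat[r0:r0 + w, c0:c0 + w].reshape(-1, C).mean(axis=0)
            for h in range(heads):  # each head regresses its own target window
                sl = slice(h * d, (h + 1) * d)
                sy, sx, oy, ox = pooled @ params["W_reg"][h]
                # Target window: scaled by exp(s), shifted by o (in window units).
                ys = cy + oy * w + np.exp(sy) * gy
                xs = cx + ox * w + np.exp(sx) * gx
                # Keys/values are sampled inside the target window ...
                k = bilinear_sample(k_map[..., sl], ys, xs).reshape(-1, d)
                v = bilinear_sample(v_map[..., sl], ys, xs).reshape(-1, d)
                # ... while queries still come from the default window.
                q = q_map[r0:r0 + w, c0:c0 + w, sl].reshape(-1, d)
                attn = softmax(q @ k.T / np.sqrt(d))
                out[r0:r0 + w, c0:c0 + w, sl] = (attn @ v).reshape(w, w, d)
    return out
```

In this sketch, zero-initialized regression weights give `exp(0) = 1` scales and zero offsets, so every target window coincides with its default window and the layer reduces to plain window attention. Learned non-zero weights let each head enlarge, shrink, or shift its key/value window, which is how overlapping windows can exchange information.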

翻译:在视觉变压器中广泛探索了窗口内部的注意,以平衡性能、计算复杂性和记忆足迹。然而,当前模型采用手工制作的固定规模窗口设计,限制了其模拟长期依赖性和适应不同大小对象的能力。为解决这一退步,我们提议采用以下方法:Textbf{V}aried-textbf{S} 将窗口的上下文从数据中学习适应性窗口配置。具体地说,基于每个默认窗口的标志, VSA使用一个窗口回归模块来预测目标窗口的大小和位置,即关键和值符号抽样的注意区域。通过对每个关注头独立采用 VSA 来模拟长期依赖性,从不同的窗口中捕捉丰富的背景,促进重叠窗口之间的信息交流。 VSA是一个容易执行的模块,可以取代州级代表模型中的窗口关注度,有轻微的修改和微不足道的超值计算成本,同时通过大边距的S-Vial-dealalal-dealal 测试性能提升其性能,在更大范围内的S-delive-deal-deal-dealal realal exalal exal exalation lagistraudeal 上,在图像中将显示上,在更大性变压值/e-tailal-tailal-traal-tailal-tailal-tailal-tamental-tailal-tamentaltractionalisalisalisalisaltractionxal delisalisalisalxxaltraaltraalxxxxxxalation delvialation 。