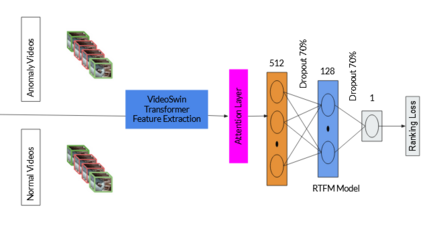

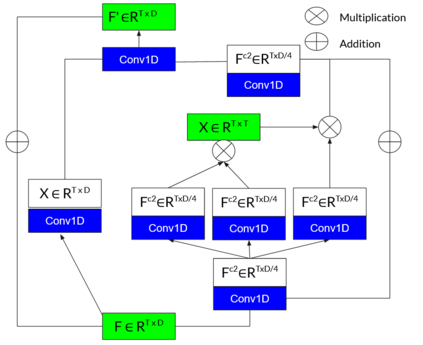

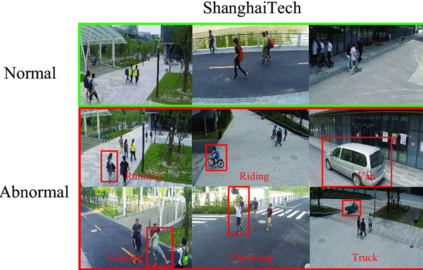

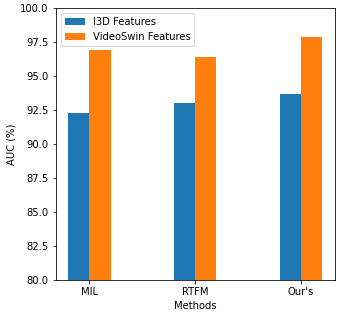

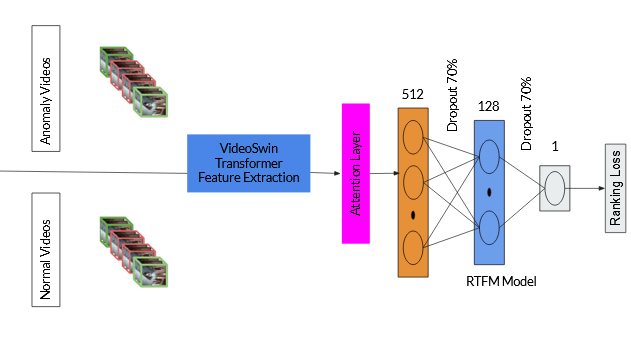

Surveillance footage can capture a wide range of realistic anomalies. This work adopts a weakly supervised strategy that avoids the time-consuming annotation of anomalous segments in training videos. In this approach, only video-level labels are used during training, yet the model produces frame-level anomaly scores. Weakly supervised video anomaly detection (WSVAD) suffers from misidentification of abnormal and normal instances during training, so it is important to extract higher-quality features from the available videos. With this motivation, the present paper uses transformer-based features, named Videoswin Features, followed by an attention layer based on dilated convolution and self-attention to capture long- and short-range dependencies in the temporal domain. This gives a better understanding of the available videos. The proposed framework is validated on a real-world dataset, the ShanghaiTech Campus dataset, where it achieves performance competitive with current state-of-the-art methods. The model and the code are available at https://github.com/kapildeshpande/Anomaly-Detection-in-Surveillance-Videos
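
To make the weak-supervision idea concrete, the following is a minimal sketch of how a single video-level label can supervise per-snippet anomaly scores. It is an illustration under stated assumptions, not the paper's implementation: the abstract does not specify the training loss, so the top-k pooling and binary cross-entropy used here are a common choice in WSVAD work that is assumed for this example. The function names `video_score` and `weak_bce_loss`, the value `k=3`, and the sample scores are all hypothetical.

```python
import math


def video_score(snippet_scores, k=3):
    """Pool per-snippet anomaly scores into one video-level score
    by averaging the k highest scores (top-k pooling, an assumption)."""
    if not snippet_scores:
        raise ValueError("snippet_scores must not be empty")
    k = max(1, min(k, len(snippet_scores)))
    top = sorted(snippet_scores, reverse=True)[:k]
    return sum(top) / k


def weak_bce_loss(snippet_scores, video_label, k=3, eps=1e-7):
    """Binary cross-entropy between the pooled video score and the
    video-level label (1 = video contains an anomaly, 0 = normal)."""
    # Clamp the pooled score so that log() never receives 0.
    p = min(max(video_score(snippet_scores, k), eps), 1.0 - eps)
    return -(video_label * math.log(p) + (1 - video_label) * math.log(1.0 - p))


# Hypothetical per-snippet scores in [0, 1], e.g. sigmoid outputs of a scorer.
abnormal_video = [0.1, 0.2, 0.9, 0.8, 0.1, 0.7]
normal_video = [0.1, 0.2, 0.1, 0.3, 0.1, 0.2]

loss_abnormal = weak_bce_loss(abnormal_video, video_label=1)
loss_normal = weak_bce_loss(normal_video, video_label=0)
```

Only the pooled score is compared with the label, so no snippet needs its own annotation. At inference time the per-snippet scores themselves serve as the frame-level anomaly scores.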
