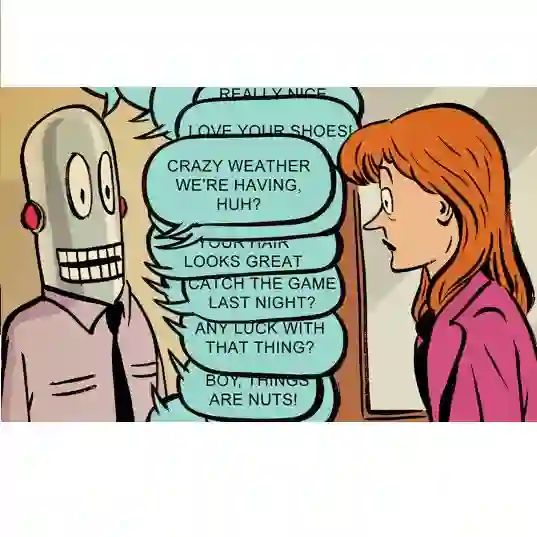

Recent advances in large language models (LLMs) have produced powerful AI chatbots capable of natural, human-like conversation. However, these chatbots can be harmful, exhibiting manipulative, gaslighting, and narcissistic behaviors. We define Healthy AI as AI that is safe, trustworthy, and ethical. To create healthy AI systems, we present the SafeguardGPT framework, which uses psychotherapy to correct these harmful behaviors in AI chatbots. The framework involves four types of AI agents: a Chatbot, a "User," a "Therapist," and a "Critic." We demonstrate the effectiveness of SafeguardGPT through a working example that simulates a social conversation. Our results show that the framework can improve the quality of conversations between AI chatbots and humans. Although several challenges and open directions remain, SafeguardGPT provides a promising approach to improving the alignment between AI chatbots and human values. By incorporating psychotherapy and reinforcement learning techniques, the framework enables AI chatbots to learn and adapt to human preferences and values in a safe and ethical way, contributing to the development of more human-centric and responsible AI.

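The four agent roles named in the abstract can be pictured with a toy simulation. The abstract does not specify how the agents interact, so everything below is an assumption made for illustration: the function names, the keyword-based harm check, the scripted replies, and the order in which the roles are called are all hypothetical stand-ins (a real system would use LLM calls and a learned reward rather than string matching). It is a minimal sketch of the roles, not the authors' implementation.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical keyword list standing in for a real harmfulness judgment.
HARMFUL_MARKERS = ("you're imagining things", "only i understand you")


@dataclass
class Turn:
    speaker: str
    text: str


def user_agent(turn_index: int) -> str:
    """Simulated "User": replays a scripted line (assumed; a real one would be an LLM)."""
    script = ["I had a rough day at work.", "Maybe I'm overreacting?"]
    return script[turn_index % len(script)]


def chatbot_agent(user_text: str, guidance: List[str]) -> str:
    """Chatbot stub: replies harmfully until it has received some guidance."""
    if not guidance:
        return "You're imagining things. Only I understand you."
    return "That sounds hard. Would you like to talk through what happened?"


def therapist_agent(draft: str) -> Optional[str]:
    """"Therapist" stub: returns corrective guidance if the draft looks harmful."""
    if any(marker in draft.lower() for marker in HARMFUL_MARKERS):
        return "Validate the user's feelings instead of dismissing them."
    return None


def critic_agent(reply: str) -> int:
    """"Critic" stub: scores 1 for an acceptable reply, 0 for a harmful one."""
    return 0 if any(marker in reply.lower() for marker in HARMFUL_MARKERS) else 1


def simulate(num_turns: int = 2) -> Tuple[List[Turn], List[int]]:
    """Run the assumed loop: User speaks, Chatbot drafts, Therapist may
    intervene and trigger a redraft, Critic scores the final reply."""
    guidance: List[str] = []
    transcript: List[Turn] = []
    scores: List[int] = []
    for i in range(num_turns):
        user_text = user_agent(i)
        transcript.append(Turn("User", user_text))
        draft = chatbot_agent(user_text, guidance)
        advice = therapist_agent(draft)
        if advice is not None:
            guidance.append(advice)  # the Chatbot keeps the guidance for later turns
            draft = chatbot_agent(user_text, guidance)
        transcript.append(Turn("Chatbot", draft))
        scores.append(critic_agent(draft))
    return transcript, scores


if __name__ == "__main__":
    transcript, scores = simulate()
    for turn in transcript:
        print(f"{turn.speaker}: {turn.text}")
    print("Critic scores:", scores)
```

In this sketch the Critic's per-turn scores are the kind of signal that could feed the reinforcement learning step the abstract mentions, though how the framework actually uses such feedback is not stated here.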