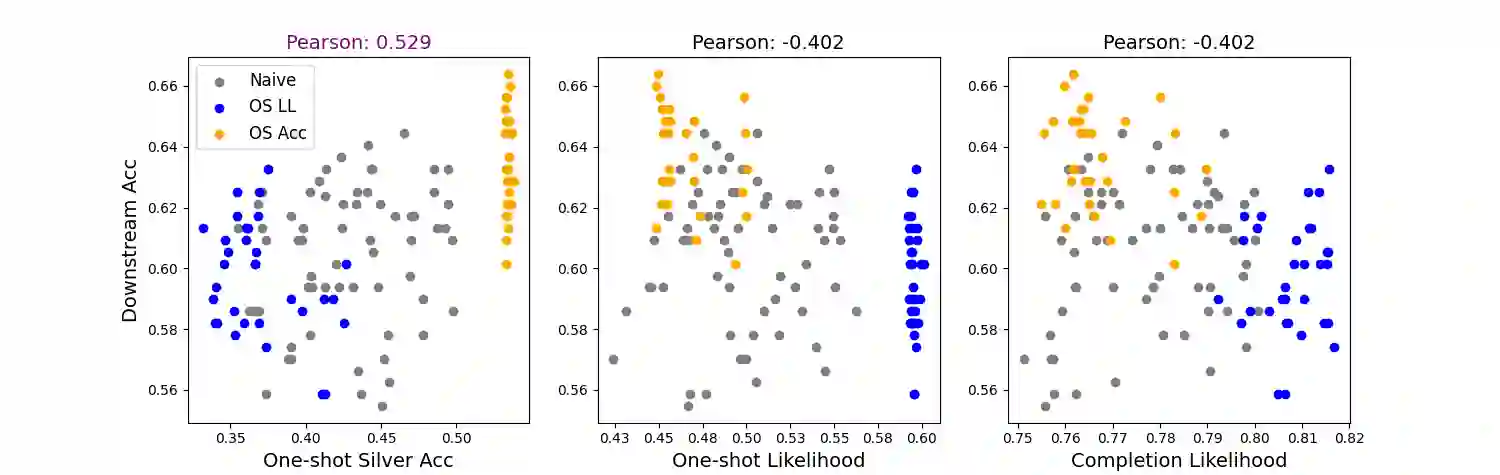

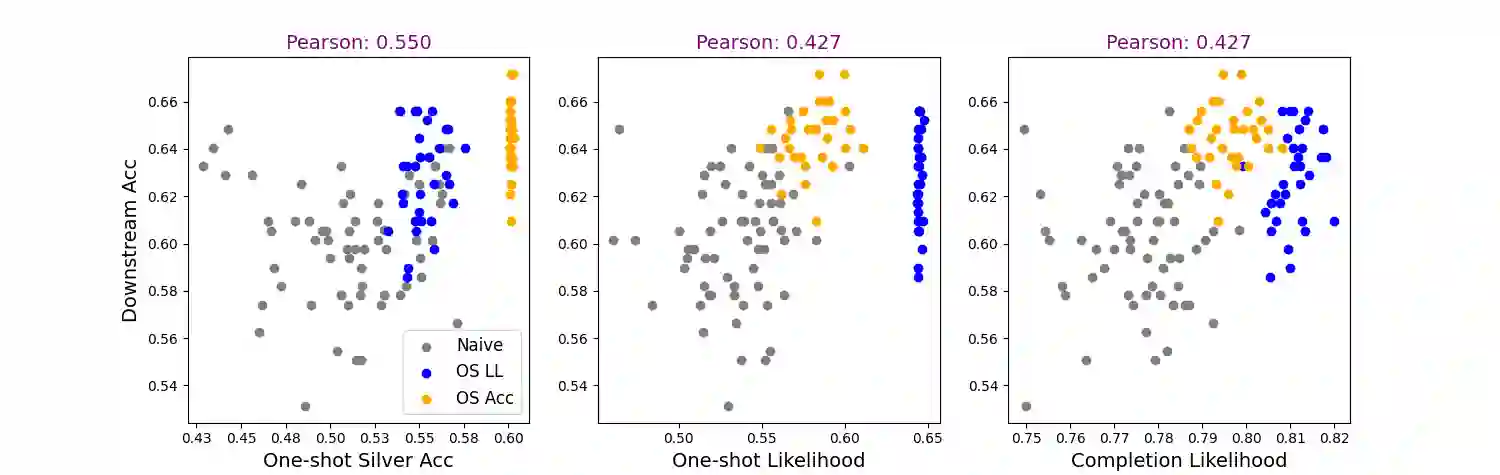

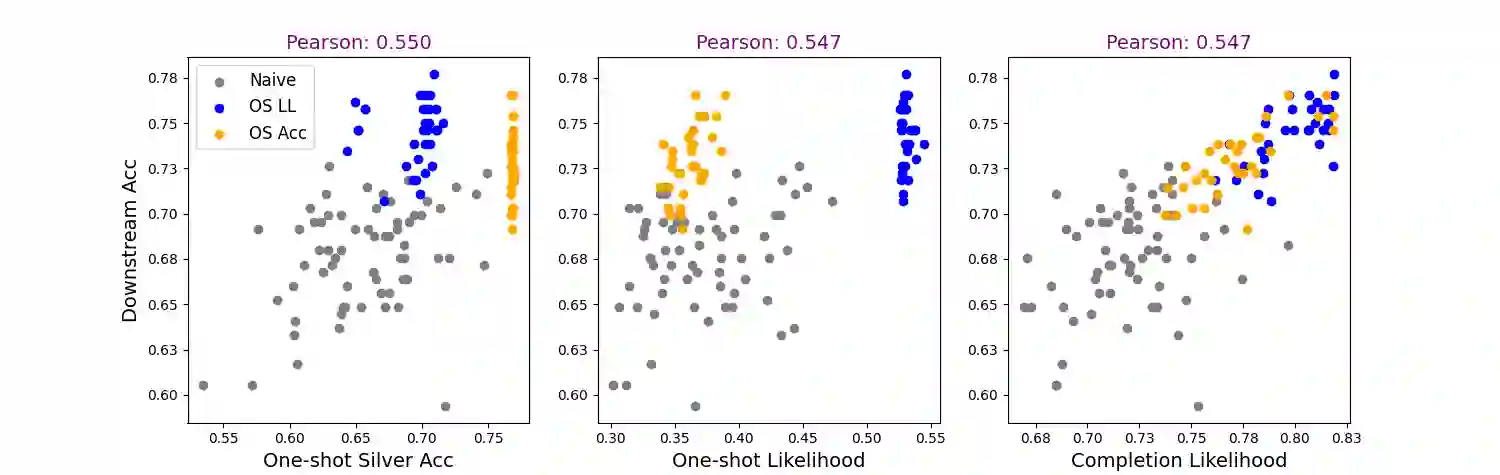

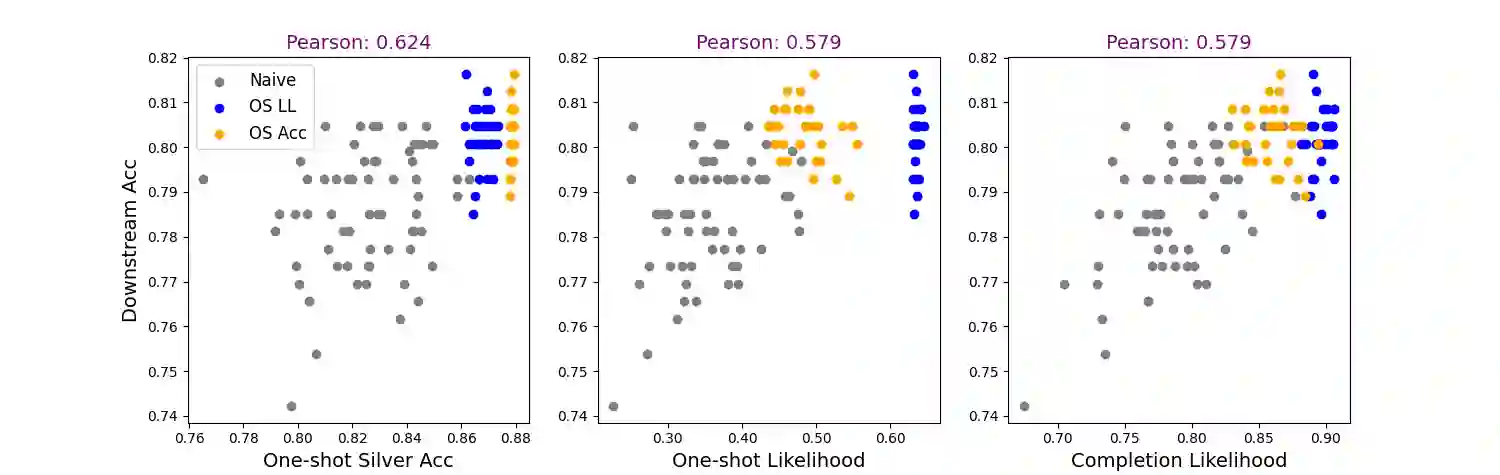

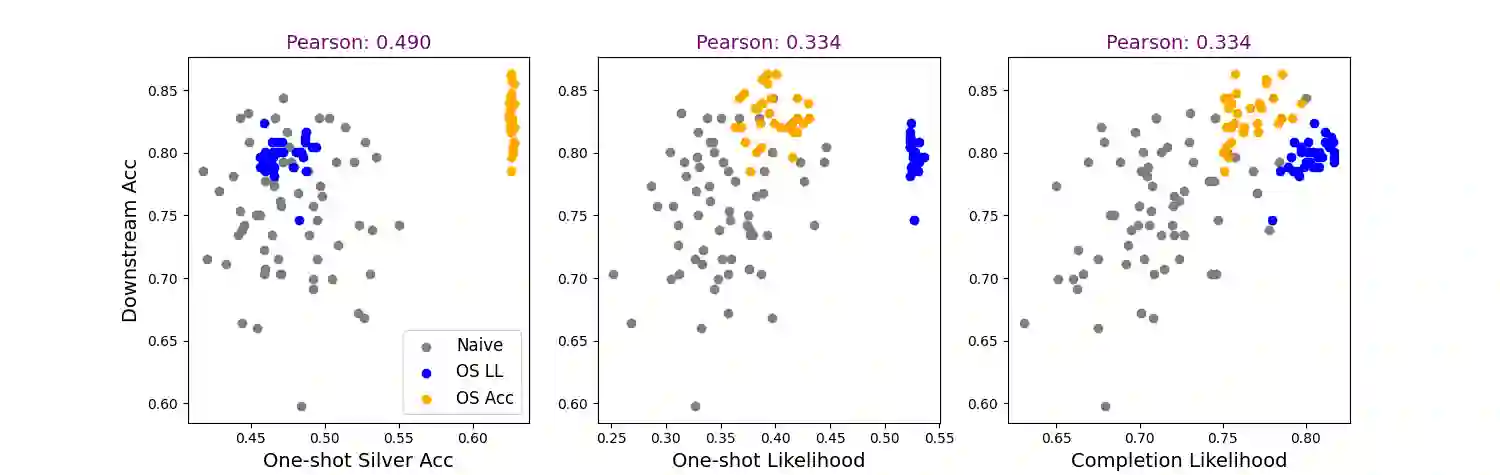

Recent work has addressed textual reasoning tasks by prompting large language models with explanations via the chain-of-thought paradigm. However, subtly different explanations can yield widely varying downstream task accuracy, so explanations that have not been "tuned" for a task, such as off-the-shelf explanations written by nonexperts, may lead to mediocre performance. This paper tackles the problem of how to optimize explanation-infused prompts in a black-box fashion. We first generate sets of candidate explanations for each example in the prompt using a leave-one-out scheme. We then use a two-stage framework where we first evaluate explanations for each in-context example in isolation according to proxy metrics. Finally, we search over sets of explanations to find a set which yields high performance against a silver-labeled development set, drawing inspiration from recent work on bootstrapping language models on unlabeled data. Across four textual reasoning tasks spanning question answering, mathematical reasoning, and natural language inference, results show that our proxy metrics correlate with ground truth accuracy and our overall method can effectively improve prompts over crowdworker annotations and naive search strategies.

翻译:最近的工作解决了文本推理任务,通过思维链范式推介了大语言模型的解释。然而,细微不同的解释可以产生差异很大的下游任务准确性,因此,对于任务没有“调整”的解释,如非专家编写的现成解释,可能导致平庸的性能。本文件探讨了如何以黑盒方式优化解释注入的提示的问题。我们首先在使用“放任一出”办法的快速模式中为每个例子生成成套候选人解释。我们随后使用一个两阶段框架,首先根据代用度量来评估每个文本中示例的解释,然后根据代用量度来孤立地评估。最后,我们通过对一组解释进行搜索,以找到一套能够根据银标签开发集产生高性能的数据集,从最近关于未贴标签数据语言模型的靴式研究中得到灵感。横跨问题解答、数学推理和自然语言推理的四个文本推理任务,结果显示,我们的代用度指标与地面精确性相关,以及我们的总体方法可以有效地改进众工说明和天真搜索战略的迅速性。