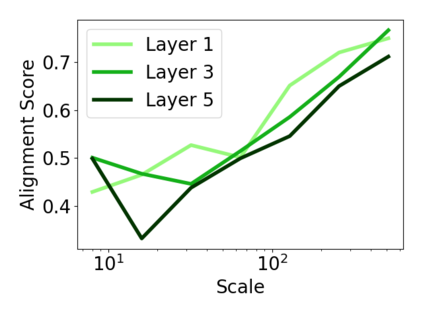

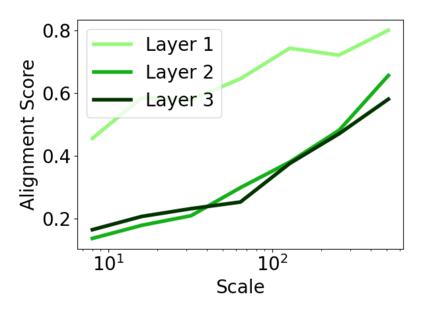

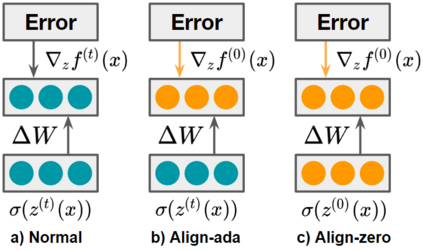

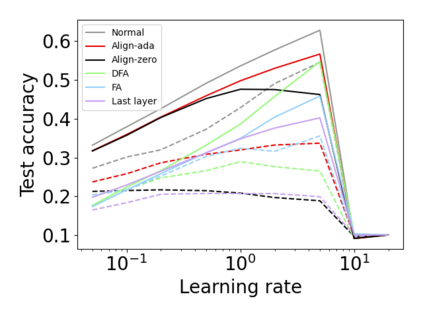

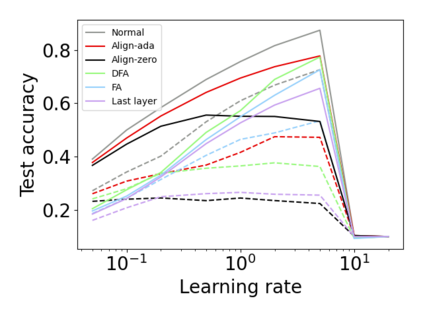

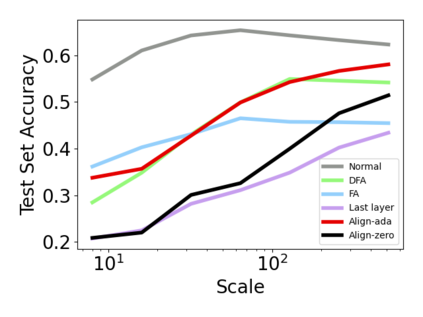

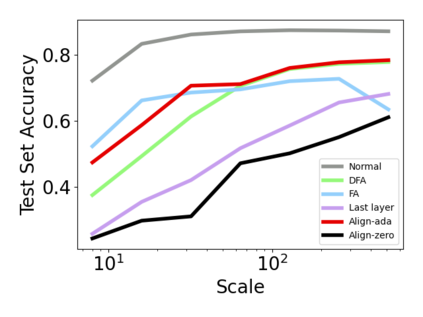

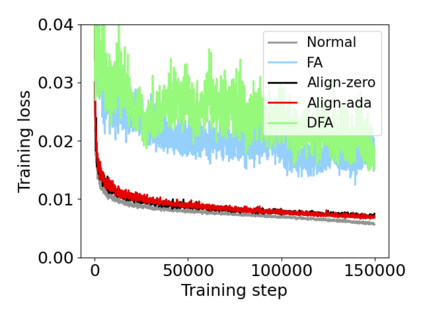

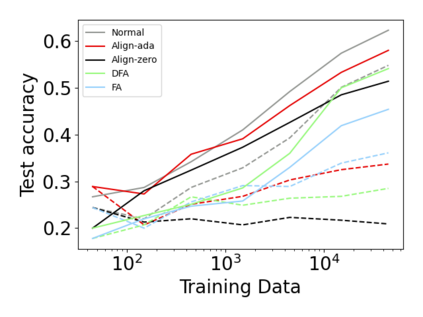

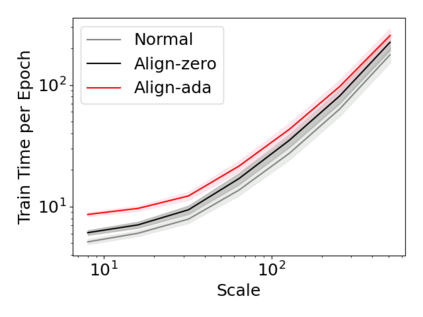

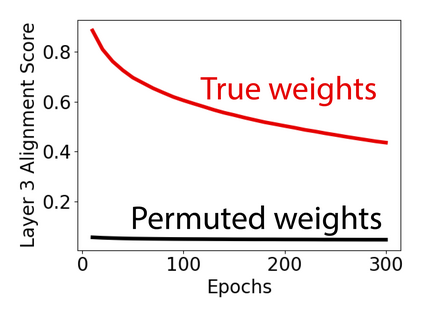

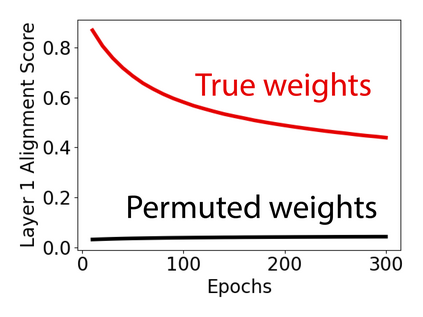

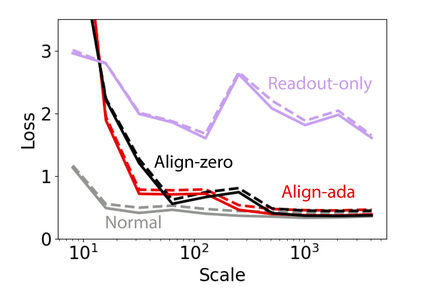

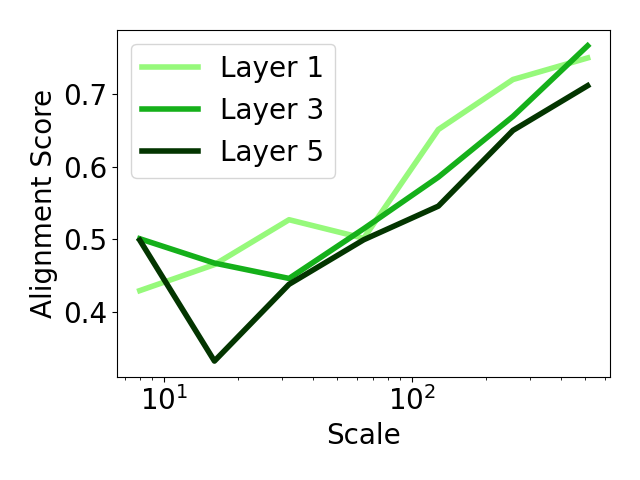

Recent work has examined the theoretical and empirical properties of wide neural networks trained in the Neural Tangent Kernel (NTK) regime. Given that biological neural networks are much wider than their artificial counterparts, we consider wide neural networks in the NTK regime as a possible model of biological neural networks. Leveraging NTK theory, we show theoretically that gradient descent drives layerwise weight updates that are aligned with the correlations of their input activity, weighted by error, and we demonstrate empirically that this result also holds in wide networks of finite width. The alignment result allows us to formulate a family of biologically motivated, backpropagation-free learning rules that are theoretically equivalent to backpropagation in infinite-width networks. We test these learning rules on benchmark problems in feedforward and recurrent neural networks and demonstrate that, in wide networks, they perform comparably to backpropagation. The proposed rules are particularly effective in low-data regimes, which are common in biological learning settings.

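The alignment claim in the abstract can be illustrated in the simplest possible setting. The sketch below is not the paper's learning rule and is not taken from it: it assumes a single linear layer with a squared-error loss, where the gradient with respect to the weights is exactly the sum over examples of the outer product of the output error and the input activity. The abstract's result concerns the layers of wide multilayer networks, which this toy case does not cover. All names here (`forward`, `error_weighted_update`, `numerical_gradient`, `cosine`) are hypothetical and chosen for illustration only.

```python
"""Toy check: for one linear layer with squared error, the weight gradient
is an error-weighted sum of input activity. Illustrative assumption only."""
import random


def forward(W, x):
    """Linear layer y = W x, with W stored as a list of rows."""
    return [sum(w * x_j for w, x_j in zip(row, x)) for row in W]


def loss(W, inputs, targets):
    """Half the summed squared error over the dataset."""
    total = 0.0
    for x, t in zip(inputs, targets):
        y = forward(W, x)
        total += 0.5 * sum((y_k - t_k) ** 2 for y_k, t_k in zip(y, t))
    return total


def error_weighted_update(W, inputs, targets):
    """Sum over examples of outer(error, input), built from local quantities."""
    n_out, n_in = len(W), len(W[0])
    G = [[0.0] * n_in for _ in range(n_out)]
    for x, t in zip(inputs, targets):
        y = forward(W, x)
        err = [y_k - t_k for y_k, t_k in zip(y, t)]
        for k in range(n_out):
            for j in range(n_in):
                G[k][j] += err[k] * x[j]
    return G


def numerical_gradient(W, inputs, targets, eps=1e-6):
    """Central finite differences, used only as an independent check."""
    G = [[0.0] * len(W[0]) for _ in W]
    for k in range(len(W)):
        for j in range(len(W[0])):
            old = W[k][j]
            W[k][j] = old + eps
            up = loss(W, inputs, targets)
            W[k][j] = old - eps
            down = loss(W, inputs, targets)
            W[k][j] = old  # restore the weight before moving on
            G[k][j] = (up - down) / (2 * eps)
    return G


def cosine(A, B):
    """Cosine similarity between two matrices viewed as flat vectors."""
    a = [v for row in A for v in row]
    b = [v for row in B for v in row]
    dot = sum(p * q for p, q in zip(a, b))
    norm_a = sum(p * p for p in a) ** 0.5
    norm_b = sum(q * q for q in b) ** 0.5
    return dot / (norm_a * norm_b)


# Small random problem: 8 examples, 4 inputs, 2 outputs (arbitrary sizes).
rng = random.Random(0)
inputs = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(8)]
targets = [[rng.gauss(0, 1) for _ in range(2)] for _ in range(8)]
W = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(2)]

alignment = cosine(error_weighted_update(W, inputs, targets),
                   numerical_gradient(W, inputs, targets))
print(f"cosine alignment with the true gradient: {alignment:.6f}")
```

In this single-layer case the alignment is exact up to floating-point error, so the cosine similarity should be very close to 1. The sketch only shows what "aligned with error-weighted input activity" means operationally; whether and how closely the same holds layer by layer in a deep network is the subject of the abstract's theoretical and empirical claims, not something this example establishes.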