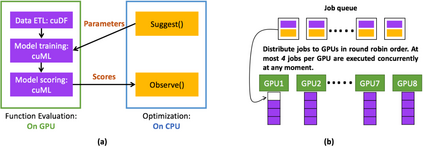

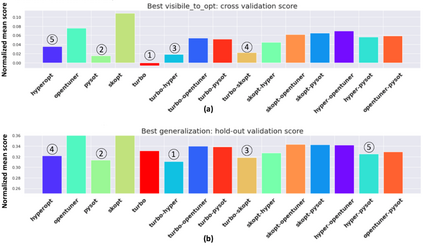

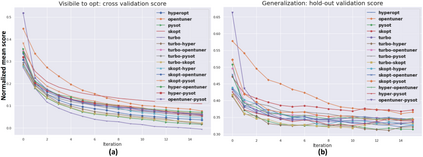

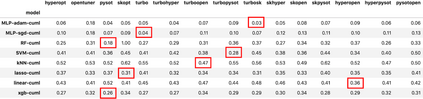

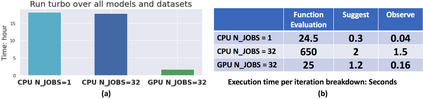

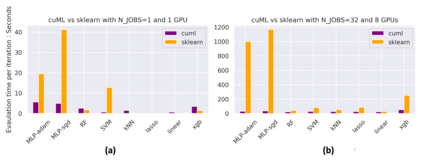

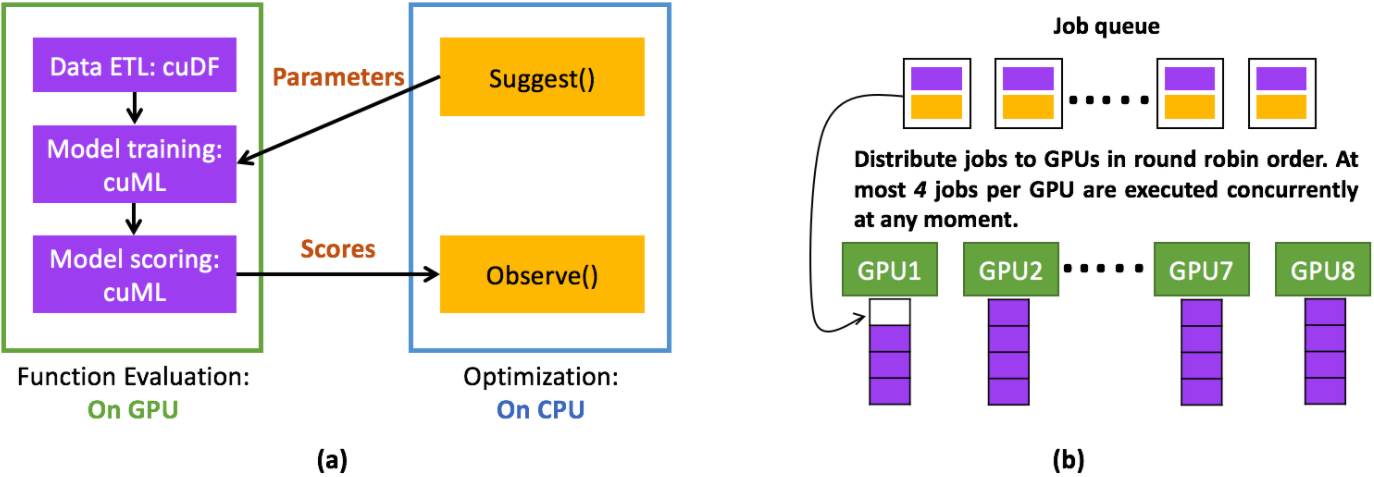

Black-box optimization is essential for tuning complex machine learning algorithms, which are easier to experiment with than to understand. In this paper, we show that a simple ensemble of black-box optimization algorithms can outperform any single one of them. However, searching for such an optimal ensemble requires a large number of experiments. We propose a multi-GPU-optimized framework that accelerates a brute-force search for the optimal ensemble of black-box optimization algorithms by running many experiments in parallel. The lightweight optimization steps run on the CPU, while the expensive model training and evaluation are assigned to GPUs. We evaluate 15 optimizers by training 2.7 million models and running 541,440 optimizations. On a DGX-1, the search time is reduced from more than 10 days on two 20-core CPUs to less than 24 hours on 8 GPUs. With the optimal ensemble found by this GPU-accelerated exhaustive search, we won 2nd place in the NeurIPS 2020 black-box optimization challenge.
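The ensemble search described in the abstract can be illustrated with a small sketch. It is built on several assumptions, none of which come from the paper. It uses a hypothetical ask/tell interface (`suggest`/`observe`). Two toy optimizers (`RandomSearch`, `LocalSearch`) and two toy objectives stand in for real optimizers and real model training. The ensemble rule is also assumed: each member proposes an equal share of every batch, and all members see every observation. Every (ensemble, objective, seed) run is independent, which makes the brute-force search embarrassingly parallel. Here a thread pool stands in for the GPU workers.

```python
import itertools
import random
from concurrent.futures import ThreadPoolExecutor

# Hypothetical ask/tell optimizers (assumption: not the paper's or the
# challenge's actual API). The search space is the box [-1, 1]^dim.
class RandomSearch:
    def __init__(self, dim, rng):
        self.dim, self.rng = dim, rng

    def suggest(self, n):
        return [[self.rng.uniform(-1, 1) for _ in range(self.dim)] for _ in range(n)]

    def observe(self, xs, ys):
        pass  # random search ignores feedback


class LocalSearch(RandomSearch):
    """Hill climber: Gaussian perturbations around the best point seen so far."""
    def __init__(self, dim, rng, step):
        super().__init__(dim, rng)
        self.step, self.best_x, self.best_y = step, None, float("inf")

    def suggest(self, n):
        if self.best_x is None:
            return super().suggest(n)  # no feedback yet: sample uniformly
        return [[min(1.0, max(-1.0, v + self.rng.gauss(0, self.step)))
                 for v in self.best_x] for _ in range(n)]

    def observe(self, xs, ys):
        for x, y in zip(xs, ys):
            if y < self.best_y:
                self.best_x, self.best_y = x, y


class Ensemble:
    """Assumed rule: split each batch among members; all members see all results."""
    def __init__(self, members):
        self.members = members

    def suggest(self, n):
        k, xs = len(self.members), []
        for i, member in enumerate(self.members):
            xs.extend(member.suggest(n // k + (1 if i < n % k else 0)))
        return xs

    def observe(self, xs, ys):
        for member in self.members:
            member.observe(xs, ys)


OPTIMIZERS = {
    "random": lambda dim, rng: RandomSearch(dim, rng),
    "local_small": lambda dim, rng: LocalSearch(dim, rng, step=0.05),
    "local_large": lambda dim, rng: LocalSearch(dim, rng, step=0.5),
}
OBJECTIVES = {  # toy stand-ins for "train a model and return its validation loss"
    "sphere": lambda x: sum(v * v for v in x),
    "shifted_l1": lambda x: sum(abs(v - 0.3) for v in x),
}


def run_one(names, obj_name, seed, dim=4, rounds=16, batch=8):
    """One independent optimization run; returns the best (lowest) value found."""
    rng = random.Random(seed)
    opt = Ensemble([OPTIMIZERS[name](dim, rng) for name in names])
    f, best = OBJECTIVES[obj_name], float("inf")
    for _ in range(rounds):
        xs = opt.suggest(batch)       # cheap step (CPU in the paper's setup)
        ys = [f(x) for x in xs]       # expensive step (GPU in the paper's setup)
        opt.observe(xs, ys)
        best = min(best, min(ys))
    return best


def search(max_size=2, seeds=range(5), workers=4):
    """Brute force: score every ensemble of up to max_size distinct optimizers."""
    names = sorted(OPTIMIZERS)
    candidates = [c for k in range(1, max_size + 1) for c in itertools.combinations(names, k)]
    jobs = [(c, o, s) for c in candidates for o in OBJECTIVES for s in seeds]
    with ThreadPoolExecutor(max_workers=workers) as pool:  # independent jobs run in parallel
        results = list(pool.map(lambda job: run_one(*job), jobs))
    # Simplification: average raw best values; a real benchmark would normalize per objective.
    n_runs = len(OBJECTIVES) * len(seeds)
    scores = {c: 0.0 for c in candidates}
    for (c, _, _), r in zip(jobs, results):
        scores[c] += r / n_runs
    return min(scores, key=scores.get), scores


if __name__ == "__main__":
    best, scores = search()
    for combo, score in sorted(scores.items(), key=lambda kv: kv[1]):
        print(combo, round(score, 4))
    print("best ensemble:", best)
```

Seeding each job with its own `random.Random(seed)` keeps results reproducible even though jobs run concurrently. The number of jobs grows combinatorially with the number of optimizers, which is why the paper offloads the expensive evaluations to GPUs.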
