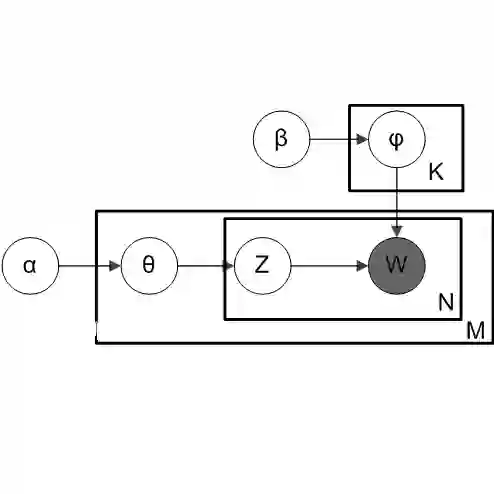

Latent Dirichlet Allocation (LDA) is a popular topic model. Because the input corpus of an LDA algorithm can contain millions to billions of tokens, LDA training is very time-consuming, which may prevent the use of LDA in many scenarios, e.g., online services. GPUs have benefited modern machine learning algorithms and big data analysis because they provide high memory bandwidth and computation power. Consequently, many frameworks, e.g., TensorFlow, Caffe, and CNTK, support using GPUs to accelerate popular data-intensive machine learning algorithms. However, we observe that existing LDA solutions on GPUs are not satisfactory. In this paper, we present CuLDA_CGS, an efficient and scalable GPU-based approach to accelerating large-scale LDA problems. CuLDA_CGS is designed to solve LDA problems efficiently at high throughput. To this end, we first carefully design workload partitioning and synchronization mechanisms to exploit the benefits of multiple GPUs. Then, we offload the LDA sampling process to each individual GPU, optimizing it from the sampling algorithm, parallelization, and data compression perspectives. Evaluations show that CuLDA_CGS outperforms state-of-the-art LDA solutions by a large margin (up to 7.3X) on a single GPU, and achieves an additional 3.0X speedup on 4 GPUs. The source code is publicly available at https://github.com/cuMF/CuLDA_CGS.
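For readers unfamiliar with the sampling step that the abstract refers to, the following is a minimal single-threaded sketch of LDA training by collapsed Gibbs sampling. It assumes that "CGS" in the system name stands for collapsed Gibbs sampling, which the abstract does not spell out. It is not the CuLDA_CGS implementation: the function name `lda_cgs`, the hyperparameter defaults, and the toy corpus are all hypothetical, and none of the GPU partitioning, synchronization, or compression techniques are shown.

```python
import random


def lda_cgs(docs, num_topics, vocab_size, alpha=0.1, beta=0.01, iters=50, seed=0):
    """Illustrative collapsed Gibbs sampler for LDA (hypothetical helper).

    docs is a list of documents, each a list of integer word ids.
    """
    rng = random.Random(seed)
    doc_topic = [[0] * num_topics for _ in docs]                # tokens per (doc, topic)
    topic_word = [[0] * vocab_size for _ in range(num_topics)]  # tokens per (topic, word)
    topic_total = [0] * num_topics                              # tokens per topic
    assign = []                                                 # topic of every token

    # Start from a random topic assignment for every token.
    for d, doc in enumerate(docs):
        z_d = []
        for w in doc:
            k = rng.randrange(num_topics)
            z_d.append(k)
            doc_topic[d][k] += 1
            topic_word[k][w] += 1
            topic_total[k] += 1
        assign.append(z_d)

    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = assign[d][i]
                # Remove the token's current assignment from the counts.
                doc_topic[d][k] -= 1
                topic_word[k][w] -= 1
                topic_total[k] -= 1
                # Conditional weight of topic t, proportional to
                # (n_dt + alpha) * (n_tw + beta) / (n_t + V * beta).
                weights = [
                    (doc_topic[d][t] + alpha)
                    * (topic_word[t][w] + beta)
                    / (topic_total[t] + vocab_size * beta)
                    for t in range(num_topics)
                ]
                # Draw a new topic and add the token back under it.
                k = rng.choices(range(num_topics), weights=weights)[0]
                assign[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][w] += 1
                topic_total[k] += 1
    return doc_topic, topic_word, topic_total


# Toy corpus: four short documents over a six-word vocabulary.
docs = [[0, 1, 0, 1, 2], [3, 4, 3, 4, 5], [0, 2, 1, 0], [5, 3, 4, 4]]
doc_topic, topic_word, topic_total = lda_cgs(docs, num_topics=2, vocab_size=6)
```

Each token update reads and writes the shared count tables, and the loop visits every token in every iteration. With millions to billions of tokens this per-token work dominates training time.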
