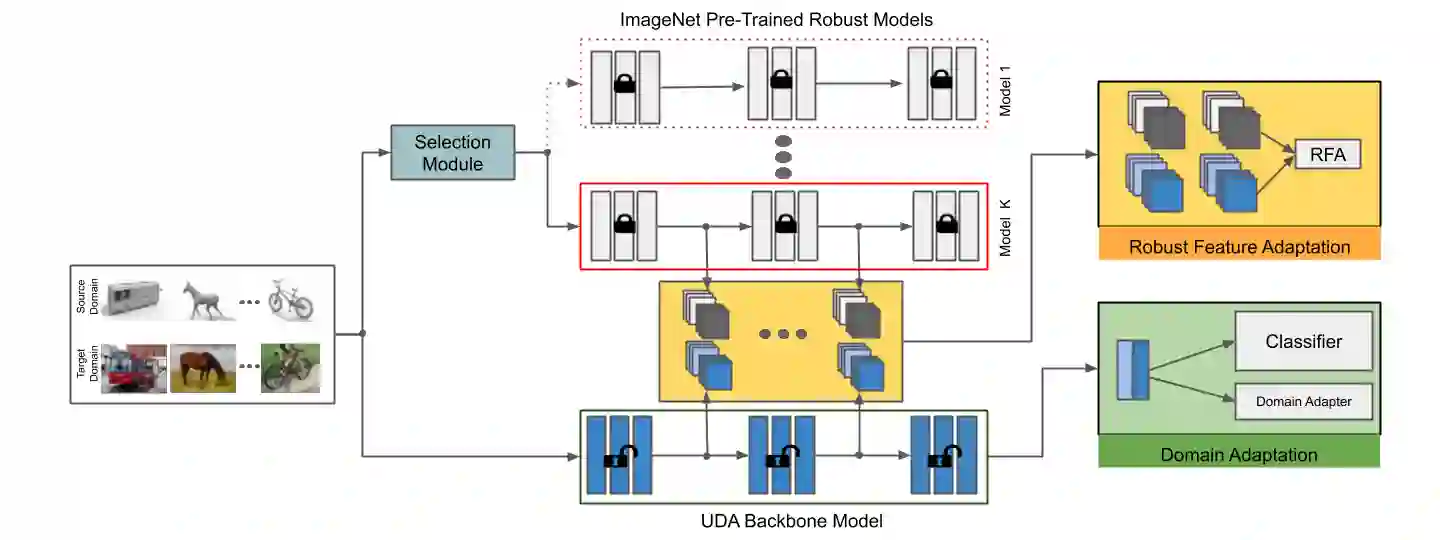

Unsupervised Domain Adaptation (UDA) methods have achieved strong practical results by using deep models to learn representations that transfer from a labeled source domain to an unlabeled target domain. However, prior work focuses on improving the generalization of UDA models on clean examples and does not consider adversarial robustness, which is crucial in real-world applications. Conventional adversarial training is ill-suited to achieving robustness on the unlabeled target domain of UDA, because it trains models on adversarial examples generated with a supervised loss function, which requires labels. In this work, we leverage intermediate representations learned by multiple robust ImageNet models to improve the robustness of UDA models. Our method aligns the features of the UDA model with the robust features learned by ImageNet pre-trained models during domain adaptation training. It uses both the labeled and unlabeled domains and instills robustness without any adversarial intervention or label requirement during domain adaptation training. Experimental results on various UDA benchmarks show that our method significantly improves adversarial robustness over the baseline while maintaining clean accuracy.
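The feature-alignment idea described above can be illustrated with a minimal sketch. The abstract does not specify the distance measure, the layers used, or how the terms are weighted, so everything below is an assumption made for illustration: a mean squared error between the UDA model's features and each robust teacher's features, averaged over teachers and added to the usual UDA objective with a hypothetical weight `weight`. The function names (`mse`, `alignment_loss`, `total_loss`) are likewise hypothetical, and plain Python lists stand in for feature tensors.

```python
from typing import Sequence

Vector = Sequence[float]


def mse(a: Vector, b: Vector) -> float:
    """Mean squared error between two feature vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def alignment_loss(student: Vector, teachers: Sequence[Vector]) -> float:
    """Average MSE between the UDA model's features and each robust teacher's features.

    Assumption: the distance (MSE) and the uniform average over teachers
    are illustrative choices, not taken from the source.
    """
    if not teachers:
        raise ValueError("at least one teacher feature vector is required")
    return sum(mse(student, t) for t in teachers) / len(teachers)


def total_loss(uda_loss: float, student: Vector,
               teachers: Sequence[Vector], weight: float = 1.0) -> float:
    """Combine a domain adaptation loss with the alignment term.

    Assumption: a simple weighted sum; `weight` is a hypothetical hyperparameter.
    """
    return uda_loss + weight * alignment_loss(student, teachers)


# Features for one input from the UDA model and from two robust teachers.
student_feats = [1.0, 2.0]
teacher_feats = [[1.0, 2.0], [3.0, 2.0]]
loss = total_loss(0.5, student_feats, teacher_feats, weight=0.1)
```

Because the alignment term depends only on features and not on labels, a loss of this shape can be computed on source and target samples alike, which is consistent with the label-free property the abstract claims.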
