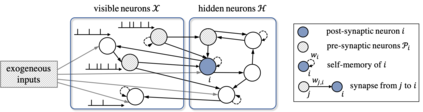

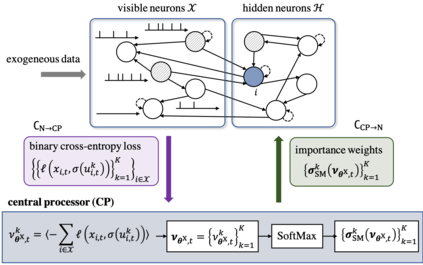

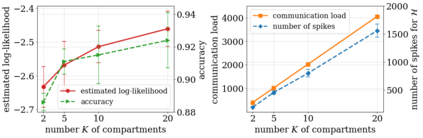

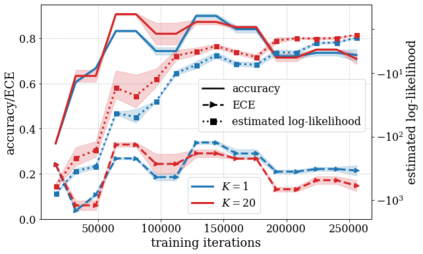

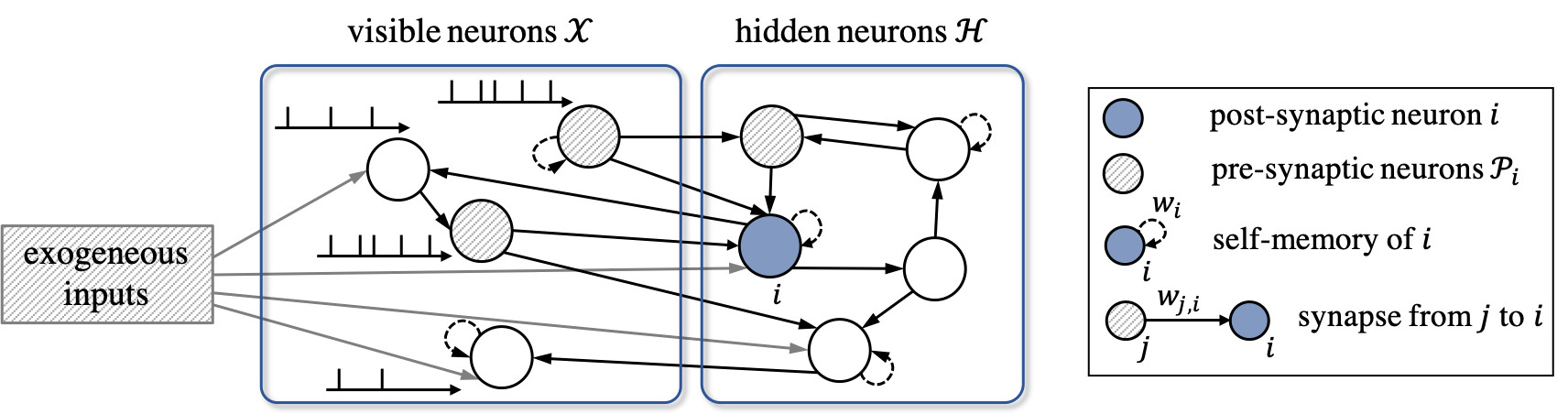

Spiking Neural Networks (SNNs) offer a novel computational paradigm that captures some of the efficiency of biological brains by processing information through binary, dynamic neural activations (spikes). Probabilistic SNN models are typically trained to maximize the likelihood of the desired outputs using unbiased estimates of the log-likelihood gradients. While prior work used single-sample estimators obtained from a single run of the network, this paper proposes to leverage multiple compartments that sample independent spiking signals while sharing synaptic weights. The key idea is to use these signals to obtain more accurate statistical estimates of the log-likelihood training criterion, as well as of its gradient. The approach is based on generalized expectation-maximization (GEM), which optimizes a tighter approximation of the log-likelihood using importance sampling. The derived online learning algorithm implements a three-factor rule with global per-compartment learning signals. Experimental results on a classification task on the neuromorphic MNIST-DVS dataset demonstrate significant improvements in log-likelihood, accuracy, and calibration as the number of compartments used for training and inference increases.
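To make the multi-compartment idea concrete, here is a minimal Python sketch under stated assumptions; it is not the paper's GEM algorithm. It assumes a hypothetical toy model with one binary hidden neuron whose spike each of the K compartments samples independently from the network's own distribution, so the importance weight of compartment k reduces to the output likelihood w_k = p(y | h_k). The K-sample estimate is log((1/K) Σ_k w_k), and the self-normalized weights serve as global per-compartment learning signals in a three-factor update of one shared synapse. All names (`log_mean_exp`, `normalized_weights`, `shared_weight_update`, `sample_bound`, `expected_bound`) and the toy probabilities are illustrative.

```python
import math
import random
from typing import List, Sequence


def log_mean_exp(log_ws: Sequence[float]) -> float:
    """Numerically stable log((1/K) * sum_k exp(log_ws[k]))."""
    m = max(log_ws)
    return m + math.log(sum(math.exp(lw - m) for lw in log_ws) / len(log_ws))


def normalized_weights(log_ws: Sequence[float]) -> List[float]:
    """Self-normalized importance weights (a softmax over the K compartments)."""
    m = max(log_ws)
    exps = [math.exp(lw - m) for lw in log_ws]
    total = sum(exps)
    return [e / total for e in exps]


def shared_weight_update(w: float, lr: float, signals: Sequence[float],
                         post_errors: Sequence[float],
                         pre_traces: Sequence[float]) -> float:
    """Three-factor update of one synaptic weight shared by all K compartments.

    Factor 1: global learning signal of compartment k (assumed: its normalized weight).
    Factor 2: postsynaptic term in compartment k, e.g. spike minus firing probability.
    Factor 3: presynaptic eligibility trace in compartment k.
    """
    return w + lr * sum(l * e * t for l, e, t in zip(signals, post_errors, pre_traces))


# Hypothetical toy model: hidden spike h ~ Bernoulli(0.5) and a desired output y
# with p(y | h=1) = 0.9 and p(y | h=0) = 0.2, so the true log p(y) = log(0.55).
P_H = 0.5
P_Y_GIVEN_H = {1: 0.9, 0: 0.2}


def sample_bound(k: int, rng: random.Random) -> float:
    """Run K compartments, each sampling h independently; return the K-sample estimate."""
    log_ws = [math.log(P_Y_GIVEN_H[int(rng.random() < P_H)]) for _ in range(k)]
    return log_mean_exp(log_ws)


def expected_bound(k: int) -> float:
    """Exact expectation of the K-sample estimate, enumerating how many compartments spike."""
    total = 0.0
    for m in range(k + 1):
        prob = math.comb(k, m) * P_H ** m * (1 - P_H) ** (k - m)
        mean_w = (m * P_Y_GIVEN_H[1] + (k - m) * P_Y_GIVEN_H[0]) / k
        total += prob * math.log(mean_w)
    return total


if __name__ == "__main__":
    rng = random.Random(0)
    print("true log p(y):", round(math.log(0.55), 4))
    for k in (1, 2, 4, 8, 16):
        print("K =", k, "expected:", round(expected_bound(k), 4),
              "one run:", round(sample_bound(k, rng), 4))
    # Learning signals for three compartments where only the first one spiked.
    signals = normalized_weights([math.log(0.9), math.log(0.2), math.log(0.2)])
    print("per-compartment learning signals:", [round(s, 3) for s in signals])
```

At K = 1 the expected estimate equals the single-run criterion E[log w]; by Jensen's inequality it never exceeds log p(y), and the standard importance-sampling argument shows it is non-decreasing in K, so in this toy model it moves toward log 0.55 as compartments are added. The normalized weights give larger learning signals to compartments whose sampled spikes better explain the desired output.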

翻译:Spik NeuralNetworks(SNNS)提供了一种新的计算模式,通过二进制神经动态活性激活的处理来捕捉生物大脑的某些效率。 概率性 SNN模型通常会通过使用对日志相似度梯度的公正估计来进行培训,以最大限度地实现预期产出的可能性。 虽然以前的工作曾使用过从网络的单一运行中获得的单模量估计器,但本文提议利用多个舱,在共享合成重量的同时对独立信号进行取样。 关键的想法是利用这些信号来获取对日志相似度培训标准及其梯度的更准确的统计估计。 这种方法基于普遍期望- 最大化( GEM) (GEM) (GEM) (GEM ) (GEM ) (GEM ), 后者利用重要取样优化对日志相似度的更近似度。 衍生的在线学习算法将三要素规则与全局的学习信号一起实施。 在增加用于培训的分数时, 神经形态- DVS 数据集的分类任务实验结果显示在日志相似性、准确性和校准数据组数方面的显著改进。