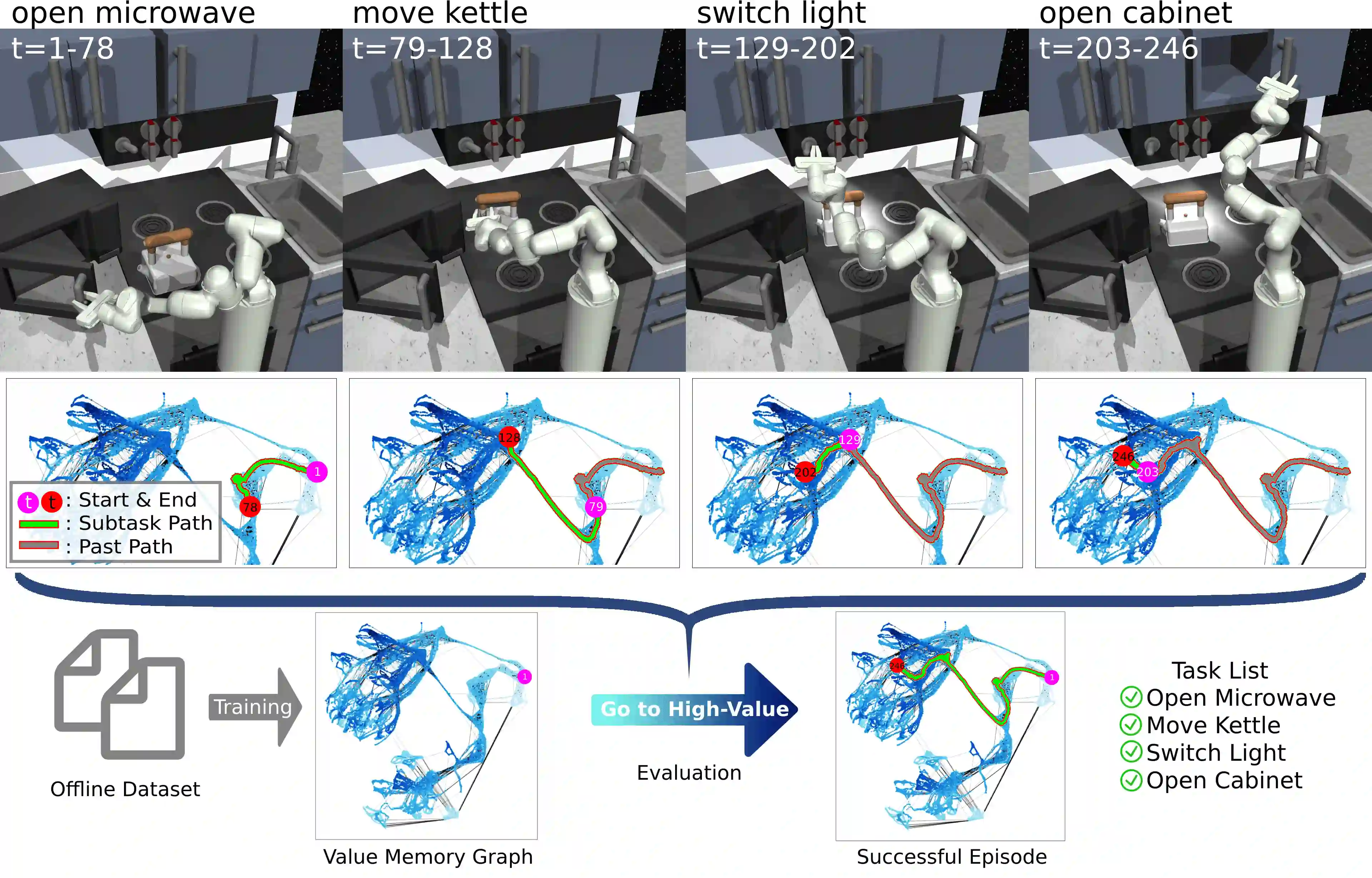

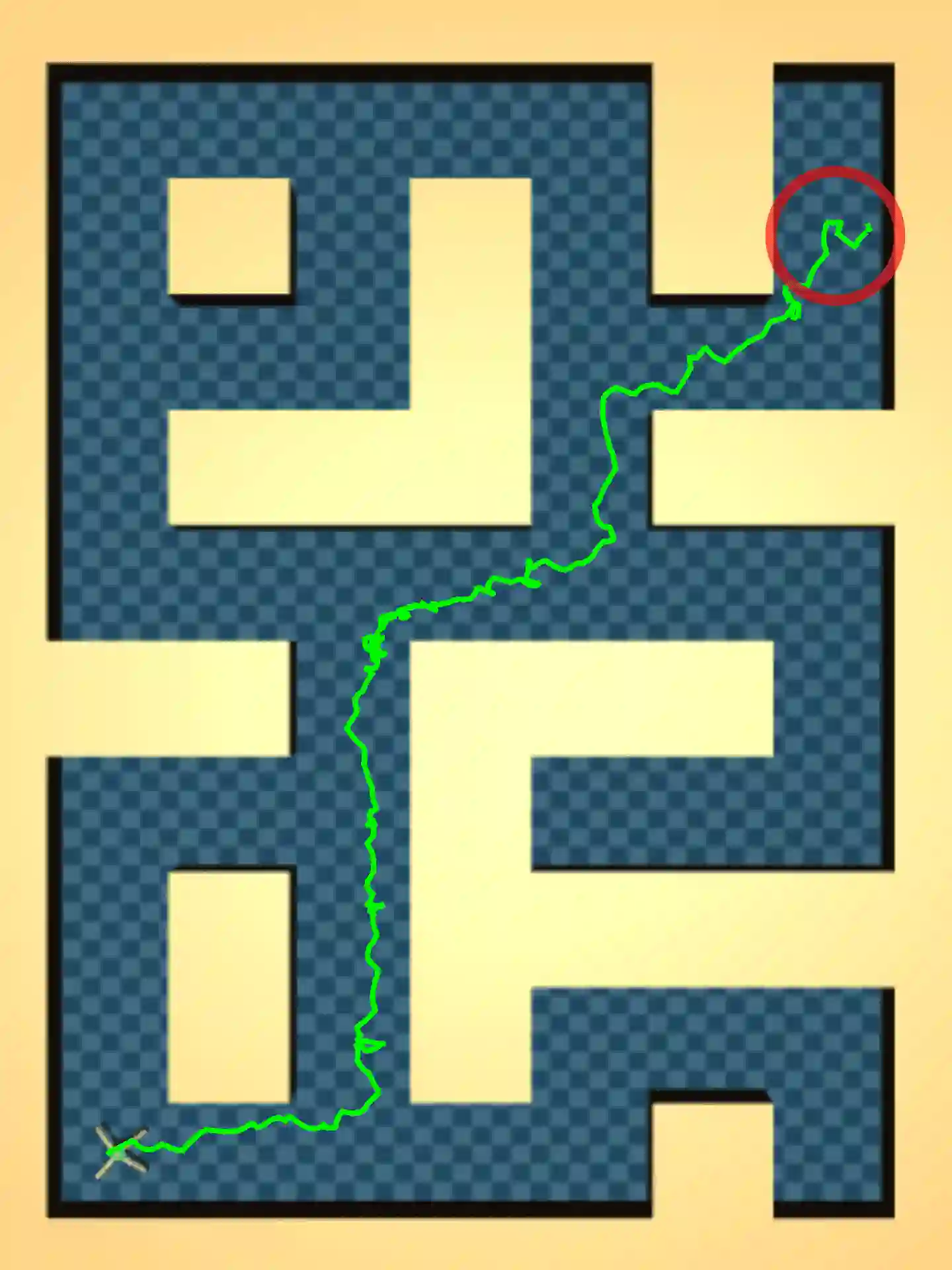

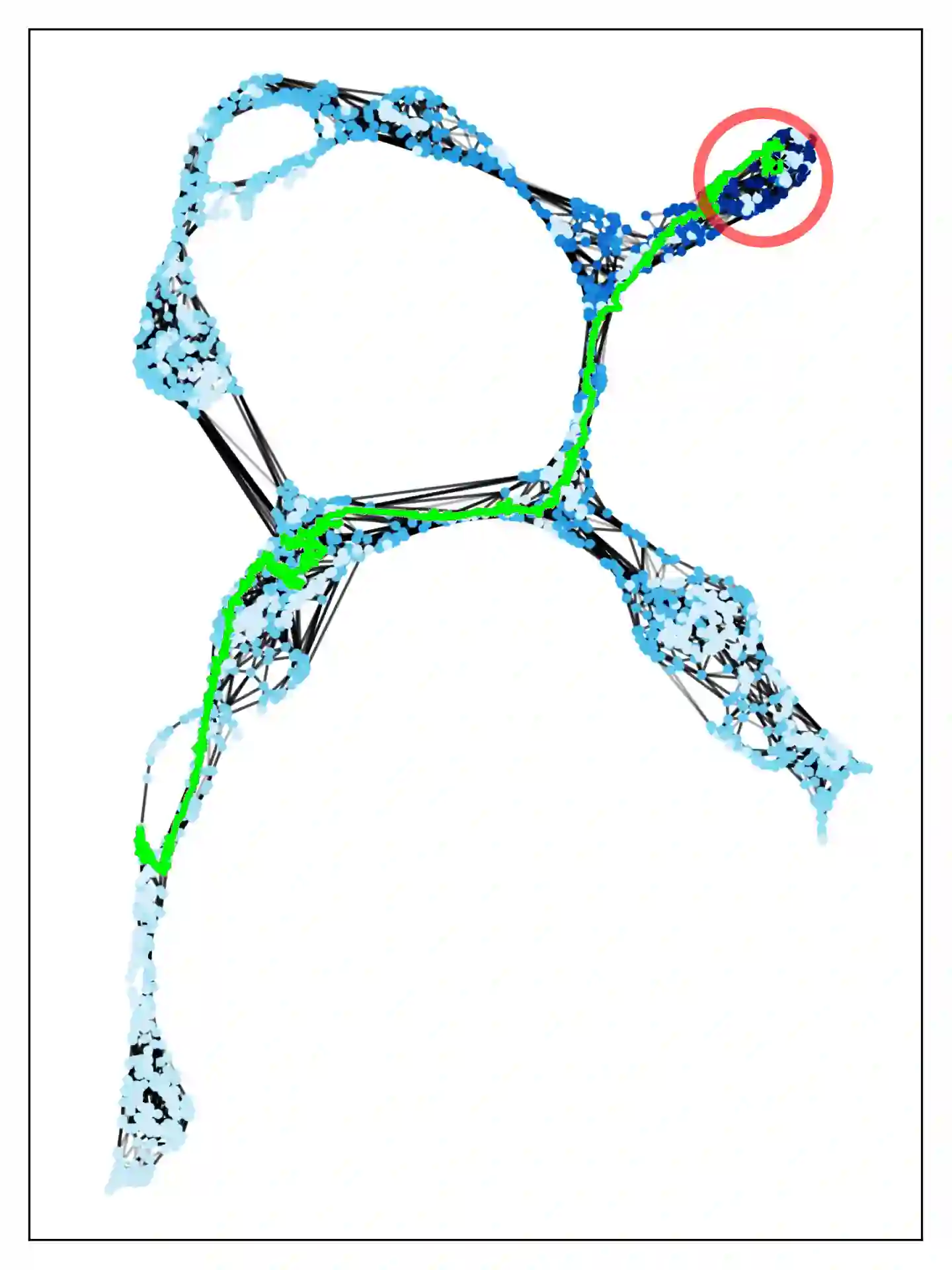

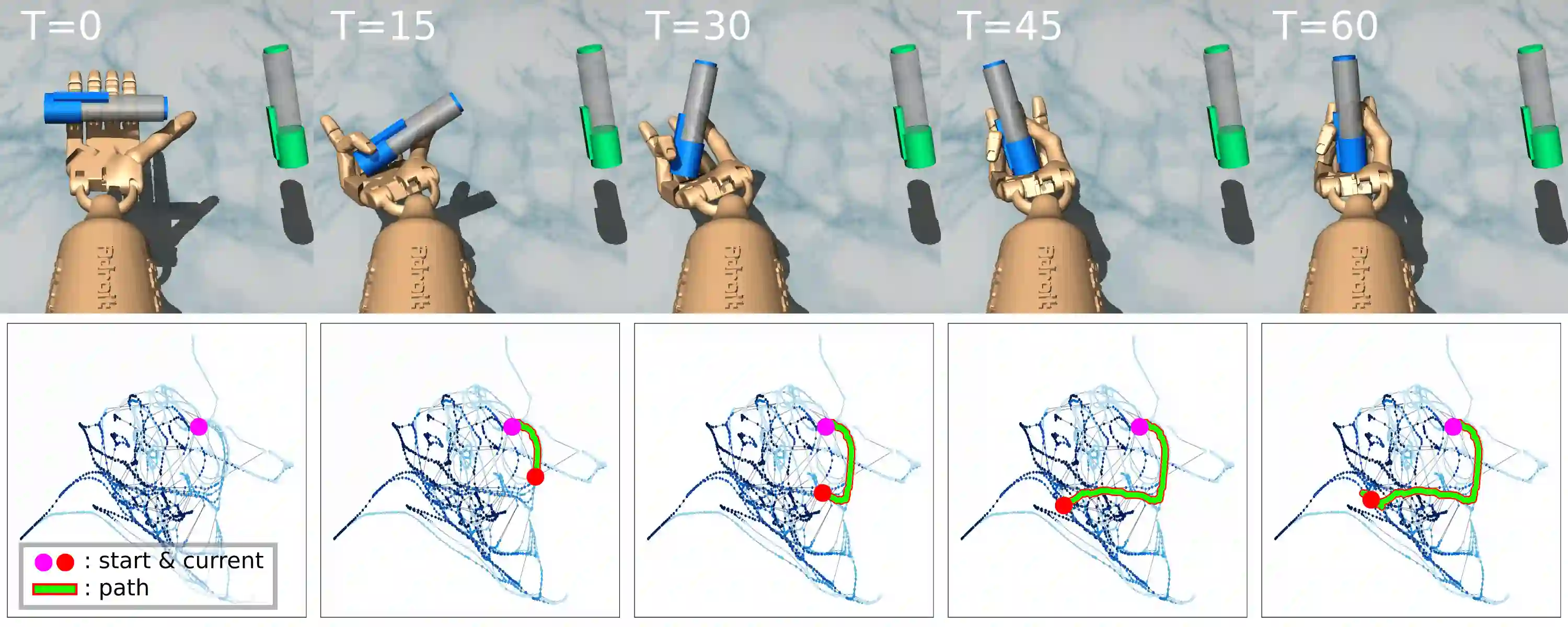

World models in model-based reinforcement learning often produce unrealistic long-horizon predictions because prediction errors compound over timesteps. Recent work on graph-structured world models improves long-horizon reasoning by building a graph to represent the environment, but these models are designed for a goal-conditioned setting and cannot guide the agent to maximize episode returns in a traditional reinforcement learning setting without externally given target states. To overcome this limitation, we design a graph-structured world model for offline reinforcement learning by building a directed-graph-based Markov decision process (MDP), with rewards allocated to each directed edge, as an abstraction of the original continuous environment. Because our world model has small, finite state and action spaces compared to the original environment, value iteration can be easily applied to estimate state values on the graph and identify the best future. Unlike previous graph-structured world models that require externally provided targets, our world model, dubbed Value Memory Graph (VMG), can itself provide high-value targets. VMG can be used to guide low-level goal-conditioned policies, trained via supervised learning, to maximize episode returns. Experiments on the D4RL benchmark show that VMG can outperform state-of-the-art methods in several tasks where long-horizon reasoning ability is crucial. Code will be made publicly available.
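To make the value-iteration step concrete, the following is a minimal sketch of value iteration on a small directed graph whose edges carry rewards. It is not the authors' implementation: the toy graph, the discount factor `gamma`, the convergence tolerance, and the helper names `value_iteration` and `best_next_vertex` are all assumptions chosen for illustration. Each vertex plays the role of a graph state, and each outgoing edge plays the role of a deterministic action.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical toy graph: edges[(u, v)] is the reward for moving from vertex u to v.
Edges = Dict[Tuple[int, int], float]


def value_iteration(num_vertices: int, edges: Edges, gamma: float = 0.9,
                    tol: float = 1e-8, max_iters: int = 10_000) -> List[float]:
    """Estimate vertex values on a deterministic graph MDP with edge rewards."""
    # Group outgoing edges by source vertex for fast lookup.
    outgoing: Dict[int, List[Tuple[int, float]]] = {u: [] for u in range(num_vertices)}
    for (u, v), reward in edges.items():
        outgoing[u].append((v, reward))

    values = [0.0] * num_vertices
    for _ in range(max_iters):
        # Bellman optimality backup: best edge reward plus discounted next value.
        # Vertices with no outgoing edge keep a value of 0 (an assumption).
        new_values = [
            max((r + gamma * values[v] for v, r in outgoing[u]), default=0.0)
            for u in range(num_vertices)
        ]
        delta = max(abs(a - b) for a, b in zip(new_values, values))
        values = new_values
        if delta < tol:
            break
    return values


def best_next_vertex(u: int, edges: Edges, values: List[float],
                     gamma: float = 0.9) -> Optional[int]:
    """Pick the neighbor of u with the highest one-step lookahead value."""
    candidates = [(r + gamma * values[v], v) for (s, v), r in edges.items() if s == u]
    return max(candidates)[1] if candidates else None


# Toy example: going 0 -> 1 -> 2 collects more reward than the shortcut 0 -> 2.
toy_edges: Edges = {(0, 1): 0.0, (1, 2): 1.0, (0, 2): 0.5, (2, 2): 0.0}
toy_values = value_iteration(3, toy_edges)
toy_target = best_next_vertex(0, toy_edges, toy_values)
```

In this sketch the selected neighbor stands in for the high-value target that would be handed to a low-level goal-conditioned policy; how the graph is built from offline data and how targets are chosen in practice are outside what this example covers.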
