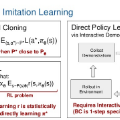

Offline reinforcement learning (RL) methods can generally be categorized into two types: RL-based and imitation-based. RL-based methods could, in principle, enjoy out-of-distribution generalization but suffer from erroneous off-policy evaluation. Imitation-based methods avoid off-policy evaluation but are too conservative to surpass the dataset. In this study, we propose an alternative approach that inherits the training stability of imitation-style methods while still allowing logical out-of-distribution generalization. We decompose the conventional reward-maximizing policy in offline RL into a guide-policy and an execute-policy. During training, the guide-policy and execute-policy are learned using only data from the dataset, in a supervised and decoupled manner. During evaluation, the guide-policy guides the execute-policy by telling it where it should go so that the reward can be maximized, serving as the \textit{Prophet}. By doing so, our algorithm allows \textit{state-compositionality} from the dataset, rather than the \textit{action-compositionality} conducted in prior imitation-style methods. We dub this new approach Policy-guided Offline RL (\texttt{POR}). \texttt{POR} achieves state-of-the-art performance on D4RL, a standard benchmark for offline RL. We also highlight the benefits of \texttt{POR} in terms of improving with supplementary suboptimal data and easily adapting to new tasks by changing only the guide-policy.
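The guide/execute decomposition can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration, not the \texttt{POR} implementation: a toy 2-D environment with dynamics `s' = s + a`, linear least-squares models, a hand-written state-value stand-in `V`, and exponential weights with a hypothetical temperature `beta`. The execute-policy is an inverse-dynamics model fit by plain regression on dataset `(s, s', a)` triples; the guide-policy regresses onto dataset next states, up-weighting transitions that raise `V`; at evaluation the guide proposes a target state and the executor returns the action that reaches it.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy offline dataset: 2-D states, dynamics s' = s + a,
# actions drawn at random (a deliberately uninformed behavior policy).
N, D = 2000, 2
S = rng.normal(size=(N, D))
A = rng.normal(size=(N, D))
S_next = S + A


def fit_linear(X, Y, weights=None):
    """Weighted least-squares fit of Y ~ [X, 1] @ W; returns W."""
    Xb = np.hstack([X, np.ones((len(X), 1))])
    w = np.ones(len(X)) if weights is None else weights
    sw = np.sqrt(w)[:, None]
    W, *_ = np.linalg.lstsq(Xb * sw, Y * sw, rcond=None)
    return W


def predict(W, X):
    """Apply a linear model returned by fit_linear."""
    return np.hstack([X, np.ones((len(X), 1))]) @ W


# Execute-policy: inverse dynamics (s, s') -> a, trained by supervised
# regression on dataset triples only; it never sees rewards or values.
W_exec = fit_linear(np.hstack([S, S_next]), A)


# Hypothetical hand-written state value: states near the origin are "good".
# A real method would have to estimate such a quantity from the dataset.
def V(states):
    return -np.sum(states**2, axis=1)


# Guide-policy: s -> s', regressed only onto next states present in the
# dataset, with transitions that increase V weighted more heavily.
beta = 0.2  # hypothetical temperature for the exponential weights
weights = np.exp(beta * (V(S_next) - V(S)))
W_guide = fit_linear(S, S_next, weights)


def act(s):
    """Decomposed policy: the guide proposes s', the executor picks a."""
    s = np.atleast_2d(s)
    s_target = predict(W_guide, s)
    return predict(W_exec, np.hstack([s, s_target]))[0]


# Roll out the decomposed policy in the toy dynamics.
s = np.array([3.0, -3.0])
for _ in range(20):
    s = s + act(s)
print(np.linalg.norm(s))  # far below the starting norm of about 4.24
```

Note that neither model is trained by evaluating actions outside the dataset: the executor only inverts observed transitions and the guide only regresses onto observed next states, so training stays supervised, while chaining the two at evaluation can still steer toward higher-value states. Swapping in a different `V` (a different task) changes only `W_guide`; `W_exec` is reused unchanged.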
