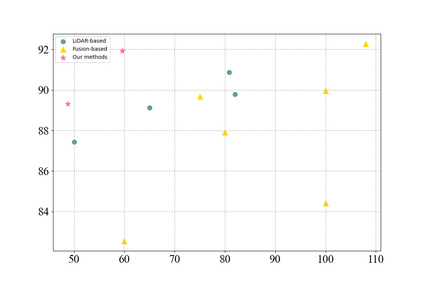

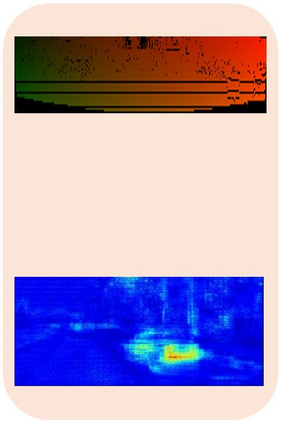

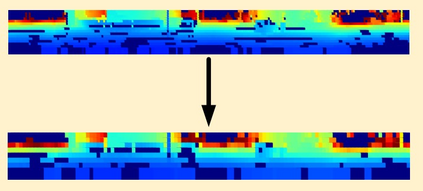

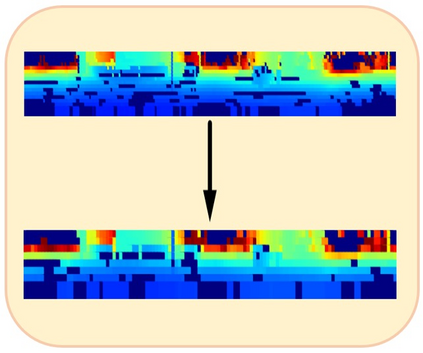

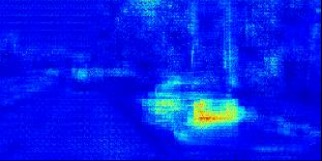

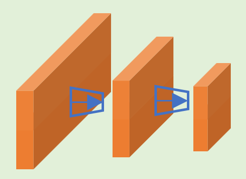

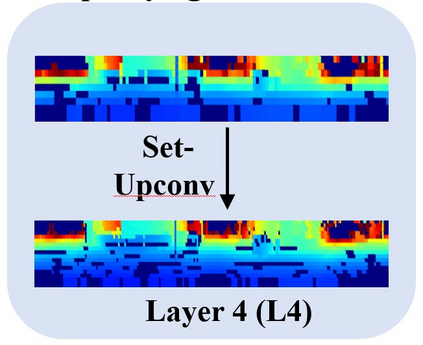

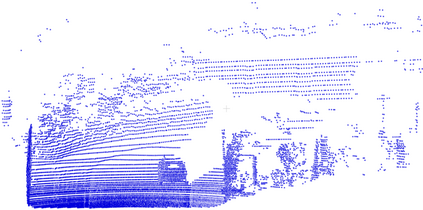

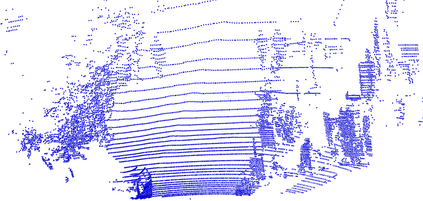

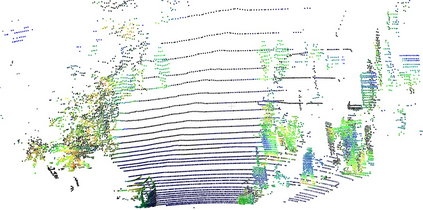

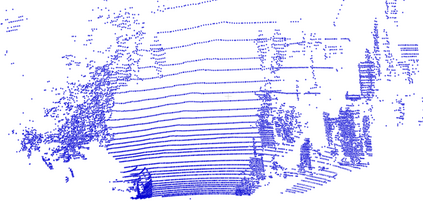

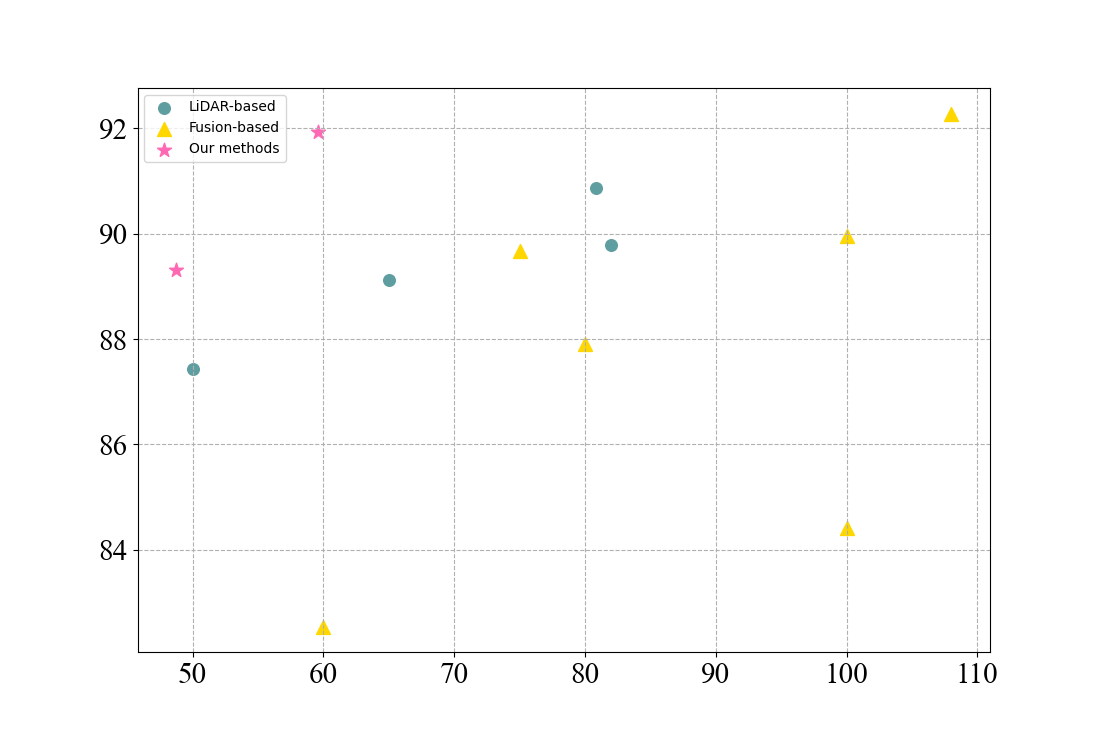

The texture features of color images and the geometric information of LiDAR point clouds are promisingly complementary. However, many challenges remain for efficient and robust feature fusion in 3D object detection. In this paper, unstructured 3D point clouds are first filled into the 2D plane, and 3D point cloud features are extracted faster using projection-aware convolution layers. Further, the index correspondences between the different sensor signals are established in advance during data preprocessing, which enables faster cross-modal feature fusion. To address the misalignment between LiDAR points and image pixels, two new plug-and-play fusion modules, LiCamFuse and BiLiCamFuse, are proposed. In LiCamFuse, soft query weights that perceive the Euclidean distance between bimodal features are proposed. In BiLiCamFuse, a fusion module with dual attention is proposed to deeply correlate the geometric and textural features of the scene. Quantitative results on the KITTI dataset demonstrate that the proposed method achieves better feature-level fusion. In addition, the proposed network has a shorter running time than existing methods.
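The two ideas of precomputed cross-sensor indexes and distance-aware soft weights can be illustrated with a minimal sketch. It is not the LiCamFuse module itself: the pinhole intrinsics (`fx`, `fy`, `cx`, `cy`), the function names, the `temperature` parameter, and the exponential weighting `exp(-d / temperature)` are all assumptions chosen for illustration, since the abstract does not specify them.

```python
import math


def build_point_pixel_index(points, fx, fy, cx, cy, width, height):
    """Precompute which image pixel each 3D point falls on.

    Assumes points are already in the camera frame (z forward) and a simple
    pinhole model; both are illustrative assumptions, not the paper's setup.
    Returns a list of (point_index, u, v) for points inside the image.
    """
    index = []
    for i, (x, y, z) in enumerate(points):
        if z <= 0.0:  # point is behind the camera, so it has no pixel
            continue
        u = math.floor(fx * x / z + cx)
        v = math.floor(fy * y / z + cy)
        if 0 <= u < width and 0 <= v < height:
            index.append((i, u, v))
    return index


def soft_weight(point_feat, pixel_feat, temperature=1.0):
    """Hypothetical soft weight: decays with the Euclidean feature distance."""
    distance = math.dist(point_feat, pixel_feat)
    return math.exp(-distance / temperature)


def fuse(point_feats, image_feats, index, temperature=1.0):
    """Add weighted image features to the point features they align with.

    image_feats is indexed as image_feats[v][u]; points without a matching
    pixel keep their original features.
    """
    fused = [list(f) for f in point_feats]
    for i, u, v in index:
        pixel_feat = image_feats[v][u]
        w = soft_weight(point_feats[i], pixel_feat, temperature)
        fused[i] = [p + w * q for p, q in zip(point_feats[i], pixel_feat)]
    return fused


# Tiny usage example with made-up values.
points = [(0.0, 0.0, 10.0), (1.0, 0.0, -1.0), (100.0, 0.0, 1.0)]
index = build_point_pixel_index(points, 100.0, 100.0, 2.0, 2.0, 4, 4)
point_feats = [[1.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
image_feats = [[[1.0, 0.0] for _ in range(4)] for _ in range(4)]
fused = fuse(point_feats, image_feats, index)
```

Because the index is built once in preprocessing, the fusion step reduces to a lookup per point. A pixel whose feature is far from the point feature contributes little, which is one plausible way to soften the effect of misaligned point and pixel pairs.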
