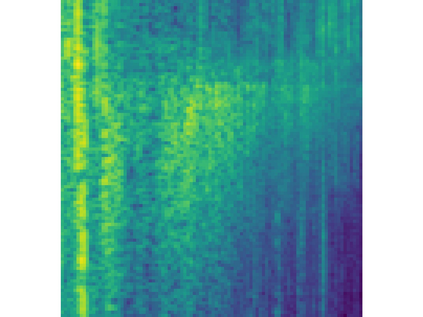

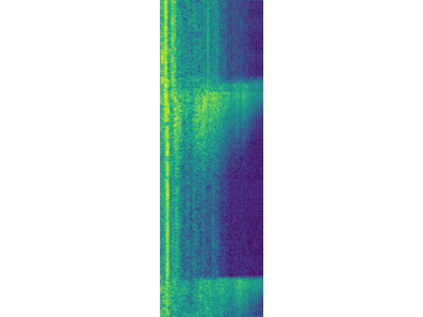

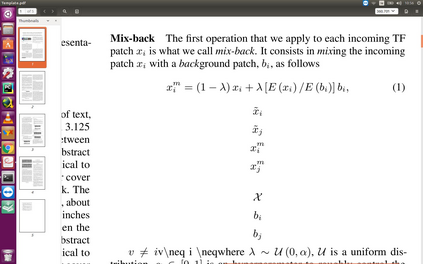

Deep neural networks trained with standard cross-entropy loss memorize noisy labels, which degrades their performance. Most research to mitigate this memorization proposes new robust classification loss functions. Conversely, we explore the behavior of supervised contrastive learning under label noise to understand how it can improve image classification in these scenarios. In particular, we propose a Multi-Objective Interpolation Training (MOIT) approach that jointly exploits contrastive learning and classification. We show that standard contrastive learning degrades in the presence of label noise and propose an interpolation training strategy to mitigate this behavior. We further propose a novel label noise detection method that exploits the robust feature representations learned via contrastive learning to estimate per-sample soft-labels whose disagreements with the original labels accurately identify noisy samples. This detection allows treating noisy samples as unlabeled and training a classifier in a semi-supervised manner. We further propose MOIT+, a refinement of MOIT by fine-tuning on detected clean samples. Hyperparameter and ablation studies verify the key components of our method. Experiments on synthetic and real-world noise benchmarks demonstrate that MOIT/MOIT+ achieves state-of-the-art results. Code is available at https://git.io/JI40X.
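The noise detection idea can be sketched in a few lines. The abstract does not say how the per-sample soft-labels are estimated, so this sketch assumes a k-nearest-neighbour vote over L2-normalised features. The function names `knn_soft_labels` and `detect_noisy`, the cosine similarity, and the default `k` are hypothetical choices for illustration, not the authors' implementation.

```python
import numpy as np


def knn_soft_labels(features, labels, num_classes, k=5):
    """Estimate a soft-label per sample by averaging the one-hot labels
    of its k nearest neighbours in feature space (assumed estimator)."""
    # Cosine similarity between L2-normalised feature vectors.
    feats = features / np.linalg.norm(features, axis=1, keepdims=True)
    sim = feats @ feats.T
    # Exclude each sample from its own neighbourhood.
    np.fill_diagonal(sim, -np.inf)
    # Indices of the k most similar samples per row (unordered).
    nn_idx = np.argpartition(-sim, k, axis=1)[:, :k]
    one_hot = np.eye(num_classes)[labels]
    # Shape (n, k, C) -> (n, C): fraction of neighbours voting per class.
    return one_hot[nn_idx].mean(axis=1)


def detect_noisy(features, labels, num_classes, k=5):
    """Flag a sample as noisy when its estimated soft-label disagrees
    with the label it was given."""
    soft = knn_soft_labels(features, labels, num_classes, k)
    return soft.argmax(axis=1) != labels
```

Samples flagged this way could then be treated as unlabeled for the semi-supervised stage, while the rest keep their original labels. The sketch requires `k` to be smaller than the number of samples and builds a dense similarity matrix, so it only suits small datasets.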
