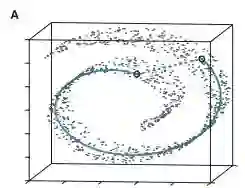

Invoking the manifold assumption in machine learning requires knowledge of the manifold's geometry and dimension, and theory dictates how many samples are required. However, in applications data are limited, sampling may not be uniform, and manifold properties are unknown and (possibly) non-pure; this implies that neighborhoods must adapt to the local structure. We introduce an algorithm for inferring adaptive neighborhoods for data given by a similarity kernel. Starting with a locally-conservative neighborhood (Gabriel) graph, we sparsify it iteratively according to a weighted counterpart. In each step, a linear program yields minimal neighborhoods globally and a volumetric statistic reveals neighbor outliers likely to violate manifold geometry. We apply our adaptive neighborhoods to non-linear dimensionality reduction, geodesic computation and dimension estimation. A comparison against standard algorithms using, e.g., k-nearest neighbors, demonstrates their usefulness.

翻译:在机器学习中援引多重假设要求了解元体的几何和尺寸,而理论则决定了需要多少样本。然而,在应用数据有限的情况下,取样可能并不统一,而多种特性可能并不为人所知,而且(可能)不纯;这意味着邻里必须适应当地结构。我们引入一种算法,用于推断类似内核提供的数据的适应性邻里。从当地保守邻里图(Gabriel)开始,我们根据加权对应方对它进行迭接。在每一个步骤中,线性程序产生全球最小的邻里,而数量统计显示邻里外围可能违反多重几何特征。我们将我们的适应性邻里应用于非线性维度减少、大地测量计算和维度估计。与标准算法(例如,K-最远的邻里)进行比较,以显示其有用性。