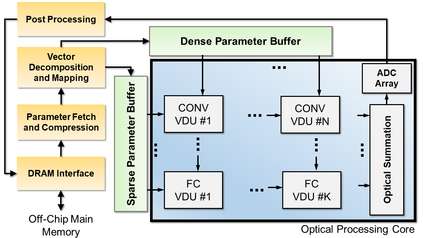

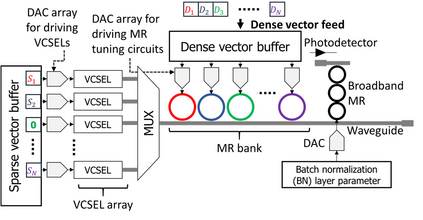

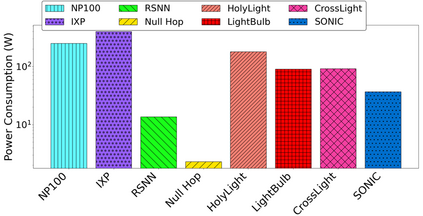

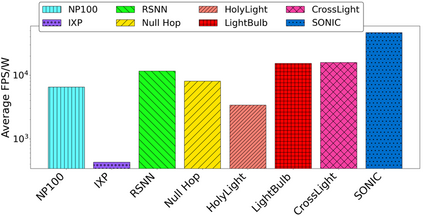

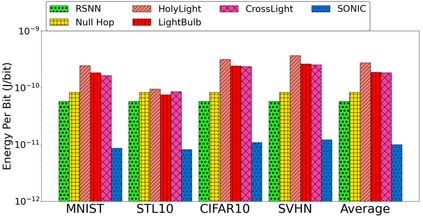

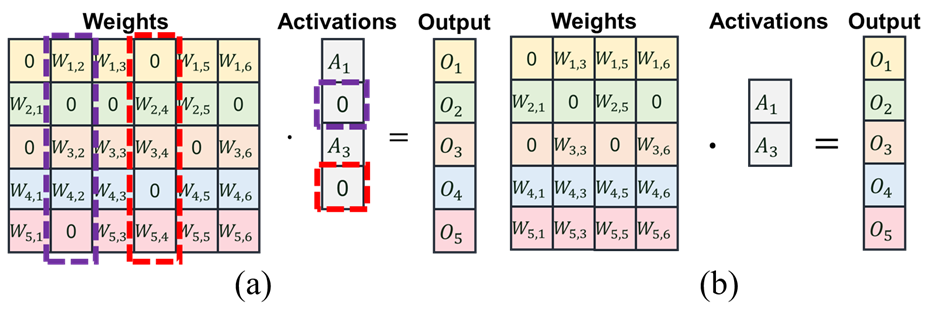

Sparse neural networks can greatly ease the deployment of neural networks on resource-constrained platforms, as they offer compact model sizes while retaining inference accuracy. Because their parameter matrices are sparse, these networks can, in principle, be exploited by accelerator architectures for improved energy efficiency and latency. Realizing these improvements in practice, however, requires sparsity-aware hardware-software co-design. In this paper, we propose SONIC, a novel silicon photonics-based sparse neural network inference accelerator. Our experimental analysis shows that SONIC can achieve up to 5.8x better performance-per-watt and 8.4x lower energy-per-bit than state-of-the-art sparse electronic neural network accelerators, and up to 13.8x better performance-per-watt and 27.6x lower energy-per-bit than the best known photonic neural network accelerators.
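
To make the claim about exploiting sparse parameter matrices concrete, the following minimal sketch compares the number of multiply-accumulate (MAC) operations in a dense matrix-vector product with one that skips zero weights. It is a generic software illustration, not SONIC's photonic datapath; the function names, the matrix `W`, and the vector `x` are hypothetical and chosen only for this example.

```python
def dense_matvec(weights, x):
    """Multiply a weight matrix by a vector, counting every multiply-accumulate."""
    macs = 0
    out = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            acc += w * xi
            macs += 1
        out.append(acc)
    return out, macs


def sparse_matvec(weights, x):
    """Compute the same product but skip zero weights (illustrative zero-skipping)."""
    macs = 0
    out = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            if w != 0.0:  # a zero weight contributes nothing, so no MAC is issued
                acc += w * xi
                macs += 1
        out.append(acc)
    return out, macs


# Hypothetical pruned 3x4 weight matrix: 8 of its 12 entries are zero.
W = [
    [0.5, 0.0, 0.0, -1.0],
    [0.0, 0.0, 2.0, 0.0],
    [0.0, 1.5, 0.0, 0.0],
]
x = [1.0, 2.0, 3.0, 4.0]

dense_out, dense_macs = dense_matvec(W, x)
sparse_out, sparse_macs = sparse_matvec(W, x)
print(dense_out, dense_macs)    # same outputs, 12 MACs
print(sparse_out, sparse_macs)  # same outputs, 4 MACs
```

Both functions return identical outputs, but the zero-skipping version performs only as many MACs as there are nonzero weights. Whether that reduction turns into energy or latency savings depends on hardware that can actually skip the work, which is the motivation for sparsity-aware co-design.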
