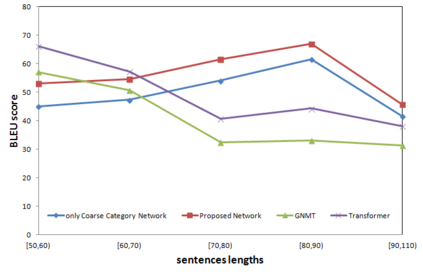

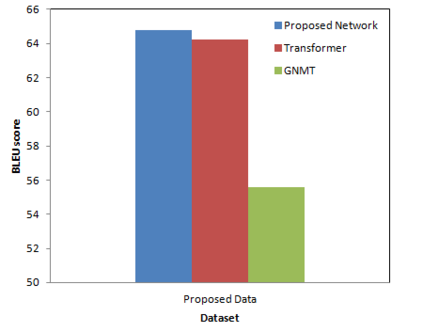

In recent years, sequence-to-sequence neural networks with attention mechanisms have made great progress. However, challenges remain, especially in Neural Machine Translation (NMT), such as lower translation quality on long sentences. In this paper, we present a hierarchical deep neural network architecture to improve the translation quality of long sentences. The proposed network embeds sequence-to-sequence neural networks into a two-level category hierarchy following the coarse-to-fine paradigm. Long sentences are split into shorter sequences before being input. The coarse category network handles these short sequences well, because a sequence-to-sequence network can capture the long-distance dependencies within short sentences. The resulting outputs are then concatenated and corrected by the fine category network. Experiments show that, compared with traditional networks, our method achieves superior results: higher BLEU (Bilingual Evaluation Understudy) scores, lower perplexity, and better performance in imitating expression style and word usage.
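The abstract does not say how long sentences are split or how the two levels are implemented. The Python sketch below only illustrates the data flow of the coarse-to-fine pipeline (split, translate each segment at the coarse level, concatenate, correct at the fine level). Several details are assumptions and not the authors' method. Sentences are split greedily after punctuation or at a hypothetical `max_len` cap. Toy stand-ins (`TOY_LEXICON`, `toy_coarse`, `toy_fine`) take the place of the trained sequence-to-sequence networks. All names are hypothetical.

```python
from typing import Callable, FrozenSet, List

# A "translator" maps a token sequence to a token sequence.
Translator = Callable[[List[str]], List[str]]


def split_into_segments(
    tokens: List[str],
    max_len: int,
    boundaries: FrozenSet[str] = frozenset({",", ";", ":"}),
) -> List[List[str]]:
    """Assumed splitting rule: cut after a boundary token, or when a segment reaches max_len."""
    segments: List[List[str]] = []
    current: List[str] = []
    for tok in tokens:
        current.append(tok)
        if tok in boundaries or len(current) >= max_len:
            segments.append(current)
            current = []
    if current:  # keep any trailing partial segment
        segments.append(current)
    return segments


def hierarchical_translate(
    tokens: List[str], coarse: Translator, fine: Translator, max_len: int = 10
) -> List[str]:
    """Coarse-to-fine data flow: translate short segments, concatenate, then correct."""
    segments = split_into_segments(tokens, max_len)
    partial = [coarse(seg) for seg in segments]        # coarse level: one call per short segment
    draft = [tok for part in partial for tok in part]  # concatenate the partial outputs
    return fine(draft)                                 # fine level: correct the whole draft


# Hypothetical toy stand-ins for the trained networks (word-by-word lookup, simple fix-ups).
TOY_LEXICON = {"le": "the", "chat": "cat", "dort": "sleeps", "et": "and",
               "chien": "dog", "mange": "eats"}


def toy_coarse(segment: List[str]) -> List[str]:
    return [TOY_LEXICON.get(w, w) for w in segment]


def toy_fine(draft: List[str]) -> List[str]:
    out = list(draft)
    if out:
        out[0] = out[0].capitalize()  # sentence-initial capital
    if not out or out[-1] != ".":
        out.append(".")               # ensure final punctuation
    return out


if __name__ == "__main__":
    src = ["le", "chat", "dort", ",", "et", "le", "chien", "mange"]
    result = hierarchical_translate(src, toy_coarse, toy_fine)
    print(" ".join(result))  # prints "The cat sleeps , and the dog eats ."
```

In the sketch, the segment boundary falls after the comma, so the coarse stage never sees the full sentence. Only the fine stage operates on the concatenated draft, which mirrors the division of labour the abstract describes.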
