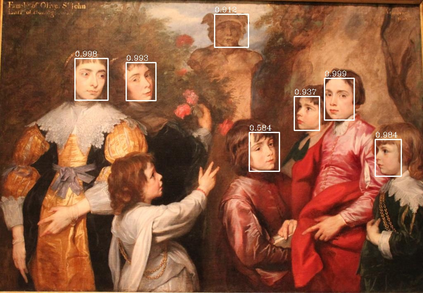

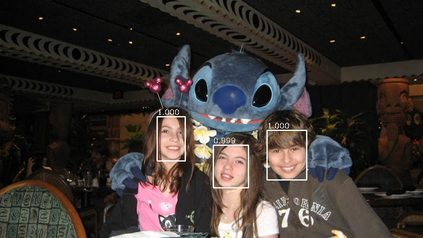

Tiny deep learning on microcontroller units (MCUs) is challenging due to the limited memory size. We find that the memory bottleneck is due to the imbalanced memory distribution in convolutional neural network (CNN) designs: the first several blocks have an order of magnitude larger memory usage than the rest of the network. To alleviate this issue, we propose a generic patch-by-patch inference scheduling, which operates on only a small spatial region of the feature map at a time and significantly cuts down the peak memory. However, a naive implementation produces overlapping patches and computation overhead. We further propose network redistribution to shift the receptive field and FLOPs to the later stages and reduce the computation overhead. Because manually redistributing the receptive field is difficult, we automate the process with neural architecture search to jointly optimize the neural architecture and inference scheduling, leading to MCUNetV2. Patch-based inference effectively reduces the peak memory usage of existing networks by 4-8x. Co-designed with neural networks, MCUNetV2 sets a record ImageNet accuracy on MCU (71.8%) and achieves >90% accuracy on the visual wake words dataset under only 32kB SRAM. MCUNetV2 also unblocks object detection on tiny devices, achieving 16.9% higher mAP on Pascal VOC compared to the state-of-the-art result. Our study largely addresses the memory bottleneck in tinyML and paves the way for various vision applications beyond image classification.
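
To illustrate why patch-by-patch inference leads to overlapping patches, the following minimal sketch traces an output patch back to the input region it depends on. It is not the MCUNetV2 implementation: the helper names (`input_interval`, `patch_input_sizes`), the one-dimensional simplification, and the three-layer stack of unpadded convolutions are all assumptions chosen for illustration.

```python
def input_interval(out_lo, out_hi, layers):
    """Map an inclusive output interval back to the inclusive input interval
    it depends on. `layers` is a list of (kernel, stride) pairs for unpadded
    1-D convolutions, given in forward order (hypothetical configuration)."""
    lo, hi = out_lo, out_hi
    for kernel, stride in reversed(layers):
        # Output index o reads inputs o*stride .. o*stride + kernel - 1.
        lo, hi = lo * stride, hi * stride + kernel - 1
    return lo, hi


def patch_input_sizes(out_size, num_patches, layers):
    """Split the output into equal patches and return the input length
    each patch needs, including the halo added by the receptive field."""
    assert out_size % num_patches == 0, "output must divide evenly into patches"
    step = out_size // num_patches
    sizes = []
    for p in range(num_patches):
        lo, hi = input_interval(p * step, (p + 1) * step - 1, layers)
        sizes.append(hi - lo + 1)
    return sizes


# Hypothetical initial stage: one stride-2 layer followed by two stride-1 layers.
layers = [(3, 2), (3, 1), (3, 1)]

full_lo, full_hi = input_interval(0, 7, layers)
full_size = full_hi - full_lo + 1            # input needed for all 8 outputs
sizes = patch_input_sizes(8, 2, layers)      # input needed per patch

print("full input length:", full_size)       # 25
print("per-patch input lengths:", sizes)     # [17, 17]
print("overlap:", sum(sizes) - full_size)    # 9
```

In this toy setting each patch touches 17 input elements instead of 25, which is the source of the peak-memory saving, while the two patches together read 34 elements, so 9 are processed twice. Moving layers with large kernels out of the patch-based stage shrinks that halo, which is the intuition behind shifting the receptive field to later stages.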
