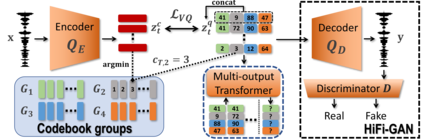

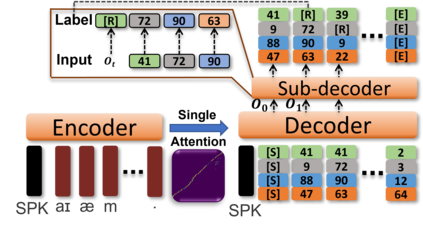

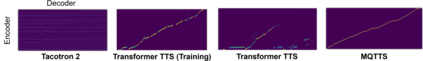

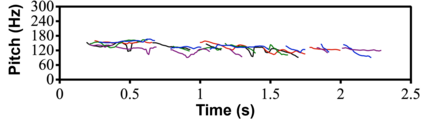

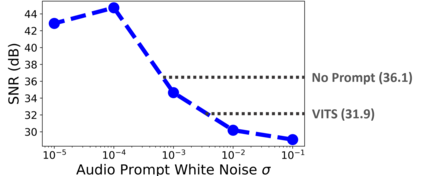

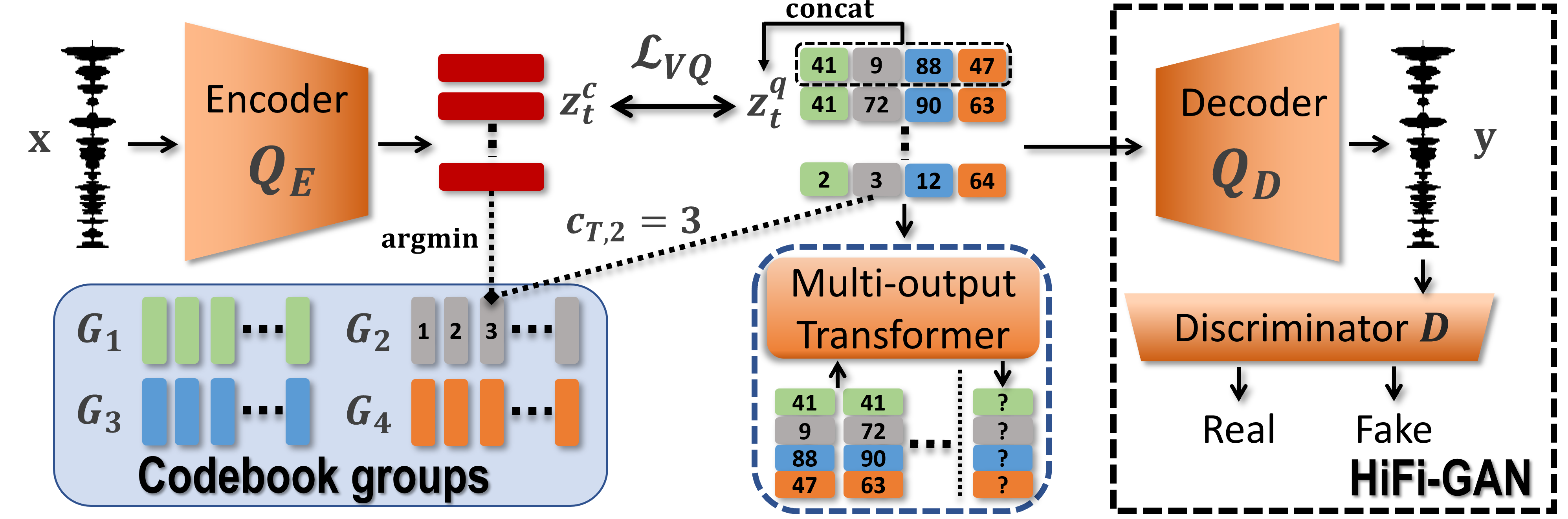

Recent Text-to-Speech (TTS) systems trained on read or acted speech corpora have achieved near human-level naturalness. The diversity of human speech, however, often goes beyond what these corpora cover. We believe the ability to handle such diversity is crucial for AI systems to achieve human-level communication. Our work explores the use of more abundant real-world data for building speech synthesizers. We train TTS systems on real-world speech from YouTube and podcasts. We observe a mismatch between training and inference alignments in mel-spectrogram-based autoregressive models, which leads to unintelligible synthesis, and demonstrate that learned discrete codes within multiple code groups effectively resolve this issue. We introduce our MQTTS system, whose architecture is designed for multiple-code generation and monotonic alignment, along with the use of a clean silence prompt to improve synthesis quality. We conduct ablation analyses to assess the efficacy of our methods. We show that MQTTS outperforms existing TTS systems on several objective and subjective measures.
