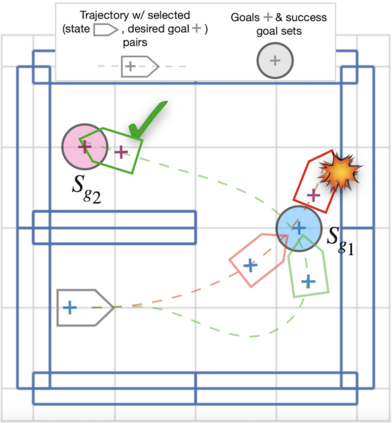

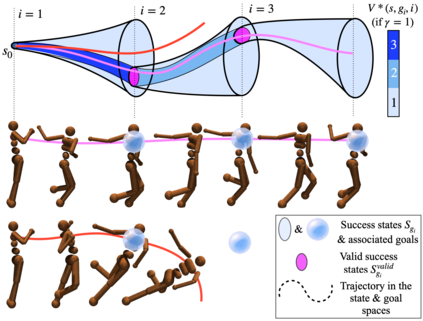

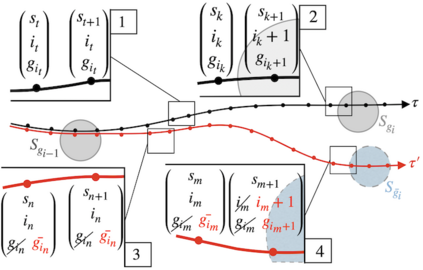

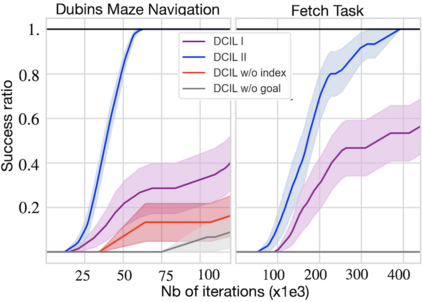

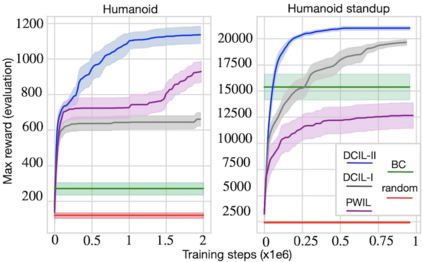

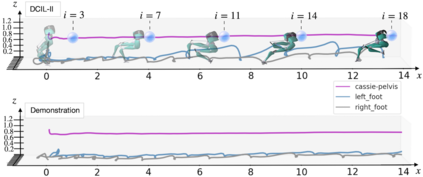

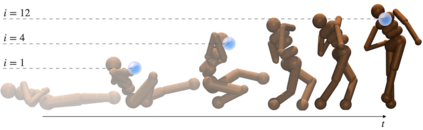

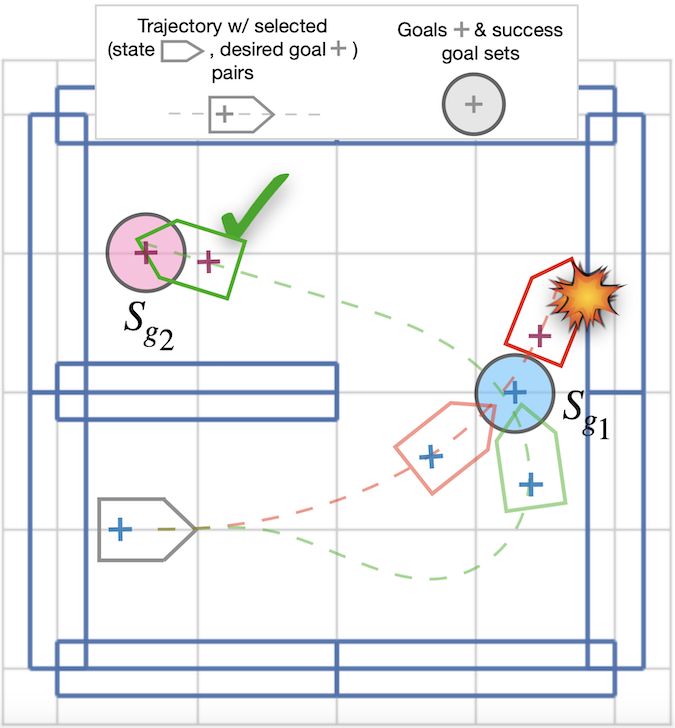

Deep Reinforcement Learning has been successfully applied to learn robotic control. However, the corresponding algorithms struggle when applied to problems where the agent is only rewarded after achieving a complex task. In this context, using demonstrations can significantly speed up the learning process, but demonstrations can be costly to acquire. In this paper, we propose to leverage a sequential bias to learn control policies for complex robotic tasks using a single demonstration. To do so, our method learns a goal-conditioned policy to control a system between successive low-dimensional goals. This sequential goal-reaching approach raises a problem of compatibility between successive goals: we need to ensure that the state resulting from reaching a goal is compatible with the achievement of the following goals. To tackle this problem, we present a new algorithm called DCIL-II. We show that DCIL-II can solve with unprecedented sample efficiency some challenging simulated tasks such as humanoid locomotion and stand-up as well as fast running with a simulated Cassie robot. Our method leveraging sequentiality is a step towards the resolution of complex robotic tasks under minimal specification effort, a key feature for the next generation of autonomous robots.

翻译:深强化学习成功应用了机器人控制。 但是, 当应用到代理器只有在完成复杂任务后才得到奖励的问题时, 相应的算法斗争被成功应用到 。 在这方面, 使用演示可以大大加快学习过程, 但获取的演示成本会很高 。 在本文中, 我们提议使用单一演示来利用顺序偏差来学习复杂的机器人任务的控制政策 。 为此, 我们的方法可以学习一个有目标限制的政策来控制相继低维目标之间的系统 。 这种相继的、 具有目标意义的方法会引发相继目标之间的兼容性问题 : 我们需要确保达到一个目标后产生的状态与实现以下目标相兼容 : 我们需要确保实现一个目标后产生的状态与实现以下目标相兼容 。 为了解决这一问题, 我们提出了名为 DCIL- II 的新算法。 我们展示了DCIL- II 能够以前所未有的样本效率解决一些具有挑战性的模拟任务, 如人类移动和站起, 以及快速运行模拟的 Cassi 机器人。 我们利用连续性的方法是在最低规格努力下解决复杂机器人任务的一个步骤, 。 这是下一代自主机器人的关键特征 。